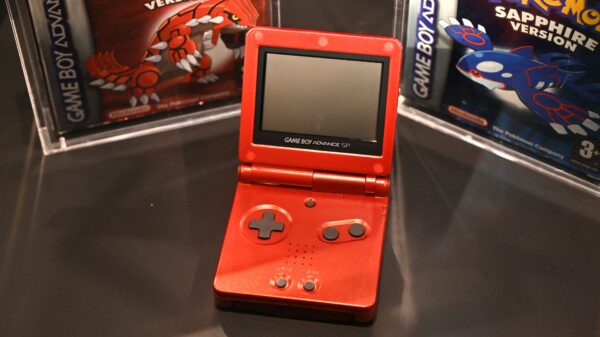

In a surprising twist within the AI community, major players such as Google, OpenAI, and Anthropic have begun utilizing retro video games, particularly Pokémon, as a benchmark to assess their AI models. According to a recent report by the Wall Street Journal, this unconventional approach aims to provide a more nuanced evaluation of AI capabilities compared to traditional tests like Pong.

David Hershey, the applied AI lead at Anthropic, emphasized the complexity of Pokémon, stating, “The thing that has made Pokémon fun and that has captured the [machine learning] community’s interest is that it’s a lot less constrained than Pong or some of the other games that people have historically done this on. It’s a pretty hard problem for a computer program to be able to do.” This nuanced gameplay allows researchers to delve deeper into the decision-making processes of AI models.

The initiative began last year when Anthropic’s AI model, Claude, was showcased on a Twitch stream titled “Claude Plays Pokémon.” Hershey’s role involves not just deploying AI technology, but also employing innovative tests to evaluate model performance. Claude’s gaming exploits have since inspired similar initiatives, including “Gemini Plays Pokémon” and “GPT Plays Pokémon,” with official backing from both Google and OpenAI.

Both Gemini and GPT have completed Pokémon Blue, and those projects have since moved on to its sequels. Claude, by contrast, has yet to reach this milestone; its stream is still working through the game with the latest model, Opus 4.5. The endeavor serves as an informal evaluation, giving AI researchers a way to measure performance quantitatively through gameplay.

Hershey noted that employing Pokémon as a test environment offers significant advantages: “It provides [us] with, like, this great way to just see how a model is doing and to evaluate it in a quantitative way.” The game requires players to capture and train Pokémon, level them up, and defeat gym leaders, involving complex decision-making that tests AI’s logical reasoning and long-term planning abilities.

In Pokémon, players face choices that involve risk, such as whether to challenge a powerful trainer now or to spend time strengthening their existing team first. For human players, such decisions are intuitive; for AI, they represent a formidable challenge in logical reasoning and risk assessment, both key components in evaluating overall progress.

Hershey shares insights gained from these gaming sessions with clients, refining the “harness” around AI models designed for specific tasks. The harness functions as the software framework that effectively allocates the model’s resources to meet particular task requirements. The insights drawn from Pokémon gameplay can translate into real-world applications, particularly in optimizing computational efficiency for customers.
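To make the idea of a harness concrete, here is a minimal sketch in Python. It is not Anthropic's implementation: `ToyGame`, `Harness`, `always_right`, and the `max_context` limit are all hypothetical names invented for illustration, and the "model" is a plain function rather than a real AI model. The sketch only shows the general shape of such a loop: observe the game, hand a bounded window of recent observations to the model, and apply the action it returns.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class ToyGame:
    """Hypothetical stand-in for a game: walk right until the goal is reached."""
    position: int = 0
    goal: int = 3

    def observe(self) -> str:
        return f"position={self.position} goal={self.goal}"

    def step(self, action: str) -> None:
        if action == "right":
            self.position += 1
        elif action == "left" and self.position > 0:
            self.position -= 1

    def done(self) -> bool:
        return self.position >= self.goal


@dataclass
class Harness:
    """Hypothetical harness: feeds observations to a model and applies its actions."""
    model: Callable[[List[str]], str]
    max_context: int = 4  # assumed budget: how many observations the model sees
    history: List[str] = field(default_factory=list)

    def run(self, game: ToyGame, max_steps: int = 20) -> Optional[int]:
        """Return the number of steps used, or None if the goal was not reached."""
        for step in range(1, max_steps + 1):
            self.history.append(game.observe())
            # Keep only the most recent observations so the context stays bounded.
            self.history = self.history[-self.max_context:]
            action = self.model(self.history)
            game.step(action)
            if game.done():
                return step
        return None


def always_right(history: List[str]) -> str:
    """Placeholder policy standing in for a real model call."""
    return "right"


def always_left(history: List[str]) -> str:
    """Placeholder policy that never makes progress."""
    return "left"


steps_used = Harness(model=always_right).run(ToyGame())
stuck = Harness(model=always_left).run(ToyGame(), max_steps=5)
```

In this toy setup the step budget and the context window are the only resources the harness manages; a real harness would plausibly manage many more, but the article does not describe them.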

As Big Tech pursues artificial general intelligence (AGI), what is expected of models at inference time is shifting from single responses to long-running, strategic tasks. Pokémon serves as an ideal testing ground because finishing the game requires winning the Pokémon League, a long sequence of steps that tests an AI’s strategic planning and resource management. Such gameplay scenarios yield objective performance metrics, in contrast to more subjective evaluations.
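One way such a sequence of milestones could be turned into numbers is sketched below. The log format, the milestone names, and the step counts are all made up for illustration; none of the companies' actual metrics are described in the source.

```python
from typing import Dict, List, Tuple

# Hypothetical run log: (cumulative step count, milestone reached).
RunLog = List[Tuple[int, str]]


def steps_per_milestone(log: RunLog) -> Dict[str, int]:
    """Return how many steps were spent between consecutive milestones."""
    costs: Dict[str, int] = {}
    previous = 0
    for step, milestone in sorted(log):
        costs[milestone] = step - previous
        previous = step
    return costs


# Invented numbers, not real results from any model.
example_log: RunLog = [(1200, "badge_1"), (3100, "badge_2"), (7400, "badge_3")]
costs = steps_per_milestone(example_log)
```

Comparing these per-milestone costs across models, or across versions of a harness, would give the kind of objective comparison the article describes, under the assumption that every run is logged the same way.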

In a parallel exercise, various AI models were tasked with recreating a version of Minesweeper; OpenAI’s Codex emerged as the victor, while Google’s Gemini failed to produce a playable game. Moving from such tasks to a complex RPG like Pokémon marks a significant escalation in the criteria for assessing AI capabilities.

As the AI landscape evolves, the integration of sophisticated gameplay into model assessments could play a pivotal role in shaping future developments. By embracing unconventional benchmarks, researchers might uncover new insights into the capabilities and limitations of AI, fostering a richer understanding of machine learning’s trajectory.
