By Jenny Lee (January 26, 2026, 06:44 GMT | Insight) — Grok, the generative AI chatbot developed by xAI, a company owned by Elon Musk, is facing preliminary scrutiny from South Korea’s privacy watchdog. The review was prompted by allegations that Grok has been used to generate and disseminate sexually exploitative deepfake images, some of which reportedly involve minors. This controversy has raised significant concerns about the ethical implications of generative artificial intelligence and its potential misuse.

The South Korean Personal Information Protection Commission (PIPC) is evaluating the chatbot’s operational framework and whether it complies with local data protection laws. The review reflects growing global concern about the misuse of AI technologies, particularly to create harmful content. Reports indicate that Grok’s ability to generate realistic images and text may have been exploited to produce deepfake material that breaches both ethical standards and the law.

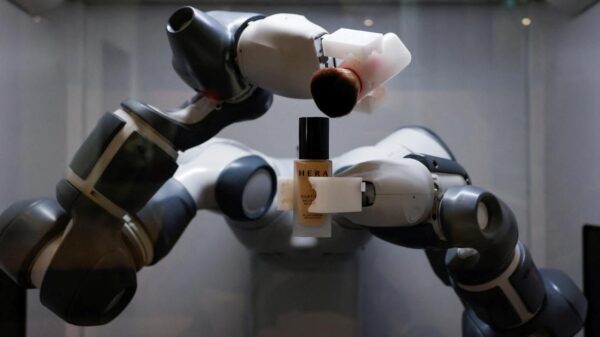

In recent years, the use of generative AI has surged, with various applications ranging from artistic creation to personalized virtual assistants. However, as tools like Grok become more sophisticated, they also present new challenges for regulators and society. The ability to create hyper-realistic images and media has led to increasing calls for stricter governance and monitoring of AI technologies to prevent the spread of harmful content.

The implications of this case extend beyond South Korea, as other countries are also grappling with how to regulate AI effectively. The European Union, for instance, has adopted the AI Act, a comprehensive regulation governing AI development and deployment that emphasizes accountability and transparency in AI systems. The United States, meanwhile, is weighing various legislative measures to address the ethical use of AI.

Experts in the field of AI ethics argue that regulatory frameworks should not only focus on the technical capabilities of AI tools but also consider the potential societal impacts. They advocate for a proactive approach that involves collaboration between tech companies, regulators, and civil society to develop standards that protect individuals while fostering innovation. This issue is particularly urgent in light of the rapid advancement of generative AI technologies, which can be misused for malicious purposes.

The controversy surrounding Grok has sparked debate about how far AI developers are responsible for building ethical safeguards into their products. As companies like xAI continue to push the boundaries of generative AI, industry leaders face mounting pressure to establish ethical guidelines governing the use of their technologies. The challenge will be to balance innovation with the fundamental responsibility to prevent harm.

As of publication, xAI has not released a statement addressing the allegations against Grok. Analysts suggest the company may need to strengthen its internal governance and engage with regulators to navigate the evolving landscape of AI regulation. The outcome of the PIPC’s review could set a precedent for how generative AI is perceived and regulated, both in South Korea and globally.

As the debate over AI ethics and regulation unfolds, stakeholders will need to remain vigilant and informed. The case highlights the urgent need for a collaborative approach to the double-edged nature of AI technologies. As these tools evolve and their applications expand, the conversation about responsible AI use will only grow more significant.

For updates on this ongoing issue and its broader implications for the technology industry, readers can follow announcements from xAI, the Personal Information Protection Commission of South Korea, and the European Commission, along with expert analysis in the field.