Armor, a prominent provider of cloud-native managed detection and response (MDR) services, has issued a critical advisory for enterprises navigating the rapid integration of artificial intelligence (AI) tools into their operations. On January 28, 2026, the Dallas-based company warned that organizations deploying AI without formal governance policies are increasingly exposed to security threats, data loss, and compliance violations. Armor, which protects more than 1,700 organizations across 40 countries, issued the guidance to help enterprises address these emerging risks before they materialize.

“If your organization is not actively developing and enforcing policies around AI usage, you are already behind,” stated Chris Stouff, Chief Security Officer at Armor. He highlighted that unclear rules regarding data management, tool usage, and accountability could lead to significant compliance liabilities as the attack surface expands and traditional security measures prove inadequate.

As enterprises adopt AI in various operational areas, from customer service to software development, security teams face the challenge of establishing a governance framework that balances innovation with risk management. Armor’s team of security experts identified several pressing concerns related to this governance gap. One major issue is the potential for data loss, as employees may input sensitive corporate information into public AI tools, breaching data handling policies and exposing intellectual property.

Another concern is the rise of “shadow AI,” in which business units adopt unapproved AI tools without visibility from IT or security teams. This ungoverned adoption can create data flows that violate compliance requirements, and these violations are often discovered only during audits or security incidents. Furthermore, AI policies, where they exist, are frequently siloed rather than integrated with established governance, risk, and compliance (GRC) frameworks. Without that integration, organizations struggle to demonstrate AI governance to auditors, regulators, or customers, which increases their overall risk.

Regulatory pressure is also mounting as new AI regulations emerge worldwide, including the EU AI Act and sector-specific requirements in industries such as healthcare and finance. As AI adoption accelerates, many organizations find themselves unprepared to meet these demands.

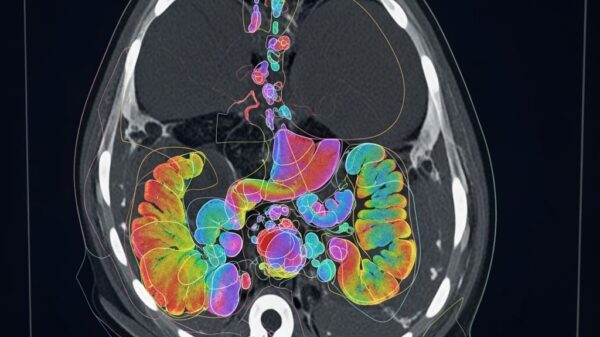

The stakes are particularly high for healthcare organizations and HealthTech companies, which must comply with the Health Insurance Portability and Accountability Act (HIPAA). Their policies must specify what data may be processed, through which channels, how AI-generated outputs are validated, and who is accountable for decisions. Mishandling protected health information shared with AI tools could trigger breach assessment requirements, while inaccuracies in AI-generated clinical documentation raise questions of compliance and liability.

“Healthcare organizations are under enormous pressure to adopt AI for everything from administrative efficiency to clinical decision support,” said Stouff. “But the regulatory environment has not caught up, and the security implications are significant.” He stressed the need for robust policies defining permissible data use with specific AI tools, validation mechanisms for outputs, and clarity on accountability when issues arise.

In response to these challenges, Armor is launching an AI governance framework designed to equip organizations with the necessary tools for transparency, accountability, and effective risk management. This framework comprises five core pillars aimed at addressing the governance gap:

1. AI Tool Inventory and Classification: Identify AI tools in use across the organization, including both approved and unapproved tools, and assess them based on risk levels.

2. Data Handling Policies: Develop explicit guidelines on which categories of data can be used with specific AI tools, particularly focusing on personally identifiable information (PII), protected health information (PHI), financial data, and intellectual property.

3. GRC Integration: Incorporate AI governance into existing compliance frameworks to ensure audit readiness and alignment with regulatory expectations.

4. Monitoring and Detection: Establish technical controls to detect unauthorized AI tool usage and potential data exfiltration, integrating these measures with existing security monitoring systems.

5. Employee Training and Accountability: Create tailored training programs that inform employees about AI-related risks and establish clear accountability structures for violations.
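To make the inventory, data-handling, and monitoring pillars more concrete, here is a minimal Python sketch of how an organization might encode a tool inventory and a data-handling policy as a deny-by-default check. Everything in it is a hypothetical illustration, not part of Armor's framework: the tool names, the data category labels, the risk tiers, and the `check_usage` function are assumptions chosen for the example.

```python
from dataclasses import dataclass, field
from enum import Enum


class Risk(Enum):
    """Hypothetical risk tiers assigned during inventory classification."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical data category labels (public data, PII, PHI, financial data, IP).
DATA_CATEGORIES = {"public", "pii", "phi", "financial", "ip"}


@dataclass(frozen=True)
class AITool:
    name: str
    approved: bool
    risk: Risk  # recorded for classification and reporting; not used in the check below
    allowed_data: frozenset = field(default_factory=frozenset)


# Hypothetical inventory: tool names and permissions are illustrative only.
INVENTORY = {
    "internal-copilot": AITool("internal-copilot", True, Risk.MEDIUM, frozenset({"public", "ip"})),
    "public-chatbot": AITool("public-chatbot", True, Risk.HIGH, frozenset({"public"})),
    "unapproved-plugin": AITool("unapproved-plugin", False, Risk.HIGH),
}


def check_usage(tool_name: str, data_category: str) -> tuple[bool, str]:
    """Return (permitted, reason) for sending data_category to tool_name."""
    if data_category not in DATA_CATEGORIES:
        return False, f"unknown data category: {data_category}"
    tool = INVENTORY.get(tool_name)
    if tool is None:
        # Not in the inventory: treat as shadow AI and deny by default.
        return False, f"shadow AI: {tool_name} is not inventoried"
    if not tool.approved:
        return False, f"{tool_name} is not approved"
    if data_category not in tool.allowed_data:
        return False, f"{data_category} not permitted for {tool_name}"
    return True, "permitted"


if __name__ == "__main__":
    for tool, data in [("internal-copilot", "ip"), ("public-chatbot", "phi"), ("unknown-tool", "public")]:
        print(tool, data, check_usage(tool, data))
```

The deny-by-default design means any tool missing from the inventory is flagged rather than silently allowed, which is one way a monitoring control could surface shadow AI; in practice such a check would sit behind existing security tooling such as a proxy or data loss prevention system rather than run on its own.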

Armor continues to emphasize the importance of proactive governance in AI adoption, urging organizations to take immediate action to mitigate risks associated with AI use. By doing so, enterprises can safeguard sensitive data and comply with evolving regulatory requirements, ultimately fostering a more secure operational environment.

For more information, visit armor.com.
