As the landscape of internet usage evolves with the rise of AI technologies, safety concerns regarding user interactions with online content have taken center stage. Companies like OpenAI are stepping up to address these issues as users increasingly shift from traditional web browsers to AI-driven agents for tasks such as browsing and email management. In a recent blog post, OpenAI described how its AI agents decide whether to open the links they encounter on the web, a question made pressing by threats such as phishing and malicious URLs.

OpenAI’s approach aims to balance usability and safety. A straightforward solution would be to restrict agents to a curated list of trusted websites, but the company argues that this would be overly restrictive. Instead, OpenAI has built an independent web index that records URLs known to exist publicly on the internet, compiled without relying on any user-specific data. Agents can open URLs found in this index automatically, while users are notified when the agent encounters a link that cannot be verified this way.

The implications of this strategy are significant. If a URL appears in the independent index, the agent can open it without raising any warning. If it does not, the user sees a warning and must grant permission before the agent proceeds. According to OpenAI, this shifts the security question from whether a website can be trusted in general to a narrower one: “Has this specific address appeared publicly on the open web in a way that doesn’t depend on user data?”
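The gating rule described above can be sketched in a few lines. This is a minimal illustration, not OpenAI’s implementation: `PublicUrlIndex`, `check_link`, and `open_link` are hypothetical names, the index is a small in-memory set, and the lookup is an exact string match on the full address. A real system would likely need URL canonicalization and a far larger crawled index.

```python
from collections.abc import Callable
from dataclasses import dataclass, field
from enum import Enum


class LinkDecision(Enum):
    OPEN = "open"          # address was seen publicly: open without prompting
    ASK_USER = "ask_user"  # address not in the index: warn and ask first


@dataclass
class PublicUrlIndex:
    """Hypothetical stand-in for an index of URLs observed on the public web.

    Assumption: it is filled only by a crawler, never from any user's data.
    """
    urls: set[str] = field(default_factory=set)

    def add_crawled(self, url: str) -> None:
        self.urls.add(url)

    def contains(self, url: str) -> bool:
        # Exact match on the full address, including path and query string.
        return url in self.urls


def check_link(index: PublicUrlIndex, url: str) -> LinkDecision:
    """Classify a link the agent wants to load."""
    if index.contains(url):
        return LinkDecision.OPEN
    return LinkDecision.ASK_USER


def open_link(index: PublicUrlIndex, url: str,
              ask_user: Callable[[str], bool]) -> bool:
    """Return True if the agent may proceed to load `url`."""
    if check_link(index, url) is LinkDecision.OPEN:
        return True
    # Unverified link: the user decides whether the agent proceeds.
    return ask_user(f"The link {url} could not be verified as public. Open it anyway?")


if __name__ == "__main__":
    index = PublicUrlIndex()
    index.add_crawled("https://example.com/docs")
    print(check_link(index, "https://example.com/docs"))                   # LinkDecision.OPEN
    print(check_link(index, "https://example.com/docs?email=alice@x.org"))  # LinkDecision.ASK_USER
```

The second lookup shows why matching the full address matters in this sketch: the same page with user data appended to its query string was never seen publicly, so the agent has to ask before loading it.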

However, the system is not foolproof, and OpenAI’s post itself acknowledges that the independent web index is only one layer of defense. A URL can appear publicly and still lead to deceptive content, and techniques such as social engineering may not be readily recognized by AI agents. This leaves room for prompt injection attacks, in which instructions hidden in a malicious page manipulate the AI model into exposing sensitive information or otherwise compromising the user’s security.

Despite these challenges, OpenAI remains committed to refining its AI systems to bolster user safety. This commitment is particularly critical as more users adopt AI technologies for various tasks, ranging from simple information retrieval to complex decision-making processes. The evolving nature of the internet necessitates a proactive approach to cybersecurity, especially as AI agents become commonplace in everyday online interactions.

In a broader context, these developments reflect the industry’s growing recognition of the need for enhanced security measures in AI applications. As AI technologies continue to mature, there is an imperative for companies to prioritize user safety, ensuring that as they innovate, they also mitigate the risks that come with new capabilities. For OpenAI, the challenge lies not only in the technical implementation of security features but also in the user education necessary to navigate an increasingly complex web landscape.

As the dialogue around AI safety evolves, companies like OpenAI are likely to face intensified scrutiny from both users and regulatory bodies. The balance between fostering innovation and ensuring security will be a defining feature of the AI landscape in the coming years. As OpenAI and its counterparts continue to develop their technologies, the question remains: how effectively will they address the inherent risks of an interconnected digital world?

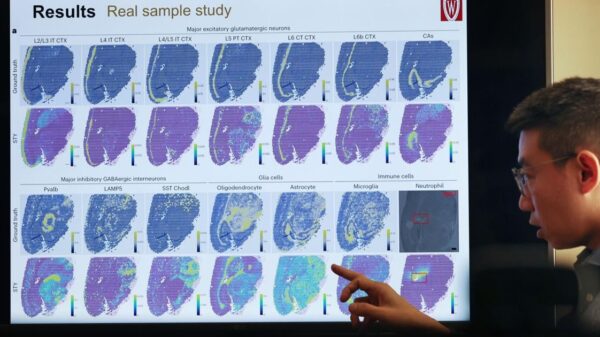
