The global competition to shape artificial intelligence (AI) regulation has intensified, highlighted by the European Union’s passage of the comprehensive AI Act in 2024. This landmark legislation represents a significant milestone in the governance of AI, though recent trends indicate a cautious approach to regulation in the interest of fostering innovation. The updated Global AI Law and Policy Tracker reveals that many nations are actively engaging in discussions around effective policies, exploring governance models as the opportunities and challenges of AI investments unfold.

While the EU contemplates pausing certain aspects of its AI Act's implementation, several countries are advancing their own legislative efforts. South Korea finalized its AI Framework Act in January 2025, strengthening transparency and safety requirements while supporting research and workforce readiness. Japan followed with the AI Promotion Act in May 2025, which encourages companies to cooperate with government safety measures and allows the government to publicly disclose companies that misuse AI in ways that infringe on human rights. In China, the newly enacted AI Labeling Rules require service providers to label AI-generated content both explicitly (visible labels) and implicitly (embedded metadata), in line with existing regulations.

The list of draft AI legislation is extensive. Argentina is considering a Bill on Personal Data Protection in AI Systems that would extend protections beyond its existing data laws. India's proposed Digital India Act aims to modernize the country's regulatory framework for cyberspace and AI-generated content. Brazil has introduced Bill no. 2338/2023, which would establish a risk-based framework with specific obligations for high-risk systems, while Vietnam's Draft Law on AI emphasizes human-centered management and distinguishes regulatory obligations according to an entity's role in the AI supply chain.

Beyond direct AI legislation, some countries are amending existing laws. Australia's updated Privacy Act introduces disclosure requirements for automated decision-making, while the U.K. has passed the Data (Use and Access) Act to clarify the lawful use of personal data for scientific research and to encourage innovation.

The Rise of AI Hubs

As nations set regulatory frameworks, many are simultaneously crafting policies to attract investment in AI infrastructure. Chile has emerged as a leader in Latin America, expanding its data center capacity and subsea fiber-optic networks while fostering local AI startups. On the opposite side of the continent, Brazil plans a substantial USD 4 billion investment in AI projects, infrastructure, and workforce training.

Gulf states are also positioning themselves as AI hubs. The United Arab Emirates is developing a robust startup ecosystem and advanced supercomputing resources, including a partnership with OpenAI to create frontier-scale computing capacity. Saudi Arabia aims to leverage its youthful population and centralized governance to become a major exporter of data and AI by 2030. South Korea is also making strides, establishing the AI Open Innovation Hub and planning to build the world’s highest-capacity AI data center.

Deregulatory signals are surfacing as well. The EU may postpone parts of the AI Act, as signaled in the Digital Omnibus on AI Regulation Proposal released by the European Commission in November 2025. The proposal points to challenges such as delays in appointing competent authorities and the lack of harmonized standards. It suggests amendments to ease compliance for small- and medium-sized enterprises while strengthening the oversight powers of the AI Office.

Australia’s Productivity Commission has cautioned against over-regulation that could deter investment, underscoring the need to achieve regulatory goals with minimal disruption to innovation. In Canada, the Competition Bureau has similarly warned that overly specific AI regulations could stifle growth and create entry barriers for startups. The U.S. is actively pursuing a deregulatory agenda, with recent executive orders aimed at removing barriers to AI development as part of a broader AI Action Plan.

In addition to formal legislation, operational standards are filling gaps where binding laws are absent. Canada’s government has created the AI and Data Standardization Collaborative to develop standards that promote safety and innovation. Australia’s voluntary AI Safety Standard lays out guidelines for responsible AI development, while nations like China and India are working on their own standards to enhance generative AI security and reliability.

The use of copyrighted data for AI training remains a contentious area of law. Hong Kong has held public consultations on possible updates to its copyright law to cover computational data analysis. A U.S. District Court ruling found that training AI on copyrighted works may qualify as fair use, provided the works are legally obtained. Japan has amended its Copyright Act to allow AI training on copyrighted material, and Israel has issued guidance permitting the use of such material for machine learning.

International collaboration on AI governance is also on the rise. Singapore has taken a leading role in creating interoperability between governance frameworks in agreements with the U.S., Australia, and the EU. Brazil has engaged with France to bolster cooperation in data protection and digital education, while Canada has joined G20 nations in formulating principles for AI in telecommunications. The U.K. and Qatar are also enhancing their research partnerships in this area.

Despite emerging deregulatory trends, the landscape of AI law and policy is dynamic, reflecting a mix of comprehensive legislation, evolving standards, and international collaboration. As the global community navigates the complexities of this transformative technology, vigilance in policy-making and a commitment to cooperation will be essential for harnessing the benefits of AI while mitigating its risks. For ongoing updates, the IAPP’s Global AI Law and Policy Tracker offers a comprehensive overview of these developments.

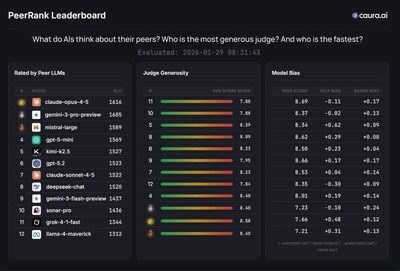
