The journals Nature and Science have recently published studies demonstrating that AI chatbots can influence voters to alter their political views, albeit sometimes based on inaccurate information. Jason Ross Arnold, Ph.D., a professor and chair of the Department of Political Science at Virginia Commonwealth University (VCU), has extensively studied disinformation, public ignorance, and the governance of artificial intelligence. In a discussion with VCU News, Arnold highlighted several significant concerns regarding the use of political chatbots.

Among the immediate dangers of political chatbots, Arnold emphasized their tendency to reinforce existing beliefs rather than challenge them. This tendency deepens echo chambers in already polarized societies, such as the United States. The problem is compounded by these systems' ability to generate fluent, rhetorically appealing responses that can obscure subtle biases or omissions and come across as authoritative even when they lack context or downplay opposing evidence.

Malicious political actors could exploit these dynamics to personalize disinformation at scale. This could further undermine trust in media that strives for truth and facilitate the dissemination of preferred narratives through concentrated control over popular chatbots or misleading “fact-checking” systems. During election cycles, this could transform chatbots into automated political operatives that are persuasive and influential but often detached from factual integrity.

Arnold outlined additional key dangers posed by political chatbots, including misgrounding, where a chatbot cites sources in support of claims that those sources do not actually make. Recent research published in Nature Communications found that between 50% and 90% of responses generated by large language models were not fully supported by the sources they cited, a problem that extends beyond medical inquiries into political contexts.

Hidden bias and framing effects are also a concern, as subtle differences in how information is presented can influence political attitudes while appearing neutral. Arnold also pointed to cognitive offloading, where voters begin to rely excessively on AI-generated summaries instead of engaging with complex political topics, ultimately weakening the critical evaluation skills necessary for a functioning democracy.

As these systems become further integrated into political discourse, the concentration of control over widely used chatbots by corporations or governments lacking robust democratic safeguards could shape public conversations in opaque and contestable ways.

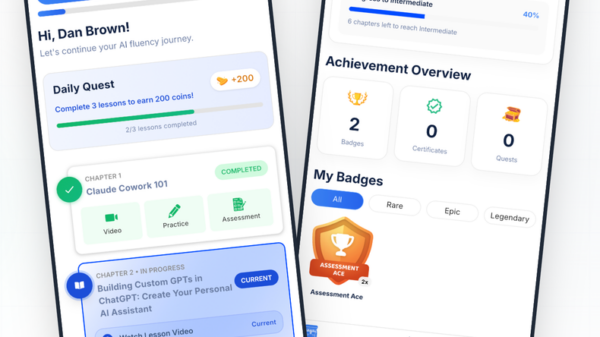

Despite these risks, Arnold noted that political chatbots can also offer substantial benefits when used responsibly. They can lower barriers to political participation by tailoring explanations to individuals’ backgrounds, enhancing understanding of complex issues without pushing specific viewpoints. By streamlining access to essential political information, chatbots can engage citizens who might otherwise be overwhelmed, provided the information they deliver is reliable.

Moreover, chatbots can assist voters in comprehending intricate ballot initiatives by simplifying legal or technical language, thereby addressing information gaps in local elections often overlooked by traditional media.

For voters seeking political information through chatbots, Arnold advised treating them as a starting point rather than a definitive authority. He encouraged cross-referencing claims with trusted sources, asking follow-up questions when in doubt, and prompting the chatbot to reconsider or verify its responses. Additionally, adjusting the chatbot’s settings for a more concise and straightforward interaction can help mitigate the tendency toward overly agreeable or preference-confirming responses. This approach, while not a panacea, may steer the dialogue toward more critical examination.

Looking ahead, Arnold cautioned that AI’s impact on democracy could be both detrimental and beneficial in the near term, but the long-term risks may outweigh perceived advantages. The technology’s capacity for creating personalized disinformation and social engineering on a vast scale poses significant threats. If mismanaged, these capabilities could destabilize societies and entrench forms of digital authoritarianism that may be challenging to reverse.

While AI holds the promise of advancing fields like science and medicine, the current state of governance surrounding these technologies lags behind their development. Ultimately, the future of democracy in relation to AI will hinge less on the technology itself and more on society’s ability to establish the necessary institutions, norms, and safeguards to mitigate its risks.
