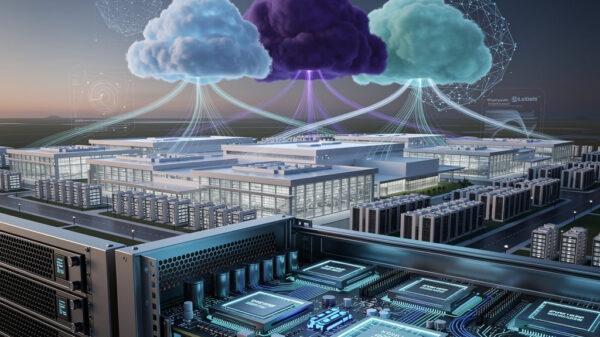

Generative artificial intelligence (AI) has rapidly gained traction in the healthcare sector, driven by expectations that it can take on tasks ranging from clinical documentation to data analysis while easing longstanding workforce challenges. As healthcare organizations adopt more internet-connected tools, they face growing difficulty managing cybersecurity and remain vulnerable to frequent cyberattacks. AI products themselves may become targets for cybercriminals, who can also use their own AI tools to make attacks more effective. This dual threat is prompting healthcare cybersecurity teams to evolve, according to Taylor Lehmann, director of the office of the chief information security officer at Google Cloud.

In a recent interview with Healthcare Dive, Lehmann elaborated on how generative AI tools are likely to impact healthcare cybersecurity and what organizations should consider to prepare for an AI-enhanced security landscape. He highlighted that as AI systems become more integrated into healthcare, identifying and mitigating potential threats tied to these technologies will be crucial.

“This is where I am concerned about the future,” Lehmann noted. “Detecting when AI is wrong and whether that inaccuracy is due to manipulation by a malicious actor is going to be very hard.” He emphasized that organizations need robust systems for tracing the origins and training data of AI models. Google, for instance, uses model cards and cryptographic binary signing to maintain transparency around the source and training of AI systems. “That visibility is going to be critical,” he added.
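To make the provenance idea concrete, here is a minimal Python sketch of one narrow piece of it: checking that a model artifact still matches a digest recorded alongside its model card. This is not Google's process, and a plain SHA-256 digest is a simpler stand-in for true cryptographic signing, which would also involve a private key and signature verification. The card fields (`name`, `training_data`, `sha256`) and the function names are hypothetical.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the artifact bytes as a hex string."""
    return hashlib.sha256(data).hexdigest()


def artifact_matches_card(artifact: bytes, model_card: dict) -> bool:
    """Check the artifact against the digest recorded in a (hypothetical) model card."""
    expected = model_card.get("sha256", "")
    # compare_digest avoids leaking information through comparison timing
    return hmac.compare_digest(sha256_hex(artifact), expected)


# Illustrative data only: stand-in bytes for model weights and a made-up card.
weights = b"example model weights"
card = {
    "name": "demo-model",
    "training_data": "hypothetical-dataset-v1",
    "sha256": sha256_hex(weights),
}

print(artifact_matches_card(weights, card))                # unmodified artifact
print(artifact_matches_card(weights + b"tampered", card))  # altered artifact
```

A check like this only shows that the artifact is unchanged since the digest was recorded. It says nothing about whether the training data itself was trustworthy, which is the harder problem Lehmann describes.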

Lehmann’s vision for the future entails a shift in the skills and roles needed within healthcare organizations to effectively manage these emerging risks. He believes that new security disciplines are on the horizon, and Google is actively exploring these roles to create guidance for organizations. “There are ways and methods needed to secure AI systems out of the gate,” he explained, advocating for strong identity controls that can distinguish between users and the models themselves. He pointed out that achieving radical transparency in AI operations is essential for accountability.

One innovative approach is the establishment of AI “red teams,” tasked with attempting to breach AI systems and expose their vulnerabilities. “Organizations deploy a team of people that do nothing but try to make these things break,” Lehmann said. These teams evaluate how well AI models are performing, testing safeguards and ensuring compliance with safety standards. He stressed that as AI governance becomes increasingly important, healthcare organizations will need professionals who possess both technical expertise and an understanding of regulatory contexts.

The emergence of AI-driven cyber threats poses a new set of risks for healthcare systems. Lehmann underscored the importance of managing identities not only for human users but also for devices and AI agents. “Identity is going to be the piece of digital evidence that ties everything together,” he emphasized. “We have to wrangle how organizations provision and manage identities.”

Speed is another critical factor in this evolving landscape. “AI systems move fast. They move faster than humans,” he warned. Cybercriminals utilizing weaponized AI can act at unprecedented speeds, necessitating a rapid response from healthcare organizations. Lehmann advised that institutions must assess their architectures and systems to ensure they can quickly deploy new controls and respond to threats. “Look at how quickly you can detect an event and take action on it,” he urged, advocating for a shift from hours or minutes to seconds in response times.

As the healthcare sector integrates more AI tools, the challenges of cybersecurity will only intensify. Organizations must navigate this complex landscape by enhancing transparency, establishing robust governance, and preparing their workforces for the demands of AI security. As Lehmann aptly pointed out, “If you can’t operate with that same speed to detect and eliminate [threats], you’re going to be in trouble.” The future of healthcare cybersecurity will depend on a proactive approach to these emerging technologies, with organizations needing to adapt swiftly to the evolving threat landscape.
