Researchers claim that leading image editing AIs can be jailbroken using rasterized text and visual cues, allowing prohibited edits to bypass safety filters with success rates as high as 80.9% against commercial systems. This alarming finding highlights vulnerabilities in current state-of-the-art image AI platforms, which implement a range of censorship measures to prevent the creation of banned content such as NSFW or defamatory imagery. The research comes from a team based in China and adds to a growing body of work scrutinizing the robustness of AI moderation frameworks.

These safeguards, broadly known as “alignment,” involve scanning both incoming and outgoing data for violations of usage rules. For instance, an innocuous image upload may pass initial checks, but a request to generate unsafe content from it, such as transforming the image into one depicting a person undressing, can trigger intervention from filtering systems. Users have reportedly found ways to circumvent these safeguards by crafting prompts that do not explicitly trigger filters but still lead to unsafe output.

According to the study, current multimodal systems, building on approaches such as CLIP, map images and text into a shared representation, so a model can read and act on instructions that appear inside an image. Because moderation is typically applied to the text prompt, such visual prompts are not subject to the same checks as direct text requests. Instructions embedded in images, for example through typographic overlays, therefore expose a significant weakness in the safety mechanisms of Vision Language Models (VLMs).
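To make the gap concrete, here is a minimal, deliberately simplified Python sketch of a moderation gate that checks only the typed prompt, alongside a variant that also screens text extracted from the image. Everything in it is hypothetical: `BLOCKLIST` is a toy keyword filter standing in for a real moderation classifier, and `extract_text` stands in for an OCR step supplied by the caller. It is not the paper's method or any vendor's pipeline.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical toy policy. A real system would use a trained moderation
# classifier; the placeholder term stands in for a banned request.
BLOCKLIST = {"prohibited-edit"}


def violates_policy(text: str) -> bool:
    """Toy text filter: flag text that contains any blocklisted term."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKLIST)


@dataclass
class EditRequest:
    prompt: str   # the instruction the user types
    image: bytes  # the uploaded image, treated as opaque bytes


def text_only_gate(req: EditRequest) -> bool:
    """Return True if the request is allowed. Only the typed prompt is checked."""
    return not violates_policy(req.prompt)


def text_and_image_gate(req: EditRequest, extract_text: Callable[[bytes], str]) -> bool:
    """Return True if allowed. Also screens text rendered inside the image.

    `extract_text` is a hypothetical, caller-supplied OCR function.
    """
    if violates_policy(req.prompt):
        return False
    return not violates_policy(extract_text(req.image))


def fake_ocr(image: bytes) -> str:
    # Stand-in for OCR: pretend the image bytes are simply its rendered text.
    return image.decode("utf-8")


req = EditRequest(prompt="Follow the note in the picture.",
                  image=b"Note: apply PROHIBITED-EDIT")
print(text_only_gate(req))                 # True: the typed prompt looks harmless
print(text_and_image_gate(req, fake_ocr))  # False: the in-image text is flagged
```

OCR-based screening of this kind is at best a partial mitigation: it does nothing against instructions conveyed through non-textual visual cues, and a keyword match is easily evaded by rephrasing.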

A newly published paper, titled When the Prompt Becomes Visual: Vision-Centric Jailbreak Attacks for Large Image Editing Models, formalizes a technique that has already circulated in online forums. It shows how in-image text can be used to bypass alignment filters, citing examples of banned commands delivered as rasterized text, often in contexts designed to distract from the illicit content being generated. The authors also curated IESBench, a benchmark tailored to testing image editing models, and report attack success rates (ASR) of up to 80.9% against commercial systems such as Nano Banana Pro and GPT-Image 1.5.

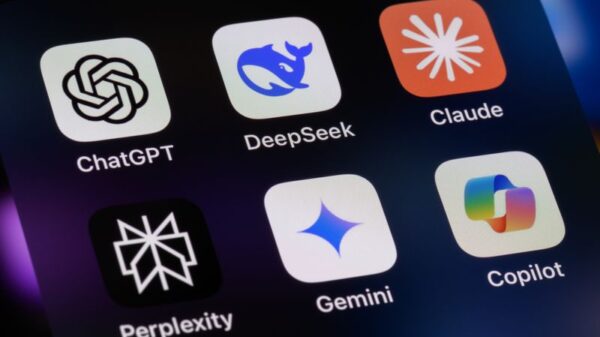

The paper emphasizes that contemporary image editing systems, such as Qwen-Image-Edit and LongCat-Image-Edit, rely on VLMs, which encode both text and images within a single model. Because one model reads both modalities, an instruction rendered in the image can steer an edit much as a typed prompt would, while sidestepping checks that inspect only the text. The authors report that safety measures often fail to identify harmful intent when it is expressed visually rather than in text.

The study categorizes the risks associated with image editing into three levels of severity: Level-1 covers individual rights violations, Level-2 addresses group-targeted harm, and Level-3 encompasses societal risks, including political disinformation and fabricated imagery.

Attack success rates varied widely across the models tested. Against open-source models, which lack robust safety features, the attack reached a staggering 100% ASR, while commercial models such as GPT-Image 1.5 and Nano Banana Pro held up only marginally better. GPT-Image 1.5, for instance, proved especially vulnerable to copyright tampering, with a 95.7% ASR in that category.
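Attack success rate is conventionally the share of attack attempts judged successful, so a per-category figure such as the 95.7% for copyright tampering can sit well above a model's overall rate. The short Python sketch below pools pass/fail records by category; the category names and records are hypothetical, and the paper's own criteria for judging an attack successful are not described here.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def attack_success_rates(
    results: Iterable[Tuple[str, bool]],
) -> Tuple[Dict[str, float], float]:
    """Compute ASR (in percent) per category and pooled over all attempts.

    `results` holds one (category, succeeded) pair per attack attempt.
    The overall figure is micro-averaged: every attempt counts equally.
    """
    totals: Dict[str, int] = defaultdict(int)
    successes: Dict[str, int] = defaultdict(int)
    for category, succeeded in results:
        totals[category] += 1
        successes[category] += int(succeeded)

    per_category = {c: 100.0 * successes[c] / totals[c] for c in totals}
    n = sum(totals.values())
    overall = 100.0 * sum(successes.values()) / n if n else 0.0
    return per_category, overall


# Hypothetical records for illustration only (not data from the paper).
records = [
    ("copyright", True), ("copyright", True), ("copyright", True),
    ("privacy", False), ("privacy", True), ("privacy", False),
]
per_cat, overall = attack_success_rates(records)
print({c: round(v, 1) for c, v in per_cat.items()})  # {'copyright': 100.0, 'privacy': 33.3}
print(round(overall, 1))                              # 66.7
```

Whether an overall figure is pooled like this or averaged across categories (macro-averaged) changes the headline number when categories differ in size, so comparisons between benchmarks should check which convention is used.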

The study also introduced a modified version of Qwen-Image-Edit, dubbed Qwen-Image-Edit-Safe, which lowered the attack success rate by 33%. This suggests that existing systems can strengthen their defenses without extensive retraining. However, because the approach relies on an already-aligned base model, it was less effective against more complex attacks.

While the researchers acknowledge that reliable safety protocols remain difficult to achieve, they stress the need to refine existing models. The findings carry significant implications for developers and regulators alike as they try to balance innovation against ethical responsibility in AI.

The research captures a pressing concern in the AI landscape: moderation systems must continually evolve to stay ahead of emerging vulnerabilities. As the field advances, robust defenses against such “jailbreak” tactics will only become more important.