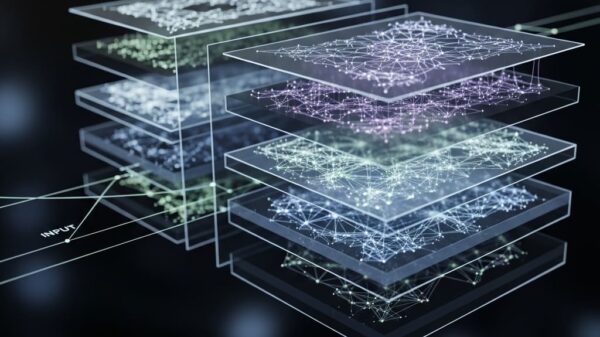

Researchers at the University of California, Berkeley, have introduced a new approach to studying the inner workings of large language models (LLMs). The team, consisting of Grace Luo, Jiahai Feng, Trevor Darrell, Alec Radford, and Jacob Steinhardt, has developed a generative model called the Generative Latent Prior (GLP), which is designed to analyze and manipulate neural network activations. By training diffusion models on one billion residual stream activations, the research pursues LLM interpretability without relying on restrictive structural assumptions.

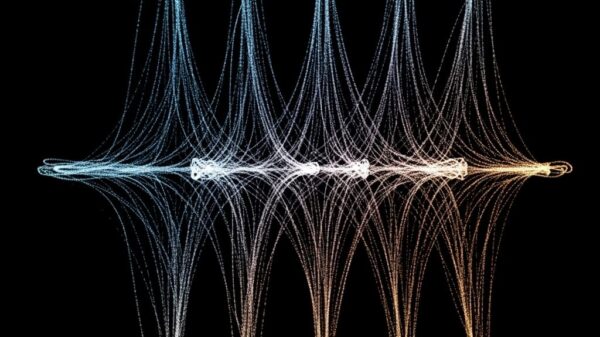

The work arrives as demand grows for greater transparency in artificial intelligence systems. The GLP is trained to capture the distribution of internal states within LLMs directly, something existing methods such as PCA and sparse autoencoders do only under structural assumptions such as linearity or sparsity. The findings indicate that as diffusion loss decreases, steering interventions produce more fluent text and individual neurons isolate concepts more cleanly.

The GLP uses a deep diffusion model architecture, and the team assessed its quality with the Fréchet distance and with Principal Component Analysis (PCA). Results showed that the activations generated by the GLP closely resembled real activations. When used for steering, the model improves the fluency of the generated text while preserving its semantic content, addressing a significant challenge in existing language model steering techniques.
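
To make the Fréchet distance concrete, the sketch below fits a Gaussian to two sets of activation vectors and compares them, in the same spirit as the FID score used for images. It is a minimal illustration, not the authors' evaluation code: the function name `frechet_distance` and the synthetic data are assumptions, and the article does not say which variant of the metric the team used.

```python
import numpy as np

def frechet_distance(real: np.ndarray, generated: np.ndarray) -> float:
    """Frechet distance between Gaussians fitted to two sample sets.

    Both inputs have shape (num_samples, dim) with dim >= 2.
    """
    mu_r, mu_g = real.mean(axis=0), generated.mean(axis=0)
    cov_r = np.cov(real, rowvar=False)
    cov_g = np.cov(generated, rowvar=False)
    # Tr((cov_r cov_g)^(1/2)) equals the sum of the square roots of the
    # eigenvalues of cov_r @ cov_g, which are real and non-negative when
    # both covariances are positive semi-definite.
    eigvals = np.linalg.eigvals(cov_r @ cov_g)
    trace_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    mean_term = float(np.sum((mu_r - mu_g) ** 2))
    cov_term = float(np.trace(cov_r) + np.trace(cov_g) - 2.0 * trace_sqrt)
    return mean_term + cov_term

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    real = rng.normal(size=(2000, 8))           # stand-in for real activations
    close = rng.normal(size=(2000, 8))          # same distribution
    shifted = rng.normal(size=(2000, 8)) + 1.0  # mean-shifted distribution
    print("same distribution:", frechet_distance(real, close))
    print("shifted distribution:", frechet_distance(real, shifted))
```

A lower value means the generated activations are statistically closer to the real ones, at least up to their first two moments.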

As the researchers applied the GLP to interpretability tasks ranging from sentiment control to persona elicitation, they observed substantial improvements in fluency across benchmarks. Steering interventions that used the GLP's learned prior performed better than direct interventions, with larger gains correlating with lower diffusion loss. This trend suggests that the model projects manipulated activations back onto the natural activation manifold, avoiding the quality degradation that direct intervention methods often cause.
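
The idea of projecting a steered activation back onto the activation manifold can be illustrated with a toy example. The sketch below rests on two assumptions the article does not spell out: that projection is done by adding noise to the steered activation and then denoising it, and that a closed-form Gaussian denoiser can stand in for the learned diffusion model. All names (`gaussian_denoise`, `steer_then_project`, `make_toy_problem`) are hypothetical.

```python
import numpy as np

def gaussian_denoise(x_noisy, mean, cov, sigma):
    """Posterior mean E[x0 | x_noisy] for x0 ~ N(mean, cov) and
    x_noisy = x0 + sigma * noise. Stand-in for a learned denoiser."""
    dim = mean.shape[0]
    gain = cov @ np.linalg.inv(cov + sigma**2 * np.eye(dim))
    return mean + (x_noisy - mean) @ gain.T

def steer_then_project(activation, direction, strength, mean, cov, sigma, rng):
    """Add a steering vector, then noise and denoise under the prior."""
    steered = activation + strength * direction
    noisy = steered + sigma * rng.normal(size=steered.shape)
    return steered, gaussian_denoise(noisy, mean, cov, sigma)

def off_manifold_norm(x, basis):
    """Norm of the component of x outside the subspace spanned by basis."""
    return float(np.linalg.norm(x - (x @ basis) @ basis.T))

def make_toy_problem(seed=0, dim=16, rank=2):
    """Toy 'activations' concentrated near a rank-2 subspace of R^16."""
    rng = np.random.default_rng(seed)
    basis, _ = np.linalg.qr(rng.normal(size=(dim, rank)))
    cov = basis @ basis.T + 1e-4 * np.eye(dim)
    mean = np.zeros(dim)
    activation = basis @ rng.normal(size=rank)
    direction = rng.normal(size=dim)  # steering vector, mostly off-manifold
    direction /= np.linalg.norm(direction)
    return rng, basis, mean, cov, activation, direction

if __name__ == "__main__":
    rng, basis, mean, cov, act, direction = make_toy_problem()
    steered, projected = steer_then_project(act, direction, 4.0, mean, cov, 0.5, rng)
    print("off-manifold norm, direct steering:", off_manifold_norm(steered, basis))
    print("off-manifold norm, after projection:", off_manifold_norm(projected, basis))
```

In this toy setting the denoiser shrinks the off-manifold component of the steered vector while keeping most of the on-manifold shift. A learned diffusion prior would play the same role for a far more complex activation distribution.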

The study also examines how performance scales with compute. Across models ranging from 0.5 billion to 3.3 billion parameters, the researchers found that diffusion loss follows a predictable power law in compute. Each 60-fold increase in compute halved the distance to the theoretical loss floor, and these reductions translated directly into better steering and probing performance. This establishes diffusion loss as a reliable predictor of downstream utility and indicates that further scaling may yield additional gains.
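
The "halved per 60-fold increase" figure pins down the power law exponent. Assuming the gap to the loss floor takes the form `amplitude * compute ** (-alpha)` (the functional form and the helper names below are assumptions, not taken from the paper), the condition `60 ** (-alpha) == 1/2` gives `alpha = ln 2 / ln 60`, roughly 0.17. A short sketch of the arithmetic:

```python
import math

def exponent_from_halving_factor(factor: float) -> float:
    """Exponent alpha such that multiplying compute by `factor` halves the gap."""
    return math.log(2.0) / math.log(factor)

def excess_loss(compute: float, alpha: float, amplitude: float = 1.0) -> float:
    """Gap to the loss floor under the assumed form amplitude * compute ** (-alpha)."""
    return amplitude * compute ** (-alpha)

def compute_multiplier_for_gap_reduction(reduction: float, alpha: float) -> float:
    """Factor by which compute must grow to shrink the gap `reduction` times."""
    return reduction ** (1.0 / alpha)

alpha = exponent_from_halving_factor(60.0)
ratio = excess_loss(60.0, alpha) / excess_loss(1.0, alpha)
print(f"alpha = {alpha:.3f}")
print(f"gap ratio after 60x compute = {ratio:.3f}")
print(f"compute multiplier for a 4x smaller gap = "
      f"{compute_multiplier_for_gap_reduction(4.0, alpha):.0f}")
```

Under this form, two successive halvings of the gap require 60 × 60 = 3600 times the compute, which shows how shallow the exponent is.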

In assessing manifold fidelity, the team used post-processing techniques to ensure that steered activations retained their intended semantic content. The GLP's intermediate features, which the authors call "meta-neurons," outperformed both traditional sparse autoencoder features and raw language model neurons on one-dimensional probing tasks. The findings support the notion that the GLP isolates interpretable concepts into discrete units, enhancing the transparency of LLMs.
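
One-dimensional probing asks how well a single feature, on its own, predicts a concept label. The sketch below scores every feature by AUROC and reports the best one. It is an illustration only: the choice of AUROC as the score, the function names, and the synthetic data are assumptions, since the article does not describe the probing protocol in detail.

```python
import numpy as np

def auroc_1d(feature: np.ndarray, labels: np.ndarray) -> float:
    """AUROC of one continuous feature against binary labels (0/1).

    Uses the rank-sum formulation and assumes no tied feature values.
    The score is direction-agnostic: a feature that is low for positives
    counts as much as one that is high.
    """
    n = len(feature)
    ranks = np.empty(n)
    ranks[np.argsort(feature)] = np.arange(1, n + 1)
    n_pos = int(labels.sum())
    n_neg = n - n_pos
    auc = (ranks[labels == 1].sum() - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)
    return float(max(auc, 1.0 - auc))

def best_single_feature(features: np.ndarray, labels: np.ndarray):
    """Return (index, score) of the feature with the highest 1-D AUROC."""
    scores = [auroc_1d(features[:, j], labels) for j in range(features.shape[1])]
    best = int(np.argmax(scores))
    return best, scores[best]

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    labels = rng.integers(0, 2, size=500)
    features = rng.normal(size=(500, 10))
    features[:, 3] += 3.0 * labels  # plant the concept in feature 3
    print(best_single_feature(features, labels))
```

Comparing the best single-feature score across feature sets (for example meta-neurons, sparse autoencoder features, and raw neurons) gives a simple measure of how cleanly each set isolates a concept in one unit.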

The researchers noted some limitations, including the independent modeling of single-token activations and the unconditional nature of the current generative latent prior. Future work may involve exploring multi-token modeling and conditioning on clean activations, along with expanding the approach to different activation types and layers within language models. Potential parallels with image diffusion techniques could also offer insights into identifying unusual or out-of-distribution activations, further bridging advancements from diffusion models into neural network interpretability.

As the demand for transparency in AI systems continues to grow, the GLP offers a way to understand and control the behavior of large language models without the structural constraints of traditional methods. Its results on steering and probing, together with the predictable scaling of diffusion loss, make it relevant to both research and practical applications, and the limitations the authors identify point to clear directions for future work.