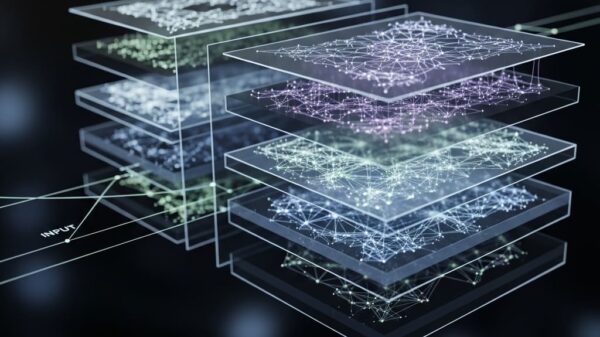

As organizations increasingly incorporate generative AI into their cybersecurity strategies, the technology is proving to be a double-edged sword. While these advanced tools enhance the efficiency of security operations centers (SOCs), they also present unique challenges and risks. This dynamic landscape has prompted industry experts to reassess the role of AI in security, moving beyond initial skepticism towards a more nuanced understanding of its capabilities and limitations.

Generative AI, which employs large language models similar to those behind ChatGPT, is being integrated into security platforms such as Microsoft Security Copilot and CrowdStrike Charlotte AI. These tools excel at summarizing logs, translating natural language queries into actionable commands, and drafting incident reports. A key advantage is their ability to streamline alert triage, a process historically burdened with low-value tasks that can overwhelm understaffed teams. For instance, CrowdStrike claims that Charlotte AI can eliminate more than 40 hours of manual triage work per week, with an accuracy rate above 98%.

Despite these advantages, experts caution that AI tools are not a panacea. They lack the strategic thinking and contextual understanding that human analysts bring to the table. Current generative AI models excel at pattern recognition but struggle with novel attack techniques. As a result, they can efficiently process vast amounts of data to flag potential threats, yet they do not reliably detect every kind of attack.

Challenges and Use Cases

The industry has identified several practical applications for generative AI in cybersecurity. These include threat detection, incident response acceleration, and enhanced phishing detection. Traditional phishing filters often fail to recognize sophisticated scams that bypass standard authentication measures. Generative AI, on the other hand, leverages behavioral analysis to identify anomalies in communication patterns that indicate fraudulent activity.
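To make the idea of behavioral analysis concrete, here is a minimal sketch of how a message might be scored against a sender's past behavior. It is not how any named product works, and it uses plain statistics rather than a language model. The `Email` fields, the `anomaly_score` function, and the penalty value of 3.0 are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class Email:
    """Hypothetical, simplified view of a message (illustrative fields only)."""
    sender: str
    reply_to_domain: str
    num_links: int


def anomaly_score(history: list[Email], candidate: Email) -> float:
    """Return a crude score; higher means less like the sender's past mail."""
    score = 0.0

    # Signal 1: how far the link count sits from the sender's usual range,
    # measured in standard deviations (a z-score).
    links = [e.num_links for e in history]
    if len(links) >= 2:
        spread = stdev(links)
        if spread > 0:
            score += abs(candidate.num_links - mean(links)) / spread
        elif candidate.num_links != links[0]:
            # History is perfectly uniform, so any change is notable.
            score += 3.0

    # Signal 2: a reply-to domain this sender has never used before.
    known_domains = {e.reply_to_domain for e in history}
    if candidate.reply_to_domain not in known_domains:
        score += 3.0  # assumed fixed penalty, chosen arbitrarily

    return score


history = [
    Email("alice@example.com", "example.com", n) for n in (1, 2, 1, 2)
]
usual = Email("alice@example.com", "example.com", 1)
odd = Email("alice@example.com", "evil.example", 10)

print(anomaly_score(history, usual))
print(anomaly_score(history, odd))
```

A message that matches the sender's habits scores low, while one with an unfamiliar reply-to domain and an unusual number of links scores much higher. A real system would weigh many more signals and would need tuning against labeled mail.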

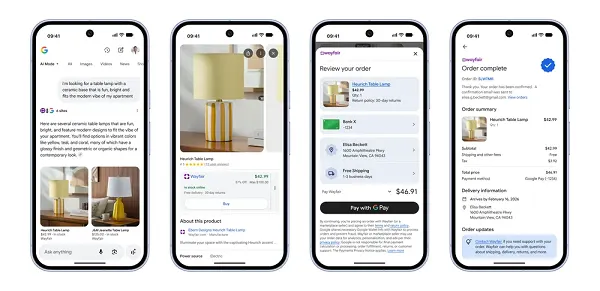

Incident response is another area where generative AI shines. It can rapidly synthesize data from multiple sources, allowing analysts to focus on actionable insights rather than getting bogged down in documentation. Microsoft’s internal research indicates that its Security Copilot can improve analyst speed by 22% and accuracy by 7% during incident workflows. These figures may seem modest, but in the fast-paced environment of cybersecurity incidents, even slight improvements can have substantial implications for minimizing damage.

Nevertheless, the integration of AI tools can be fraught with challenges. Implementation timelines can stretch into weeks or months, particularly when aligning new technologies with existing systems. Moreover, the effectiveness of AI-driven tools often depends on the quality of the data fed into them. If the underlying data is flawed, the insights generated will be unreliable.

Another pressing concern is the potential for cybercriminals to weaponize generative AI. As defenders adopt sophisticated AI technologies, attackers are also leveraging these tools to craft more convincing phishing campaigns and automate the exploitation of vulnerabilities. The arms race between AI-driven defenders and attackers is intensifying, necessitating ongoing vigilance from organizations.

As the cybersecurity landscape evolves, organizations must remain aware of both the benefits and risks associated with generative AI. While it can dramatically enhance operational efficiency, it also necessitates a reevaluation of security strategies to address vulnerabilities introduced by the technology. The future of AI in cybersecurity is likely to be characterized by a growing reliance on automated tools, but human oversight will remain critical to navigate the complexities of modern cyber threats.
