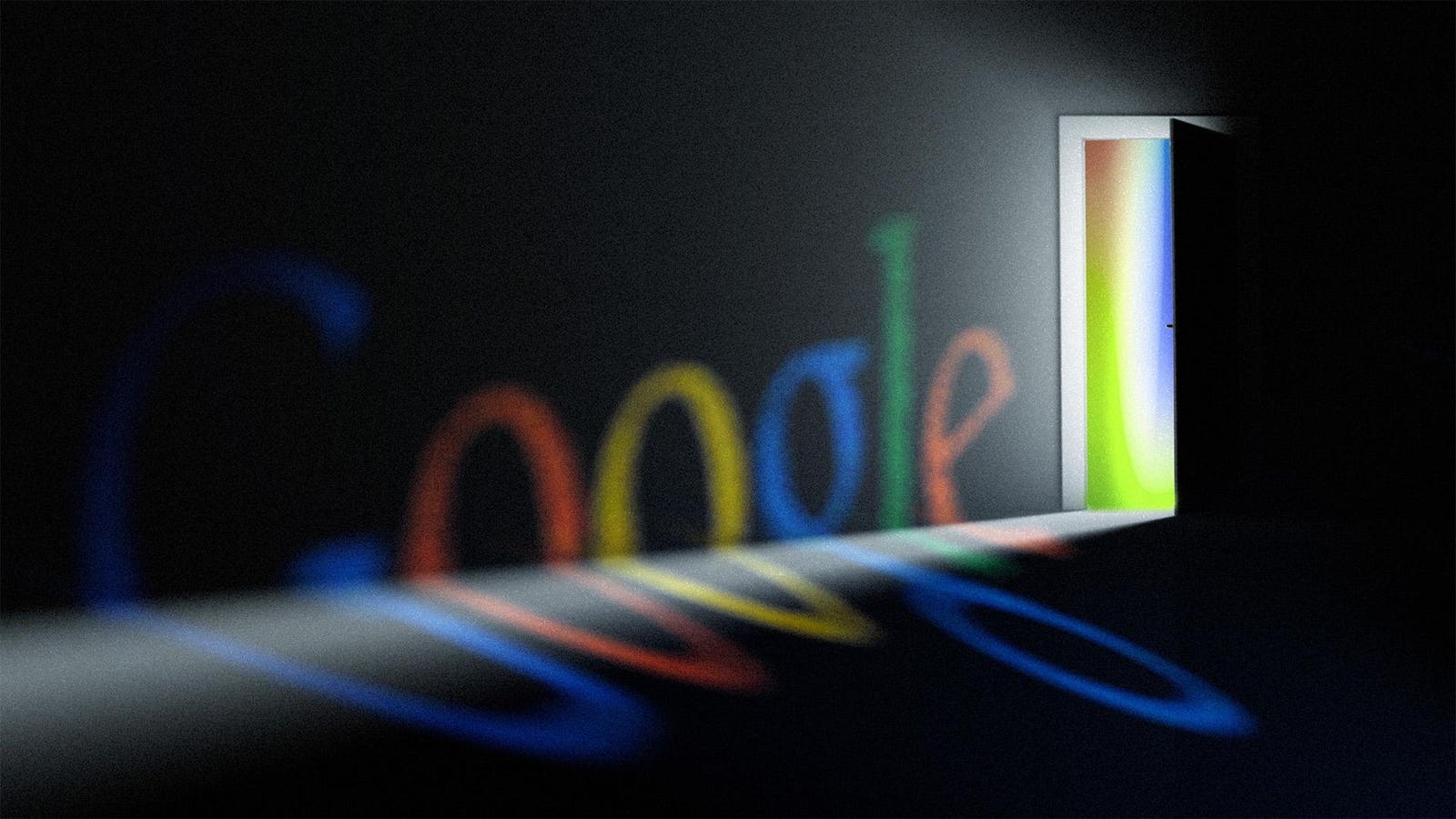

A security researcher uncovered a significant vulnerability in Google’s latest AI coding tool, Antigravity, within 24 hours of its release. The researcher, Aaron Portnoy, identified a flaw that could allow malicious actors to manipulate the AI’s rules to install malware on users’ computers. The incident highlights ongoing concerns about the security of rapidly deployed AI technologies.

By modifying Antigravity’s configuration settings, Portnoy was able to create a “backdoor” that could inject code into a user’s system, enabling activities such as data theft or ransomware attacks. The exploit worked on both Windows and Mac computers and required only that the user run the malicious code once after accepting it as “trusted.” Such tactics are common in social engineering, where hackers present themselves as trustworthy developers sharing beneficial tools.

This flaw is not an isolated case but exemplifies a troubling trend: AI products released rapidly without adequate security testing. Cybersecurity experts are increasingly engaged in a game of cat and mouse, seeking to identify vulnerabilities before they can be exploited. Gadi Evron, cofounder and CEO of Knostic, noted that AI coding agents are “very vulnerable, often based on older technologies and never patched.”

Portnoy compared the current pace of AI vulnerability discovery to an earlier era: “The speed at which we’re finding critical flaws right now feels like hacking in the late 1990s.” He emphasized that many AI systems are launched with excessive trust assumptions and minimal protective boundaries. Following his findings, Google initiated an investigation but has yet to release a patch or identify any settings that could mitigate the vulnerability.

A Google spokesperson, Ryan Trostle, expressed the company’s commitment to addressing security issues and encouraged researchers to report vulnerabilities to facilitate timely fixes. However, reports suggest that at least two other significant vulnerabilities exist in Antigravity, both permitting unauthorized access to files on users’ systems.

Portnoy’s vulnerability is particularly alarming because it persists even when restricted settings are enabled. The malicious code reactivates each time a user restarts any Antigravity project, making it challenging to eradicate without direct intervention. Uninstalling Antigravity does not resolve the issue, as users must locate and remove the backdoor on Google’s system.

The problem isn’t unique to Google; Evron pointed out that many AI tools are inherently insecure due to their design and the significant permissions they require. He also highlighted how the practice of developers copying code from online sources inadvertently propagates vulnerabilities. Recently, cybersecurity expert Marcus Hutchins raised concerns about fake recruiters targeting IT professionals on LinkedIn, sending them malware-laden code under the guise of job applications.

The “agentic” nature of these AI tools, which allows for autonomous task execution without human oversight, exacerbates the risks. This combination of autonomy and access to sensitive data can make vulnerabilities easier to exploit and far more damaging, according to Portnoy. His team is currently investigating 18 weaknesses across competing AI coding tools, having recently identified four issues in the Cline AI coding assistant that could also allow malware installation.

Google requires Antigravity users to declare that they trust the code they are loading, but Portnoy criticizes this measure as insufficient for meaningful security. Users who decline to mark code as trusted are locked out of the tool’s essential features, which pushes many IT professionals to choose convenience over caution.

Portnoy proposed that Google should implement notifications or warnings every time Antigravity is about to execute user-uploaded code, rather than relying solely on user trust. In reviewing how Antigravity’s AI processed his malicious code, Portnoy found that while the AI recognized the issue, it struggled to determine a safe course of action, illustrating a “catch-22” scenario that hackers can exploit.
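To make Portnoy’s proposal concrete, the following is a minimal Python sketch of a per-execution confirmation gate. It is an illustration only and does not reflect how Antigravity works internally; the names `run_with_confirmation` and `prompt_user` are hypothetical and do not come from Google’s tool or from Portnoy’s research. The point it shows is that approval is requested for each individual command, rather than granted once for an entire project.

```python
import subprocess
from typing import Callable, Optional, Sequence


def run_with_confirmation(
    command: Sequence[str],
    confirm: Callable[[Sequence[str]], bool],
) -> Optional[subprocess.CompletedProcess]:
    """Run `command` only if `confirm` approves this specific invocation.

    Hypothetical helper: approval is requested on every call and is never
    remembered from an earlier decision.
    """
    if not confirm(command):
        # The user declined, so nothing is executed.
        return None
    # No shell is involved; the command is passed as an argument list.
    return subprocess.run(list(command), capture_output=True, text=True, check=False)


def prompt_user(command: Sequence[str]) -> bool:
    """Hypothetical console prompt that shows the exact command before it runs."""
    answer = input(f"About to execute: {' '.join(command)!r}. Allow? [y/N] ")
    return answer.strip().lower() == "y"
```

Calling `run_with_confirmation(cmd, prompt_user)` would show the user the exact command each time and default to refusal. A prompt like this would not remove the underlying flaw, but it would give the user a chance to notice unexpected activity.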

The emergence of these vulnerabilities raises significant questions about the safety of AI development. As the industry continues to prioritize rapid deployment, the need for robust security measures becomes ever more critical. With cybersecurity threats evolving, companies must take a more proactive stance to safeguard their technologies and protect users from potential harm.

See also Anthropic CEO Dario Amodei to Testify on AI Cyberattack Linked to China on Dec. 17

Anthropic CEO Dario Amodei to Testify on AI Cyberattack Linked to China on Dec. 17 Israeli Researchers Uncover Critical AI Browser Flaw Affecting Major Tools Like Gemini and Copilot

Israeli Researchers Uncover Critical AI Browser Flaw Affecting Major Tools Like Gemini and Copilot Congress Summons Anthropic CEO Amid First AI-Orchestrated Cyberattack Linked to China

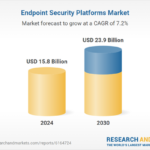

Congress Summons Anthropic CEO Amid First AI-Orchestrated Cyberattack Linked to China Endpoint Security Market to Reach $23.9B by 2030 with 7.2% CAGR Amid Rising Cyber Threats

Endpoint Security Market to Reach $23.9B by 2030 with 7.2% CAGR Amid Rising Cyber Threats Trend Micro Launches AI Security Package to Mitigate Risks in AI Application Lifecycle

Trend Micro Launches AI Security Package to Mitigate Risks in AI Application Lifecycle