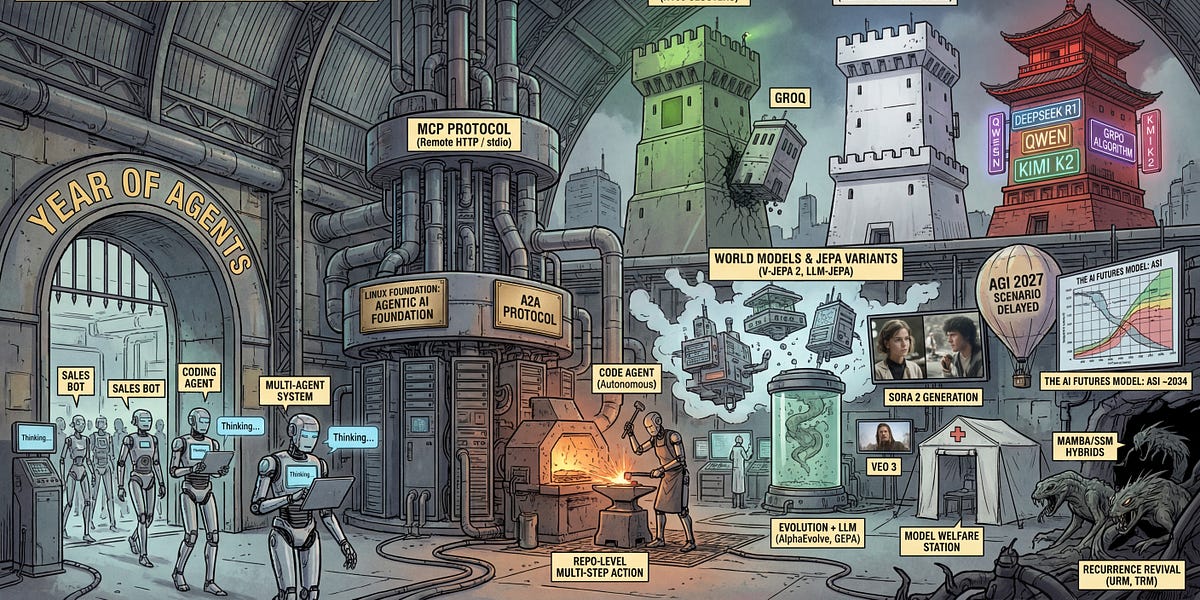

2025 has been described as the “year of agents,” a shift in the technology landscape comparable to earlier waves of machine learning and artificial intelligence. These agents, usually built on large language models (LLMs), are being deployed across many sectors and are changing work in areas such as sales, marketing, and coding. A key enabler has been the evolution of model APIs, which now integrate tools that let agents operate more autonomously.

The notion of an “agent” remains loosely defined, but it generally refers to a system that acts with some degree of autonomy. Agents can carry out tasks ranging from calling APIs to performing calculations, though their reliability remains contested. Estimates suggest that many agents fall well short of the reliability expected in traditional engineering, where three nines (99.9%) is often considered the minimum for acceptable performance.
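To make the “nines” vocabulary concrete: a success probability r corresponds to -log10(1 - r) nines, so three nines means a 99.9% success rate (a 0.1% failure rate). The following is a minimal Python sketch of that conversion; the function names are illustrative and do not come from any library.

```python
import math


def nines(reliability: float) -> float:
    """Convert a success probability in [0, 1) to a (possibly fractional) count of nines."""
    if not 0.0 <= reliability < 1.0:
        raise ValueError("reliability must be in [0, 1)")
    # A failure rate of 10**-n corresponds to n nines: 0.001 -> 3, 0.01 -> 2.
    return -math.log10(1.0 - reliability)


def reliability_from_nines(n: float) -> float:
    """Inverse mapping: n nines -> success probability 1 - 10**-n."""
    return 1.0 - 10.0 ** (-n)


for r in (0.7, 0.9, 0.999):
    print(f"{r:.1%} success = {nines(r):.2f} nines")
```

Because the scale is logarithmic, a 70% success rate works out to only about half a nine, and each additional nine requires cutting the failure rate by a factor of ten.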

Agent demonstrations at tech conferences have made these reliability problems visible, with reports indicating that demos fail at least 30% of the time. This points to a significant gap in the technology: many agents still struggle with basic tasks, sometimes getting stuck in loops or crashing outright.
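Looping failures like those mentioned above are one reason agent runtimes commonly cap the number of steps an agent may take. The following is a minimal Python sketch of a plan, act, observe loop with a hard step budget. It assumes a hypothetical `plan` function standing in for the LLM and two toy tools; none of these names come from a real agent framework.

```python
from typing import Any, Callable, Dict, List, Optional, Tuple

Action = Dict[str, Any]      # {"tool": name, "args": {...}}
Step = Tuple[Action, Any]    # (action taken, result observed)

# Hypothetical toy tools the agent may call by name.
TOOLS: Dict[str, Callable[..., Any]] = {
    "add": lambda a, b: a + b,
    "multiply": lambda a, b: a * b,
}


def run_agent(plan: Callable[[List[Step]], Optional[Action]], max_steps: int = 5) -> List[Step]:
    """Run a plan -> act -> observe loop with a hard step budget.

    `plan` stands in for the LLM: it sees the history so far and returns the
    next action, or None when it considers the task finished. `max_steps`
    bounds the number of planner calls, including the final "done" call.
    """
    history: List[Step] = []
    for _ in range(max_steps):
        action = plan(history)
        if action is None:                  # planner says it is done
            return history
        tool = TOOLS.get(action["tool"])
        if tool is None:                    # record the error instead of crashing
            history.append((action, "error: unknown tool"))
            continue
        history.append((action, tool(**action["args"])))
    # Budget exhausted: fail loudly rather than loop forever.
    raise RuntimeError(f"step budget of {max_steps} exhausted")


def two_step_plan(history: List[Step]) -> Optional[Action]:
    """Hypothetical planner that computes (2 + 3) * 4, then stops."""
    if not history:
        return {"tool": "add", "args": {"a": 2, "b": 3}}
    if len(history) == 1:
        return {"tool": "multiply", "args": {"a": history[-1][1], "b": 4}}
    return None


print(run_agent(two_step_plan)[-1][1])
```

A planner that keeps requesting the same action forever hits the budget and raises `RuntimeError` instead of hanging, which turns a silent loop into an observable failure.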

Major AI companies are refining their APIs to strengthen agent capabilities. OpenAI has introduced its fourth-generation API, the Responses API, which extends the functionality of its predecessors. Google, meanwhile, is beta-testing its Interactions API, which supports complex interactions with both models and agents. Together with a wave of agent frameworks and visual workflow builders, these efforts point to an industry-wide commitment to the technology.

Looking ahead, agents are expected to become more reliable and useful across many domains. The Model Context Protocol (MCP), which gained traction this year, has been broadly adopted by major agents and model interfaces. MCP was originally designed for local communication, but many MCP servers are now moving to remote communication over HTTP, a significant shift in how agents will interact in the future.
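Under the hood, MCP messages are JSON-RPC 2.0. The following is a minimal Python sketch that builds a `tools/call` request, assuming a server that exposes a hypothetical `get_weather` tool. With the local stdio transport, the client writes such a line to the server process's stdin. With the HTTP transport, the same message is sent as the body of a POST request to the server's endpoint. A real session must first complete the `initialize` handshake, which this sketch omits.

```python
import itertools
import json
from typing import Any, Dict

# JSON-RPC requests need an id that is unique within the session.
_request_ids = itertools.count(1)


def make_tool_call(name: str, arguments: Dict[str, Any]) -> str:
    """Serialize an MCP `tools/call` request as a single-line JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    # json.dumps emits no raw newlines by default (newlines inside strings are
    # escaped), which matters for stdio, where messages are newline-delimited.
    return json.dumps(message)


# Hypothetical tool name and arguments, for illustration only.
body = make_tool_call("get_weather", {"city": "Berlin"})
print(body)
```

The transport only changes how the bytes travel. The message shape is the same whether the server is a local subprocess or a remote HTTP service, which makes the move from local to remote servers relatively painless.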

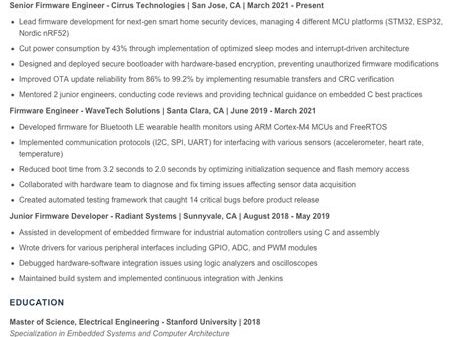

Code agents have advanced substantially. They have moved from merely offering code suggestions to carrying out multi-step tasks autonomously. Frameworks for specification-driven development, such as speckit, have also brought more maturity to development processes that involve AI tools.

One standout development was DeepSeek, particularly its R1 model, which overturned the perception that American tech companies held a wide lead over their global counterparts. DeepSeek’s Group Relative Policy Optimization (GRPO) algorithm has been widely adopted and has become a standard method for reinforcement-learning training of LLMs. Meanwhile, models such as Qwen continue to deliver impressive results without requiring extensive hardware.
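The core idea of GRPO, introduced in DeepSeek’s DeepSeekMath work, is to drop the separate value (critic) model that PPO uses. For each prompt, the policy samples a group of outputs, and each output’s advantage is its reward normalized by the group: A_i = (r_i - mean(r)) / std(r). The following is a minimal Python sketch with one scalar reward per output, assuming a simple 0/1 correctness reward. The function names are illustrative, and the KL penalty and token-level bookkeeping are left out.

```python
from statistics import mean, pstdev
from typing import List


def group_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Normalize each output's reward against its own sampling group.

    Outputs better than the group average get a positive advantage and worse
    ones a negative advantage; no learned value network is needed.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)   # population std; implementations differ on this choice
    return [(r - mu) / (sigma + eps) for r in rewards]


def clipped_objective(ratio: float, advantage: float, clip_eps: float = 0.2) -> float:
    """PPO-style clipped surrogate term that GRPO applies to each sampled output.

    `ratio` is pi_new(output) / pi_old(output). GRPO's KL penalty against a
    reference model is omitted from this sketch.
    """
    clipped_ratio = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
    return min(ratio * advantage, clipped_ratio * advantage)


# Four answers sampled for one prompt, scored 1 if correct and 0 otherwise.
advantages = group_advantages([1.0, 0.0, 0.0, 1.0])
print([round(a, 3) for a in advantages])
```

If every output in a group receives the same reward, all advantages are zero and that prompt contributes no learning signal, which is the expected behavior of the group-relative baseline.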

As AI research progresses, quality is increasingly taking precedence over quantity, especially in world models. New JEPA variants and algorithmic advances point to a growing focus on more sophisticated models, and the release of Google’s Genie 3, among other work, reflects the area’s growing momentum.

NVIDIA remains the most valuable company in the AI sector, yet recent developments show that top models can now be trained without its hardware. Google’s training of its Gemini models on its own TPU hardware marks a significant milestone. Meanwhile, China is working to build its own hardware ecosystem to reduce its dependence on NVIDIA.

More broadly, excitement about superhuman AI has cooled, and predictions for its arrival have been pushed back. Attention has shifted to more realistic timelines for advanced capabilities such as the Automatic Coder, now expected by 2031. This tempered enthusiasm reflects how difficult the ambitious goals originally set for artificial general intelligence have proven to be.

2025 also saw substantial progress in applying AI to scientific research. Work produced by systems such as AI Scientist-v2 has passed peer review, a step forward in using agents for science. This growing intersection of AI and scientific inquiry suggests that AI could play a critical role in research and discovery.

As the year closes, AI agents appear set for further developments in 2026 and beyond. The groundwork laid in 2025 suggests that the industry will keep refining agent technology, which could lead to more reliable, versatile, and impactful applications across many sectors.
