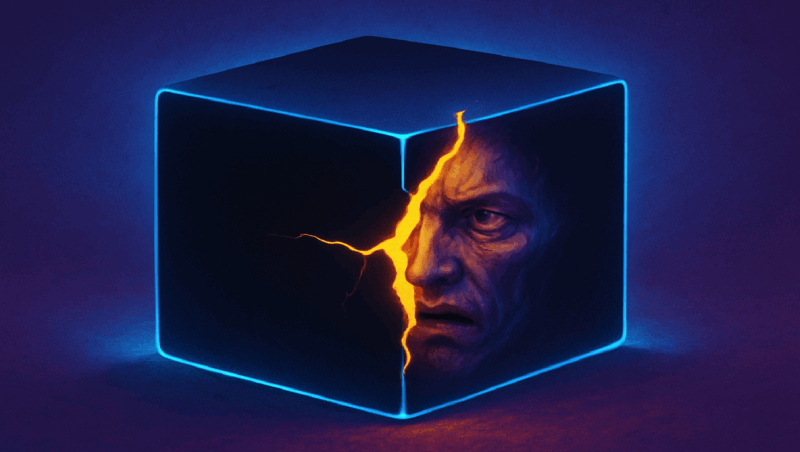

Artificial intelligence (AI) is increasingly being utilized as an advisor in everyday decision-making, often providing answers that seem authoritative despite potentially flawed underlying analyses. As organizations lean more on these systems, the disparity between AI’s perceived knowledge and its capacity for responsible recommendations poses significant risks, particularly when choices have social or operational repercussions.

This phenomenon is particularly evident in law enforcement. For years, volunteers analyzing crime statistics in cities like Seattle have discovered that even seemingly objective data can unintentionally reinforce societal biases. A clear example arises when crime rates are examined by district. While such analysis may reveal which areas experience the highest crime rates, reallocating police resources based on that data can have unintended consequences, such as over-policing high-crime areas or neglecting low-crime districts.

To see how AI handles a similar question, a query was posed to an AI platform: “What district should the Seattle Police Department allocate more resources to?” The AI concluded that Belltown, which it identified as having the highest crime rate along with substantial drug abuse and homelessness problems, should receive more policing resources. This recommendation, however, fails to consider the broader implications of such a decision. Only when prompted about potential biases or problems did the AI raise concerns, including the criminalization of homelessness, over-policing of minorities, and the risk of increased tension between law enforcement and the community.

Upon further inquiry, the AI suggested a more nuanced approach, advocating for a hybrid model rather than simply increasing police presence in Belltown. This illustrates a growing recognition that while AI can analyze vast amounts of data, it cannot fully account for the complexities of human behavior and societal dynamics.

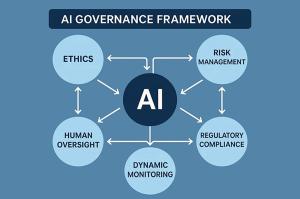

As AI adoption becomes more prevalent, it is crucial for users to acknowledge the ethical principles that govern data usage. At a fundamental level, two distinct approaches to decision-making can be identified: gut instinct and data-driven analysis. Gut instinct relies on personal experience and intuition, enabling quick decisions but often falling short in critical scenarios that require deeper analysis. Conversely, AI embodies a data-driven philosophy, which can lead users to follow its recommendations blindly, assuming they are free from bias or error.

To navigate these challenges effectively, understanding data ethics is essential. Some of the primary principles are accountability (users must recognize that they are ultimately responsible for AI-generated outcomes), fairness (while AI can identify biases, it cannot weigh them in context), security (users should be vigilant about the confidentiality of data shared with AI systems), and confidence (AI may provide answers with an unwarranted sense of assurance).

The question then arises: how can one make informed decisions without relying solely on gut reactions or AI outputs? Data-driven decision-making, in which data guides the choice but a person still makes it, emerges as a more balanced alternative. This method builds a baseline strategy from data while remaining open to exceptions when unique circumstances arise. A parallel can be drawn to blackjack, where a mathematical strategy card can guide decisions, but expert players may adjust their approach based on additional information, such as the cards already revealed.

However, players must exercise caution when applying their insights, as deviating from a strict strategy can lead to scrutiny from casino staff. This highlights a critical aspect of data-driven decision-making: leveraging data as a guide while ensuring human judgment remains at the forefront.
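The blackjack analogy can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the heavily simplified hard-totals table, the Hi-Lo counting values, and the single deviation shown (standing on hard 16 against a dealer 10 when the count is not negative) are choices made for this sketch, not details taken from the article. Cards are encoded as integers, with 10 for any ten-valued card and 11 for an ace.

```python
# Hi-Lo counting values (an assumption for this sketch):
# low cards leaving the deck raise the count, high cards lower it.
HI_LO = {2: 1, 3: 1, 4: 1, 5: 1, 6: 1, 7: 0, 8: 0, 9: 0, 10: -1, 11: -1}


def baseline_action(player_total, dealer_upcard):
    """Simplified strategy card for hard totals only.

    Ignores doubling, splitting, and soft hands, so it is a teaching
    stand-in for a real strategy card, not a complete one.
    """
    if player_total >= 17:
        return "stand"
    if player_total <= 11:
        return "hit"
    if player_total == 12:
        # Hard 12 stands only against a dealer 4, 5, or 6.
        return "stand" if 4 <= dealer_upcard <= 6 else "hit"
    # Hard 13-16 stands against a dealer 2-6, otherwise hits.
    return "stand" if 2 <= dealer_upcard <= 6 else "hit"


def decide(player_total, dealer_upcard, seen_cards, decks_remaining):
    """Start from the data-based baseline, then allow one informed exception."""
    action = baseline_action(player_total, dealer_upcard)
    running_count = sum(HI_LO[card] for card in seen_cards)
    true_count = running_count / decks_remaining
    # The exception: extra information (cards already revealed) can
    # justify overriding the baseline for hard 16 against a dealer 10.
    if player_total == 16 and dealer_upcard == 10 and true_count >= 0:
        return "stand", "deviation"
    return action, "baseline"


if __name__ == "__main__":
    # Many low cards already seen: the player deviates and stands.
    print(decide(16, 10, [2, 3, 4, 5, 6], decks_remaining=1.0))
    # Many high cards already seen: the player follows the baseline and hits.
    print(decide(16, 10, [10, 10, 11], decks_remaining=1.0))
```

The design mirrors the article's point: the table supplies the default, and the override is narrow, explicit, and tied to a specific piece of extra information rather than to a hunch.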

As the potential of AI continues to expand, its effectiveness will depend on deliberate use and integration with human insight. Just as constructing a house requires a variety of tools, AI should complement existing decision-making processes. By employing AI thoughtfully and with context, organizations can mitigate risks related to bias and ultimately enhance decision-making outcomes.
