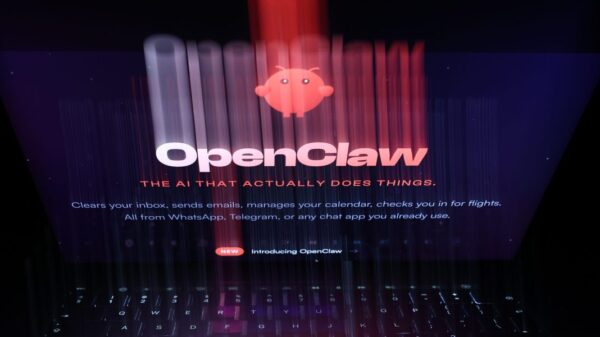

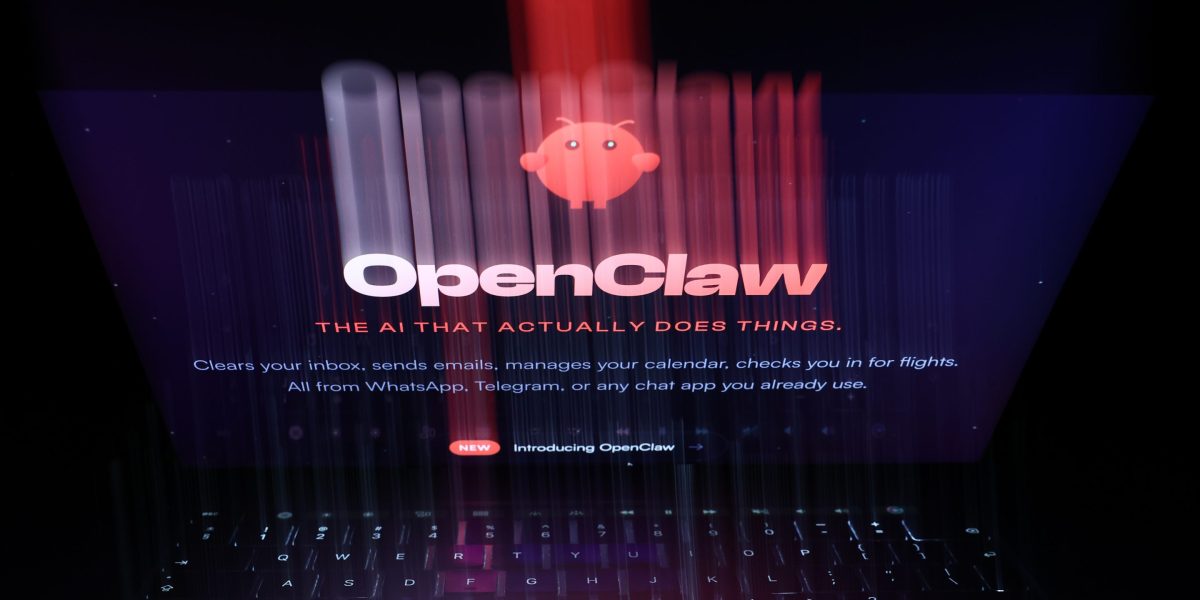

In a rapidly evolving AI landscape, the release of OpenClaw has sparked significant interest as well as concern among industry experts. Developed by Peter Steinberger, this free, open-source autonomous AI agent allows users to customize its capabilities, enabling it to interact with various applications and perform tasks such as sending emails and making restaurant reservations. This unprecedented level of autonomy has garnered a devoted following, but it also raises serious security issues that could have far-reaching implications.

OpenClaw’s ability to act independently presents both exciting opportunities and considerable risks. Cybersecurity experts warn that this flexibility can lead to unintended consequences, such as data leakage and unauthorized actions, often exacerbated by user misconfigurations. Ben Seri, co-founder and CTO at Zafran Security, emphasized the lack of safeguards surrounding OpenClaw, stating, “The only rule is that it has no rules.” While this approach attracts users interested in pushing the boundaries of AI, it also creates fertile ground for security breaches.

Colin Shea-Blymyer, a research fellow at Georgetown’s Center for Security and Emerging Technology, echoed these concerns, describing the classic risks associated with AI systems and the implications of “skills,” the plugins that give OpenClaw its functionality. Unlike traditional applications, OpenClaw autonomously decides when and how to use these skills. Shea-Blymyer offered a pointed example: “Imagine using it to access the reservation page for a restaurant and it also having access to your calendar with all sorts of personal information.” Because the agent, not the user, chooses which skills to invoke, every permission granted to it widens the potential for exposure, making it crucial for users to understand the implications of their configurations.

Despite the potential for misuse, Shea-Blymyer acknowledged that OpenClaw’s emergence at the hobbyist level offers an opportunity for learning. He stated, “We will learn a lot about the ecosystem before anybody tries it at an enterprise level.” This environment allows for experimentation that could inform how enterprise systems might eventually incorporate similar autonomous functionalities. However, both experts agree that enterprise adoption will be slow, primarily due to the inherent risks associated with a system that lacks control mechanisms.

As the excitement around OpenClaw grows, so too does the scrutiny of its security implications. Users intrigued by OpenClaw’s capabilities are advised to adopt a cautious approach. Shea-Blymyer warned, “Unless someone wants to be the subject of security research, the average user might want to stay away from OpenClaw.” The potential for an AI agent to inadvertently cause harm underscores the need for careful consideration and responsible experimentation.

In a parallel development, Anthropic, a notable player in the AI sector, has announced a $20 million contribution to a super PAC aimed at promoting stronger AI safety regulations. This move sets it in direct opposition to OpenAI, which is backing candidates less inclined to emphasize these regulations. As the debate over AI governance intensifies, these initiatives highlight a growing divide within the industry on how to balance innovation with safety.

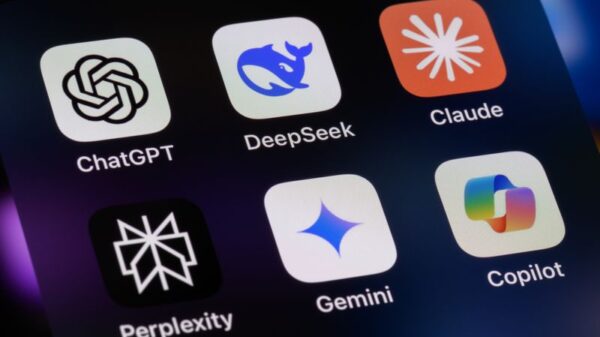

In other news, OpenAI has introduced its first model designed for rapid output, named GPT-5.3-Codex-Spark. This model leverages technology from Cerebras to deliver ultra-low-latency, real-time coding, a significant step forward in the practical applications of AI in software development. OpenAI’s focus on speed reflects a broader trend toward enhancing AI interactivity, particularly as these agents take on more autonomous roles.

Amid these developments, Anthropic has also committed to absorbing rising electricity costs at its AI data centers, asserting that these costs will not be passed on to consumers. This initiative is part of a broader strategy to ensure that the costs associated with building AI infrastructure do not burden everyday ratepayers.

Lastly, Isomorphic Labs, affiliated with Alphabet and DeepMind, has unveiled a new drug design engine that the company claims outperforms previous models in predicting biological interactions. If the claim holds, the engine could accelerate drug discovery and change how pharmaceutical research tackles complex challenges.

As the AI landscape continues to evolve, the intersection of innovation and security will remain crucial. While platforms like OpenClaw offer exciting possibilities, they also serve as a reminder of the responsibilities that come with unprecedented technological power.
