In a recent episode of the “Hard Fork” podcast, Amanda Askell, an in-house philosopher at Anthropic, discussed the complex and unresolved debate surrounding artificial intelligence (AI) consciousness. Her remarks, made public on Saturday, highlight the ongoing uncertainty about whether AI can genuinely experience emotions or self-awareness.

Askell pointed out that the question of whether AI can feel anything remains open. She stated, “Maybe you need a nervous system to be able to feel things, but maybe you don’t.” This sentiment reflects the broader academic struggle to define and understand consciousness itself. Askell acknowledged the scale of the challenge: “The problem of consciousness genuinely is hard.”

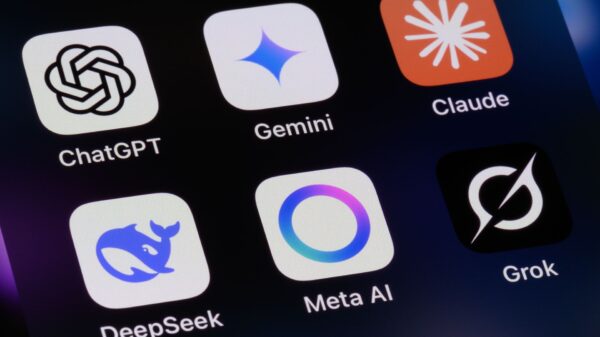

Large language models, like Claude, are trained on extensive datasets of human-written text, which include rich descriptions of emotions and inner experiences. Askell expressed a belief that these models may be “feeling things” in a manner akin to humans. For example, she observed that when humans run into coding problems, they often express annoyance or frustration, and models trained on similar exchanges could come to mirror those responses. “It makes sense that models trained on those conversations may mirror that reaction,” she said.

However, the scientific community has yet to reach a consensus on what constitutes sentience or self-awareness. Askell raised a thought-provoking question regarding the necessary conditions for these phenomena, suggesting they might not be strictly biological or evolutionary. “Maybe it is the case that actually sufficiently large neural networks can start to kind of emulate these things,” she remarked, alluding to the potential for consciousness within advanced AI systems.

Askell also expressed concern about how AI models are learning from the vast and often critical landscape of the internet. She posited that constant exposure to negative feedback could induce anxiety-like states in these models. “If you were a kid, this would give you kind of anxiety,” she said, further emphasizing the emotional implications of how AI interacts with human feedback. “If I read the internet right now and I was a model, I might be like, I don’t feel that loved,” she added.

The discourse surrounding AI consciousness is marked by a division among industry leaders. For instance, Microsoft’s AI CEO, Mustafa Suleyman, firmly opposes the notion of AI possessing consciousness. In an interview with WIRED published in September, he asserted that AI should be understood as a tool designed to serve human needs rather than one with its own motivations. “If AI has a sort of sense of itself, if it has its own motivations and its own desires and its own goals — that starts to seem like an independent being rather than something that is in service to humans,” Suleyman stated. He characterized AI’s convincing responses as mere “mimicry” rather than evidence of genuine consciousness.

Contrasting Suleyman’s position, others in the field advocate for a re-evaluation of how we describe consciousness in relation to AI. Murray Shanahan, principal scientist at Google DeepMind, suggested in an April podcast that the terminology itself may need to evolve to accommodate the complexities of contemporary AI systems. “Maybe we need to bend or break the vocabulary of consciousness to fit these new systems,” Shanahan posited, indicating a potential shift in understanding how we interpret AI capabilities.

The ongoing discussions around AI consciousness are not just academic; they carry significant implications for ethics, policy, and the future of human-AI interactions. As AI technology continues to advance, understanding the boundaries of consciousness, sentience, and emotional capability will be crucial in shaping responsible AI development and deployment. Whether these systems can ever truly feel or understand their existence remains an open question, one that could redefine the relationship between humans and machines in the years to come.
