OpenAI has unveiled its latest coding model, Codex-Spark, which generates output at 1,000 tokens per second. While noteworthy, this figure is modest by the standards of Cerebras Systems, whose hardware runs the model: Cerebras has reported 2,100 tokens per second on the Llama 3.1 70B model and up to 3,000 tokens per second on OpenAI’s own gpt-oss-120B model. Codex-Spark’s comparatively lower speed likely reflects the overhead of running a larger or more complex model.
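To put those throughput figures in perspective, the short Python sketch below converts tokens per second into wall-clock time for a single response. The three rates come from the figures above; the 2,000-token response size and the function names are hypothetical, chosen only for illustration, and the calculation ignores network latency and time to first token.

```python
# Rough wall-clock comparison of the throughput figures quoted in the article.
# Rates are tokens per second; the response length is an assumed example value.

RATES = {
    "Codex-Spark": 1000,
    "Llama 3.1 70B on Cerebras": 2100,
    "gpt-oss-120B on Cerebras": 3000,
}

RESPONSE_TOKENS = 2000  # hypothetical size of one generated code response


def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Return the time in seconds to emit num_tokens at a steady rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


def compare(num_tokens: int, rates: dict) -> dict:
    """Map each model name to its estimated generation time in seconds."""
    return {name: generation_seconds(num_tokens, rate) for name, rate in rates.items()}


if __name__ == "__main__":
    for name, seconds in compare(RESPONSE_TOKENS, RATES).items():
        print(f"{name}: {seconds:.2f} s for {RESPONSE_TOKENS} tokens")
```

Under these assumptions, a 2,000-token response takes about two seconds at Codex-Spark's rate and well under one second at 3,000 tokens per second.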

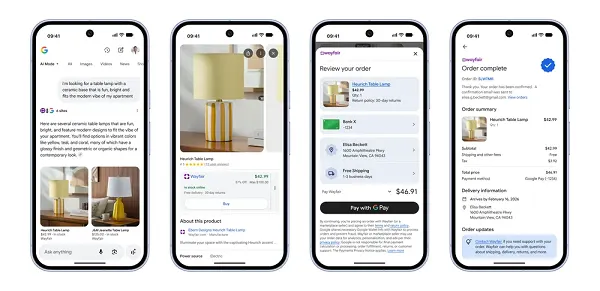

This year has marked a significant advancement for AI coding agents, with tools like OpenAI’s Codex and Anthropic’s Claude Code demonstrating enhanced capabilities for developing prototypes and boilerplate code efficiently. In a rapidly evolving tech landscape, latency has emerged as a critical differentiator, with faster coding models enabling developers to iterate more swiftly. The competitive atmosphere has pushed OpenAI and its rivals, including Anthropic and Google, to expedite their development cycles.

OpenAI’s Codex line has seen two rapid iterations in recent months: GPT-5.2 was released in December 2025 after CEO Sam Altman issued a “code red” memo in response to mounting competitive pressure from Google, and the latest iteration, GPT-5.3-Codex, was launched just days ago.

The infrastructure underpinning Codex-Spark is significant not only for its performance metrics but also for its hardware implications. The model runs on Cerebras’ Wafer Scale Engine 3, a wafer-scale chip far larger than a conventional GPU die, which has been central to Cerebras’ business strategy since 2022. The partnership between OpenAI and Cerebras was formalized in January, and Codex-Spark is the first product of the collaboration.

In a calculated move to diversify its technology sources, OpenAI has been reducing its reliance on Nvidia over the past year. Key developments include a substantial multi-year agreement with AMD signed in October 2025, a $38 billion cloud computing deal with Amazon announced in November, and the design of a proprietary AI chip slated for fabrication by TSMC. Although OpenAI had initially sought a $100 billion infrastructure deal with Nvidia, this has yet to materialize, even as Nvidia has committed to a $20 billion investment.

Reports indicate that OpenAI has grown dissatisfied with the speed of certain Nvidia chips for inference tasks, which is precisely the type of workload Codex-Spark is intended to address. In AI development, speed matters a great deal, even if it may come with trade-offs in accuracy. For developers who rely on AI suggestions while coding, 1,000 tokens per second could feel more like a chainsaw than a precision tool, so the output warrants careful review.

As AI coding tools continue to evolve, the interplay between speed and complexity will likely shape the future of software development. Companies like OpenAI and Cerebras are poised to play pivotal roles in this transformation, as they seek to refine their models and enhance their hardware capabilities in an increasingly competitive market.
