South Korea has introduced a comprehensive regulatory framework for artificial intelligence (AI), a significant step that could serve as a model for other nations. The AI Basic Act, which took effect on Thursday, comes amid growing global concern over AI-generated content and automated decision-making, as governments struggle to keep pace with rapid technological change.

The legislation requires companies to label AI-generated content, a requirement that has drawn criticism from both tech startups and civil society groups. Startups argue that the rules are overly restrictive, while user-rights advocates say they do not go far enough to protect individuals from the potential harms of AI.

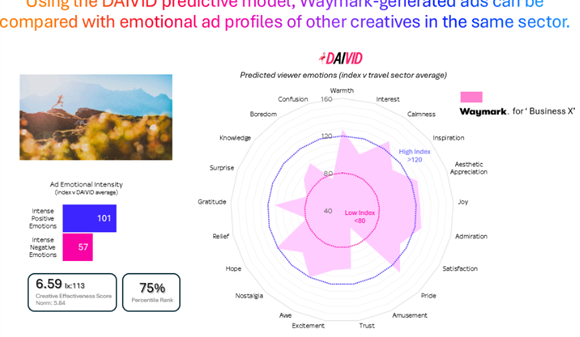

Under the new law, firms providing AI services must implement several measures. They must add invisible digital watermarks to clearly artificial outputs, such as cartoons and artwork, and visible labels to realistic deepfake content. Operators of so-called “high-impact AI,” which covers systems used for medical diagnoses, hiring, and loan approvals, must also conduct risk assessments and document how their systems reach decisions. However, if a human makes the final decision, a system may not fall under this classification.

Extremely powerful AI models must also submit safety reports, although the threshold is set so high that government officials acknowledge no model in the world currently meets it. Violations can result in fines of up to 30 million won (approximately £15,000), but officials have promised a grace period of at least one year before penalties are enforced.

Dubbed the “world’s first” legislation of its kind to be fully enforced, this act aligns with South Korea’s ambition to establish itself as one of the top three global AI powers, alongside the United States and China. Government representatives assert that the law is primarily focused on promoting industry growth rather than imposing restrictive measures.

Alice Oh, a computer science professor at the Korea Advanced Institute of Science and Technology (KAIST), said that while the law is not without flaws, it is designed to evolve without stifling innovation. Nonetheless, a survey by the Startup Alliance found that 98% of AI startups are not prepared to comply, amid widespread frustration in the entrepreneurial community.

Companies must determine for themselves whether their systems qualify as high-impact AI, a process critics describe as lengthy and fraught with uncertainty. There are also concerns about competitive imbalance: all Korean companies are subject to the rules, but only foreign firms that meet certain thresholds, such as Google and OpenAI, are required to comply.

This regulatory push comes against a backdrop of serious domestic problems. A report by Security Hero, a US-based identity protection firm, found that South Korea accounts for 53% of deepfake pornography victims worldwide. The issue escalated in August 2024, when investigations uncovered extensive Telegram networks distributing AI-generated sexual imagery of women and girls, underscoring the urgent need for robust regulation.

Although the law’s origins date back to July 2020, before this crisis, it was delayed amid accusations that it put industry interests ahead of protecting citizens. Civil society organizations argue that the law offers limited safeguards for individuals affected by AI systems. In a joint statement, four groups, including Minbyun, a collective of human rights lawyers, said the legislation primarily protects institutions rather than citizens.

The country’s human rights commission has also criticized the enforcement decree for lacking clear definitions of high-impact AI, which it says leaves those most likely to suffer rights violations unprotected. In response, the Ministry of Science and ICT said it expects the new law to “remove legal uncertainty” and foster a “healthy and safe domestic AI ecosystem,” and that it will continue to clarify the rules through revised guidelines.

Experts say South Korea’s approach differs from those adopted elsewhere. Rather than the European Union’s strict risk-based model, the sector-specific and market-driven strategies of the United States and the United Kingdom, or China’s blend of state-led industrial policy and detailed service regulation, South Korea has opted for a more flexible, principles-based framework. Melissa Hyesun Yoon, a law professor at Hanyang University, described this approach as “trust-based promotion and regulation,” suggesting that Korea’s framework may serve as a reference point in global AI governance discussions.
