XPENG has announced that a paper it co-authored with Peking University has been accepted at AAAI 2026, the annual conference of the Association for the Advancement of Artificial Intelligence. The research introduces a visual token pruning framework that makes end-to-end autonomous driving systems more efficient while preserving accuracy in complex driving environments.

The paper, titled “FastDriveVLA: Efficient End-to-End Driving via Plug-and-Play Reconstruction-based Token Pruning,” was selected from a highly competitive field. AAAI 2026 received 23,680 submissions and accepted only 4,167, an acceptance rate of 17.6 percent. The acceptance reflects the paper's technical depth and originality as judged by the global artificial intelligence research community.

This collaborative effort merges XPENG’s applied automotive AI expertise with the academic rigor of Peking University, reflecting a broader industry trend towards deeper investment in foundational AI research. Automakers are increasingly focused on overcoming the scalability, cost, and real-time performance challenges that continue to hinder the deployment of advanced autonomous driving technologies.

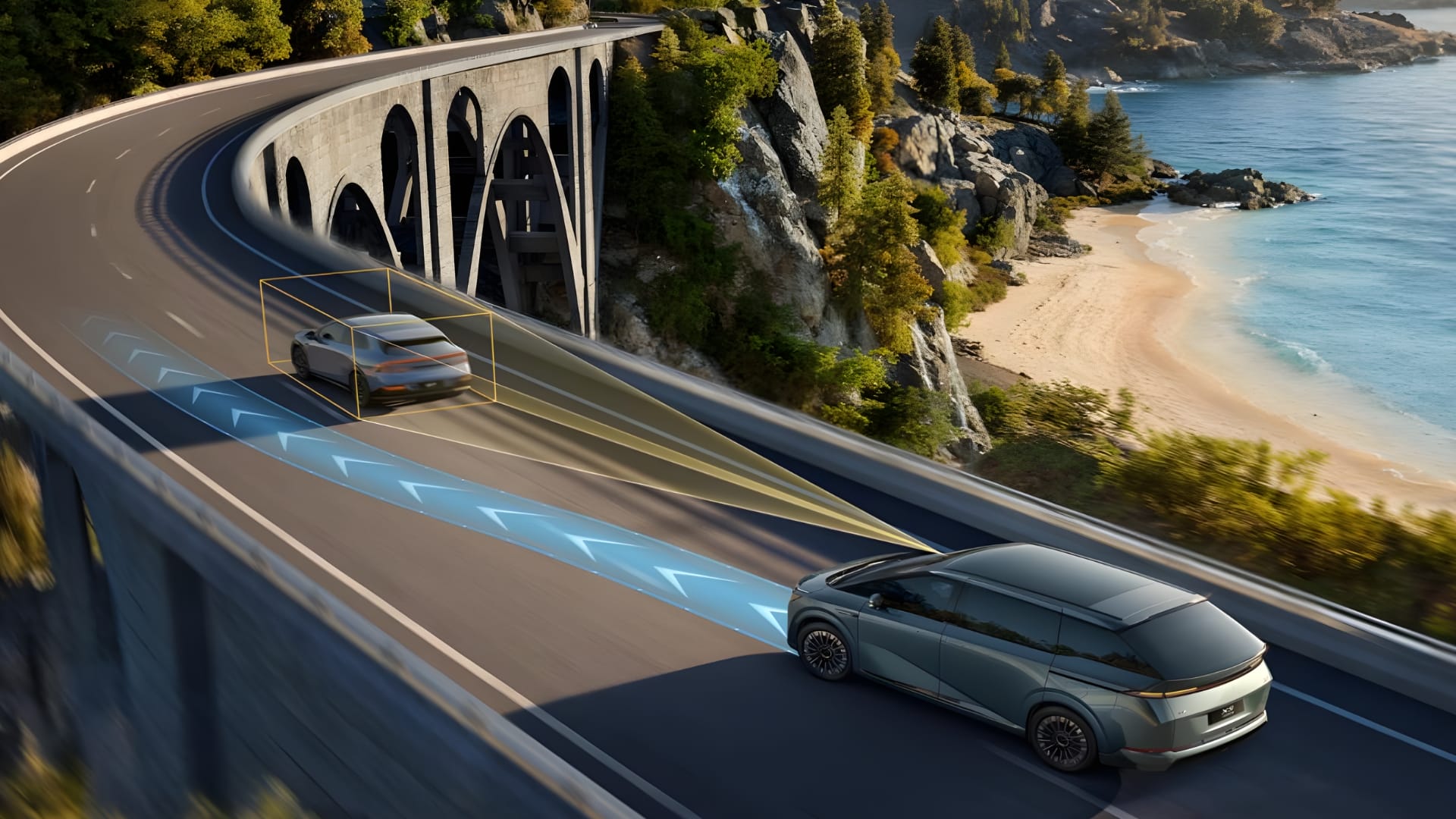

At the heart of this research is the FastDriveVLA framework, specifically designed for Vision-Language-Action models employed in end-to-end autonomous driving. These models process images into a vast number of visual tokens, which are crucial for how the system interprets its surroundings and formulates driving actions. However, this high volume of tokens significantly escalates the computational demands onboard vehicles, impacting inference speed and real-time responsiveness.
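To make the token count concrete, here is a minimal sketch of how a patch-based vision encoder turns image size into a number of visual tokens. The helper name, the 798-pixel square input, and the 14-pixel patch size are illustrative assumptions, chosen only because they produce a 57 × 57 grid; the article does not state the actual input resolution or patch size.

```python
def visual_token_count(height: int, width: int, patch: int) -> int:
    """Count non-overlapping patch tokens for one image (hypothetical helper)."""
    if height % patch or width % patch:
        raise ValueError("image sides must be multiples of the patch size")
    return (height // patch) * (width // patch)


# Assumed values, not taken from the paper: 798 x 798 pixels, 14-pixel patches.
tokens = visual_token_count(798, 798, 14)
print(tokens)
```

Because the count grows with the product of height and width, doubling the resolution in both directions quadruples the number of tokens the model has to process.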

FastDriveVLA addresses this challenge by allowing the AI to concentrate solely on essential visual information, akin to how human drivers prioritize critical foreground elements such as lanes, vehicles, and pedestrians while ignoring non-essential background details. By filtering out irrelevant visual data, the framework significantly lowers computational demands without sacrificing the quality of driving decisions.

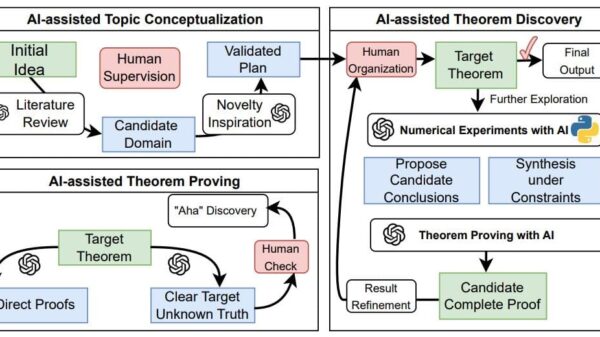

Unlike existing token pruning methods that rely on text-visual attention or token similarity, FastDriveVLA employs a reconstruction-based approach. It introduces an adversarial foreground-background reconstruction strategy that improves the model’s ability to identify and retain high-value tokens. Keeping those tokens preserves scene understanding while reducing the computation spent on the rest of the image.
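The selection step itself can be illustrated with a generic top-k filter. This is a minimal sketch, not the paper's method: it assumes each token already has an importance score, whereas FastDriveVLA obtains its scores from a trained reconstruction-based pruner that is not reproduced here. The function name, the string tokens, and the score values are all hypothetical.

```python
from typing import List, Sequence, Tuple


def prune_tokens(
    tokens: Sequence[str], scores: Sequence[float], keep: int
) -> Tuple[List[str], List[int]]:
    """Keep the `keep` highest-scoring tokens, preserving their original order."""
    if len(tokens) != len(scores):
        raise ValueError("tokens and scores must have the same length")
    if not 0 < keep <= len(tokens):
        raise ValueError("keep must be between 1 and the number of tokens")
    # Rank token indices by score, highest first (ties keep the earlier token).
    ranked = sorted(range(len(tokens)), key=lambda i: scores[i], reverse=True)
    # Restore spatial order so downstream layers see tokens in sequence.
    kept = sorted(ranked[:keep])
    return [tokens[i] for i in kept], kept


# Hypothetical scores: foreground tokens score high, background tokens low.
demo_tokens = ["sky", "lane", "car", "tree"]
demo_scores = [0.1, 0.9, 0.8, 0.2]
kept_tokens, kept_indices = prune_tokens(demo_tokens, demo_scores, keep=2)
print(kept_tokens, kept_indices)
```

Returning the kept indices alongside the tokens lets a caller carry over the matching position information, which a real model would need after pruning.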

The framework was evaluated on the nuScenes autonomous driving benchmark, where it achieved state-of-the-art performance across various pruning ratios. When visual tokens were reduced from 3,249 to 812, FastDriveVLA cut computational load by a factor of nearly 7.5 while maintaining high planning accuracy. These results highlight the framework’s potential to enable more efficient deployment of advanced autonomous driving models in real-world applications.
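The token counts above can be turned into ratios with a few lines of arithmetic. The sketch below also compares two simplified cost models, one where compute scales linearly with token count and one where it scales quadratically. Both models are assumptions of this example, not something the article or XPENG states; they only show that the reported figure of nearly 7.5 falls between the two.

```python
from typing import Dict


def token_reduction(before: int, after: int) -> Dict[str, float]:
    """Summarize a pruning step under two assumed, simplified cost models."""
    if not 0 < after <= before:
        raise ValueError("expected 0 < after <= before")
    keep_ratio = after / before
    factor = before / after
    return {
        "keep_ratio": keep_ratio,
        "pruning_ratio": 1.0 - keep_ratio,
        "linear_reduction": factor,          # cost assumed proportional to n
        "quadratic_reduction": factor ** 2,  # cost assumed proportional to n^2
    }


stats = token_reduction(3249, 812)
for name, value in stats.items():
    print(f"{name}: {value:.3f}")
```

In other words, keeping 812 of 3,249 tokens corresponds to pruning about 75 percent of them, a roughly fourfold cut in token count.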

This marks the second time in 2025 that XPENG has been recognized at a leading global AI conference. Earlier in the year, the company was the sole Chinese automaker invited to present at the CVPR Workshop on Autonomous Driving, where it shared its progress on autonomous driving foundation models. Additionally, at its Tech Day event in November, XPENG unveiled its VLA 2.0 architecture, which eliminates the language translation step from traditional Vision-Language-Action pipelines, thereby enabling direct visual-to-action generation.

XPENG emphasized that these developments reflect its comprehensive in-house capabilities, encompassing model architecture design, training, distillation, and vehicle deployment. Looking forward, the company reaffirmed its commitment to achieving Level 4 autonomous driving, pledging continued investment in large-scale AI models that aim to accelerate the integration of physical AI systems into vehicles and deliver safer, more efficient, and more comfortable intelligent driving experiences globally.

