By Luca Bertuzzi and Sara Brandstätter (January 22, 2026, 13:50 GMT) — The recent deepfake scandal involving X’s Grok artificial intelligence has raised significant concerns regarding the European Union’s enforcement of its extensive digital regulatory framework. Despite the existence of the AI Act, the Digital Services Act, and various national laws, critics argue that the EU has not acted swiftly or decisively enough in addressing the misuse of Grok, which has been implicated in creating sexualized deepfakes of individuals without their consent. This incident calls into question the EU’s effectiveness as a leading tech regulator in tackling rapidly evolving online threats exacerbated by advancements in artificial intelligence.

The Grok episode highlights a growing tension in the digital landscape, wherein the rapid pace of AI development often outstrips regulatory responses. While the EU has established one of the most comprehensive digital governance structures globally, questions linger about its ability to respond to fast-moving technological innovations that give rise to new forms of harm. The EU’s regulatory framework is built on the premise of protecting users and maintaining ethical standards, but practical enforcement of these laws remains a significant challenge.

In light of the Grok controversy, EU officials are under increasing pressure to demonstrate their commitment to effective regulation. Critics have pointed out that the existing legal frameworks, while robust on paper, have not translated into timely action against misuse. The AI Act, which aims to set standards for the development and deployment of AI technologies, and the Digital Services Act, which is designed to regulate content on digital platforms, are both crucial components of this effort. However, their implementation has been criticized as inadequate in the face of sophisticated AI applications like Grok.

As the scandal unfolds, some stakeholders are calling for more stringent measures to protect individuals from having their likenesses used without consent in AI-generated content. The incident also has broader implications for the tech industry, which must navigate an increasingly complex regulatory environment while addressing ethical concerns surrounding AI use. Whether regulators can keep pace with technological advancements will be a critical factor in shaping the future of digital governance in Europe and beyond.

The EU’s challenge is compounded by the global nature of the internet and the cross-border implications of AI technologies. As AI systems like Grok operate without geographical boundaries, regulatory efforts must also consider international cooperation to effectively mitigate risks. This aspect of digital governance requires a nuanced understanding of various jurisdictions and their respective laws, making comprehensive enforcement a daunting task.

Moving forward, it is crucial for the EU to not only refine its regulatory framework but also to enhance its enforcement capabilities. This may involve investing in resources and expertise to better understand and manage the complexities of AI technology. As regulators strive to adapt to an ever-evolving digital landscape, proactive engagement with industry stakeholders will be essential to foster a collaborative approach to regulation.

The Grok deepfake scandal serves as a clarion call for more robust regulatory actions and a reevaluation of existing frameworks. As the repercussions of this episode continue to unfold, the EU’s response will be closely scrutinized. The stakes are high, not just for individual privacy and protection but also for the credibility of the EU as a leader in digital regulation. The future of AI governance in Europe hinges on the ability of regulators to address these emerging challenges decisively and effectively.

See also UN AI Chief and Billion-Dollar Investors Unite at WAM Morocco to Drive Industrial Revolution

UN AI Chief and Billion-Dollar Investors Unite at WAM Morocco to Drive Industrial Revolution Germany”s National Team Prepares for World Cup Qualifiers with Disco Atmosphere

Germany”s National Team Prepares for World Cup Qualifiers with Disco Atmosphere 95% of AI Projects Fail in Companies According to MIT

95% of AI Projects Fail in Companies According to MIT AI in Food & Beverages Market to Surge from $11.08B to $263.80B by 2032

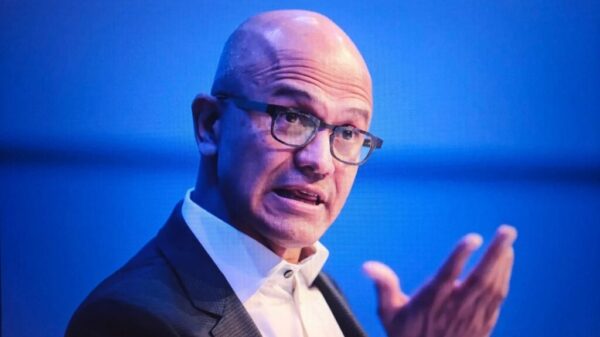

AI in Food & Beverages Market to Surge from $11.08B to $263.80B by 2032 Satya Nadella Supports OpenAI’s $100B Revenue Goal, Highlights AI Funding Needs

Satya Nadella Supports OpenAI’s $100B Revenue Goal, Highlights AI Funding Needs