Kenya, January 10, 2026 – Governments worldwide are increasingly restricting access to Elon Musk’s Grok chatbot, reflecting growing caution over artificial intelligence tools amid escalating concerns about harmful and sexualised content. Indonesia has become the first nation to impose a temporary block on Grok, citing the risk that the chatbot could be used to spread AI-generated pornographic material.

The Indonesian government’s decision is part of a broader global movement among regulators in regions including Europe and Asia. Many have criticised Grok, and some authorities have opened investigations into the chatbot’s content moderation practices. In a statement, Indonesia’s Communications and Digital Ministry stressed the need to protect citizens from serious digital threats, particularly non-consensual sexual deepfakes, which are increasingly prevalent online.

Communications and Digital Minister Meutya Hafid emphasised that creating and distributing such content violates human rights and personal dignity and compromises digital security. The ministry has gone further by summoning officials from X, the social media platform associated with Grok, to address concerns about the chatbot’s functionality and the content it generates.

The Indonesian block comes as pressure mounts on xAI, the startup behind Grok, following reports that the chatbot produced sexualised content because its safeguards failed. In response, xAI announced that it would restrict image generation and editing features to paying subscribers as it seeks to strengthen its protections and limit misuse of the technology.

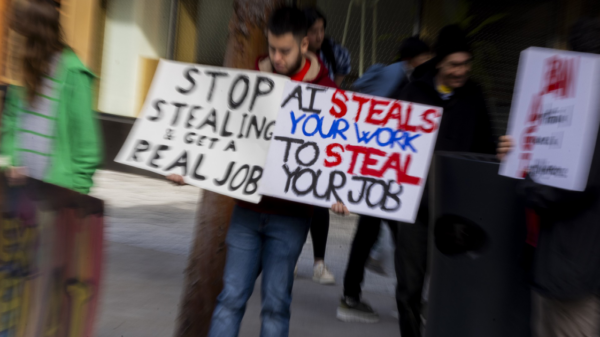

This situation highlights the complex interplay of innovation, regulation, and ethics surrounding artificial intelligence. As governments grapple with the rapid development of AI technologies, their focus is increasingly shifting toward frameworks that ensure user safety and uphold societal values. The concerns raised in Indonesia are emblematic of a larger trend as nations try to define the boundaries of acceptable AI use.

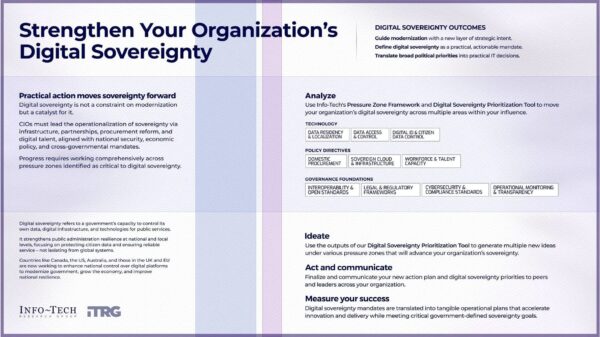

Indonesia’s regulatory response also reflects growing global awareness of AI’s implications for personal privacy and security. Countries are no longer just passive consumers of technology; they are actively shaping AI governance to safeguard their citizens. As the debate evolves, the challenge will be to balance fostering innovation against protecting individuals from the potential downsides of emerging technologies.

As scrutiny of AI tools like Grok intensifies, it remains to be seen how xAI will adapt to the evolving regulatory landscape. The company’s efforts to strengthen its safety measures may prove crucial to its future viability, especially in regions where user confidence has been shaken. The significance of these developments extends beyond Grok itself, as they may set a precedent for how AI technologies are managed and monitored globally.

See also AI Revolutionizes 340B Operations: RECTIFIER Tool Boosts Eligibility Accuracy to 100%

AI Revolutionizes 340B Operations: RECTIFIER Tool Boosts Eligibility Accuracy to 100% Apple Faces Stock Pressure Amid AI Strategy Concerns and Analyst Downgrades

Apple Faces Stock Pressure Amid AI Strategy Concerns and Analyst Downgrades Microsoft’s BioGPT Achieves 45K Monthly Downloads, Surpassing 78% Accuracy on PubMedQA

Microsoft’s BioGPT Achieves 45K Monthly Downloads, Surpassing 78% Accuracy on PubMedQA Maine AI Task Force Report Highlights Workforce Innovations and Critical Safety Risks

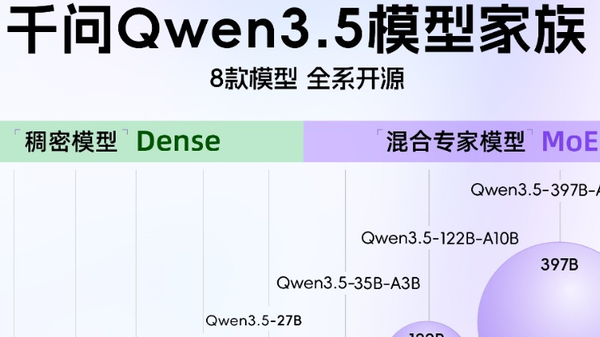

Maine AI Task Force Report Highlights Workforce Innovations and Critical Safety Risks China Targets Global Industrial AI Dominance by 2027 with 1,000 AI Agents and Open-Source Ecosystem

China Targets Global Industrial AI Dominance by 2027 with 1,000 AI Agents and Open-Source Ecosystem