OneTrust released its fifth annual predictions report on January 24, 2026. The report forecasts that governance infrastructure will be what separates AI deployments that scale from pilots that fail. The forecast comes as regulatory frameworks, particularly the EU AI Act, move into enforcement. Obligations for general-purpose AI models took effect in August 2025, and most remaining provisions become enforceable in August 2026. According to the report, 90% of advanced AI adopters have identified fundamental limitations in siloed governance processes, compared with 63% of organizations still in the experimental stage.

The report compares today's AI regulatory challenges to the internet governance debates of the 1990s. In those debates, legal experts argued over whether cyberspace needed a new body of law or whether existing laws could be adapted to it. Andrew Clearwater, a partner at Dentons, remarked, “The present debate over whether we need a ‘law of AI’ or whether we can adapt AI into established legal fields mirrors the decades-old cyberspace debate almost point for point.”

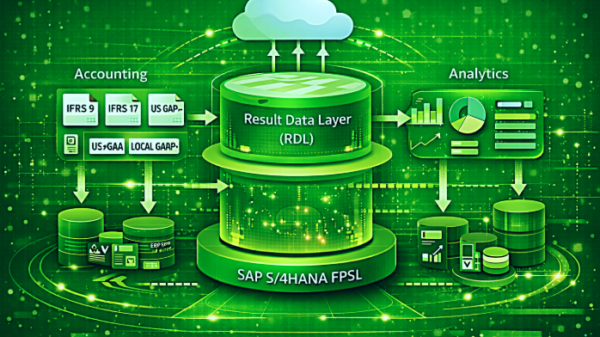

The EU AI Act exemplifies this adaptive approach, and its bans on prohibited practices have applied since February 2025. As the regulatory landscape evolves, organizations face growing pressure to demonstrate effective oversight. Yet data governance gaps continue to undermine confidence in AI across sectors. OneTrust's research indicates that 70% of technology leaders believe their governance capabilities cannot keep pace with their AI initiatives. Ojas Rege, general manager of privacy and data governance at OneTrust, stated, “Governance-by-design is essential because AI scales both good and harm instantly and offers no easy rollback when things go wrong.”

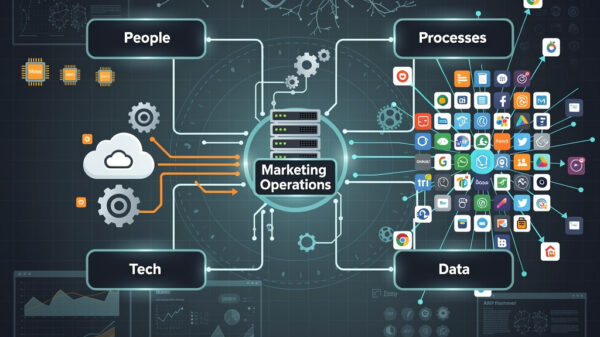

The report's second prediction concerns third-party AI vendors, which are significantly altering organizational risk profiles. As technology vendors build AI capabilities into enterprise operations, risk assessment is changing. Vendor reviews were once primarily security-focused. They now demand rapid evaluation of security standards, data privacy implications, and AI-specific risks. OneTrust's data shows that 82% of technology leaders say AI risks are accelerating their governance modernization timelines.

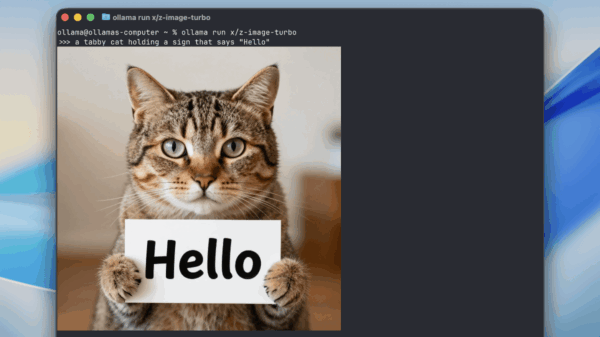

The report also argues that AI agents capable of autonomous action will require governance frameworks to shift from observation to orchestration. Traditional governance systems were designed around predefined processes, and they struggle to keep pace with autonomous reasoning. As agents interact without human prompting, the potential for compliance gaps grows. The report suggests that organizations can establish new standards that embed context and consent into AI interactions, so that governance holds even as AI scales.

Accountability is another central theme, with responsibility shifting from the developers of AI systems to their deployers. Governments are moving toward lighter regulation of AI creators while increasing scrutiny of companies that use AI in practical applications such as hiring and lending. “The regulation of AI is less about new law and more about new accountability under existing laws,” Clearwater explained. The challenge for organizations is to operationalize governance by embedding it into workflows rather than treating it as an afterthought.

The closing prediction stresses speed and discipline in governance. The report notes that many organizations still regard governance as an obstacle rather than an enabler. Kabir Barday, CEO of OneTrust, stated, “Governance is shifting from managing risk to enabling innovation.” As businesses race to capitalize on AI, the gap between ambition and accountability widens. Without effective governance, growth may ultimately be undermined by eroded trust and missed opportunities.

OneTrust's report also surveys the global regulatory landscape, noting more than 3,200 regulatory updates in 2025, many of them focused on AI. The EU AI Act has entered into force, with provisions phased in through 2027. In the United States, multiple states have introduced AI-related legislation. Countries such as South Korea and Brazil are advancing their own regulatory frameworks, and South Korea's AI Basic Act takes effect in January 2026.

The report concludes with insights from industry leaders who advocate collaboration between innovators and regulators. Adrián González Sanchez of Microsoft described the convergence of ethics, responsible AI, and regulation as a powerful lever for governance. The overarching sentiment is that 2026 will be a pivotal year for AI governance. Organizations face the dual challenge of accelerating innovation while upholding ethical standards and regulatory compliance.
