California’s legal profession is on the brink of a major regulatory overhaul as state lawmakers advance legislation imposing stringent verification requirements on attorneys who use artificial intelligence (AI) tools. The California Senate’s approval of the bill on Thursday marks a pivotal moment in balancing technological innovation with professional accountability in one of the nation’s foremost legal markets.

The legislation mandates that lawyers verify the accuracy of all materials generated by generative AI systems before presenting them in court or sharing them with clients. This move addresses a surge in incidents where AI-generated legal documents have featured fabricated case citations, erroneous legal analysis, and entirely fictitious judicial precedents, raising concerns among practitioners and diminishing trust in the legal system.

The proposed regulations emerge amid growing awareness that while generative AI tools can revolutionize legal research and document drafting, they pose significant risks without proper oversight. Attorneys across the country have faced sanctions for submitting briefs containing AI-generated inaccuracies, cases in which large language models confidently asserted false information as fact. These incidents have triggered urgent discussions within bar associations, law firms, and regulatory bodies about which safeguards are needed for AI use in legal practice.

High-profile failures in courtrooms have spurred this legislative response. One notorious case involved attorneys submitting court filings that cited non-existent cases fabricated by their AI research assistant. The fictional precedents, complete with detailed names and docket numbers, crumbled under scrutiny when opposing counsel attempted to find the cited authorities. Such embarrassments have not been limited to California; courts in New York, Texas, and other jurisdictions have issued sanctions against lawyers who neglected to verify AI-generated content, with judges expressing alarm at some practitioners’ apparent willingness to outsource their professional judgment to algorithms.

The California bill extends the verification requirement to client communications and internal work products, recognizing that AI-related risks permeate all aspects of legal practice. Attorneys will be personally responsible for ensuring that AI-generated research reflects existing law, that cited cases are authentic, and that legal analysis meets professional standards of competence and diligence.

The legal industry’s reaction to the proposed regulations is notably divided. Major law firms and legal technology companies worry that overly prescriptive rules could hinder innovation and disadvantage California attorneys compared to their counterparts in less regulated states. Some industry representatives argue existing ethical obligations already require lawyers to supervise their work adequately, rendering additional statutory mandates unnecessary.

Conversely, consumer advocacy groups and legal ethics scholars largely welcome the legislation as a vital safeguard against the reckless deployment of AI systems in high-stakes legal matters. They argue that the rapid advancement of technology has outpaced the legal profession’s ability to establish effective self-regulatory mechanisms, creating an accountability gap that only legislative action can fill. Supporters emphasize that clients, facing inherent information asymmetry, deserve statutory protections they cannot negotiate independently.

Bar associations occupy a middle ground in this debate, acknowledging AI tools’ potential to improve efficiency and access to legal services while insisting on the irreplaceable role of human judgment in legal analysis. Several state bars have issued advisory opinions on AI use; however, these lack enforcement mechanisms and vary substantially in their recommendations, leaving practitioners unsure which practices are acceptable.

Implementing California’s verification mandate poses significant technical and operational challenges for legal professionals. Unlike traditional research methods, where attorneys can trace their analysis back to primary sources, AI-generated content often emerges from opaque processes, which makes specific outputs difficult to audit. Attorneys will need to confirm not just that cited cases exist but also that the AI has characterized them accurately. That check may take nearly as much time as conducting the research from scratch, potentially negating the efficiency gains that motivate AI adoption.

To address these challenges, legal technology vendors are developing tools designed for verification, including AI systems capable of checking other AI outputs against authoritative legal databases. However, these meta-verification tools raise their own reliability questions and may merely relocate, rather than resolve, the fundamental problem of ensuring accuracy. Some commentators speculate that the verification requirement might inadvertently drive the development of more transparent and auditable AI systems as legal technology firms compete to offer compliant products.

California’s AI regulation bears significant implications for the delivery of legal services and access to justice. Proponents of legal technology innovation argue that AI has the potential to democratize legal assistance by reducing costs and enabling lawyers to serve more clients effectively. Document automation and legal research powered by AI could make routine legal services more affordable for middle-class individuals and small businesses currently priced out of the market. However, the verification mandate could limit these benefits by imposing high labor requirements for AI-assisted work, potentially preserving existing economic barriers to legal services.

Supporters of the mandate contend that quality and accuracy must take precedence over efficiency, especially in legal matters where errors can have serious repercussions for clients. They argue that any access-to-justice improvements arising from AI adoption could be illusory if the technology produces unreliable work products that jeopardize clients’ interests. On this view, meaningful access requires not only lower-cost services but also competent representation.

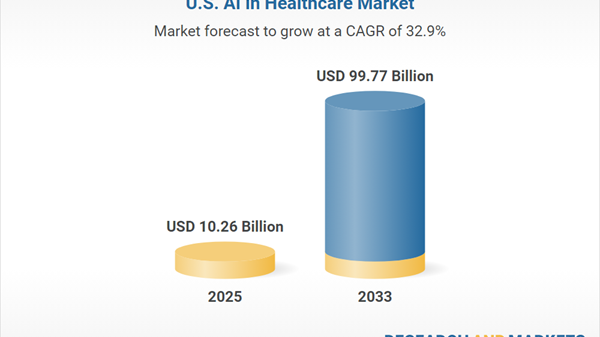

California’s legislative action fits within a broader trend of regulatory experimentation as states grapple with AI governance issues. While several states have introduced rules for AI use in specific sectors like healthcare and finance, comprehensive regulation of AI in professional services remains underdeveloped. California’s approach may serve as a model for other states, or it may prompt competing regulatory frameworks that strike different balances between innovation and risk management.

The interstate implications of California’s verification mandate warrant careful consideration, given the national scope of legal practice. Large law firms often handle cases across multiple jurisdictions, and if California enforces verification standards significantly stricter than those in other states, firms may face a difficult choice between keeping their practices consistent across offices and tailoring them to each jurisdiction, with either path likely to increase compliance costs. Meanwhile, federal agencies are beginning to explore AI governance frameworks, though the pace of action at the national level remains uncertain.

As California moves forward with its AI verification mandate, it raises important questions about the evolving relationship between human expertise and machine intelligence in legal work. The legislation prioritizes caution and accountability over a rapid embrace of potentially unreliable technology, affirming a vision in which attorneys retain ultimate responsibility for work products while using AI as a tool subject to rigorous oversight. Whether this model will remain viable as AI capabilities advance is an open question for the legal profession.
