The cybersecurity landscape is experiencing a seismic shift as artificial intelligence (AI) evolves into both a weapon and a shield. As 2026 progresses, organizations are contending with adversaries who utilize AI to automate reconnaissance, develop intricate social engineering attacks, and exploit vulnerabilities at unprecedented speeds. This acceleration in cyber warfare compels defenders to deploy advanced AI-powered countermeasures or risk being outmaneuvered.

According to Forvis Mazars, the dynamics of cyber threats have transformed significantly: attackers can now automate operations that once required teams of skilled hackers working for extended periods. This shift has compressed the window for incident response from days to mere hours or even minutes, leaving human-only security operations centers struggling to keep pace with the growing volume and sophistication of threats.

The integration of AI into offensive operations has democratized advanced attack techniques, allowing less experienced threat actors to mount attacks that were once beyond their reach. Capabilities that previously required substantial resources and expertise are now available to criminal organizations and even individual hackers, reshaping the threat landscape that security teams must navigate.

Modern attackers have advanced beyond simple automation. They now deploy AI systems capable of learning from past failures and adapting their strategies in real time. These systems analyze vast amounts of publicly available data to identify potential targets, create personalized phishing campaigns that evade conventional detection methods, and even sustain multi-turn conversations while convincingly impersonating trusted entities.

AI-generated deepfakes have reached the point where voice cloning and video manipulation can deceive both humans and many automated verification systems. Security researchers have identified instances where attackers used AI-generated voice calls to impersonate executives and authorize fraudulent wire transfers worth millions of dollars. The widespread availability of this technology through commercial APIs and open-source models has lowered the barriers to entry, putting such sophisticated attacks within reach of a wide range of threat actors.

In response to the escalating threat landscape, organizations are increasingly adopting what Forvis Mazars refers to as “responsible AI defense.” This approach emphasizes balancing aggressive threat detection with ethical considerations regarding privacy, bias, and transparency. Responsible AI defense acknowledges that deploying AI defensively comes with its own risks, including algorithmic bias in threat assessment, privacy violations due to excessive monitoring, and the potential creation of fragile systems that may fail under novel attack patterns.

Organizations embracing responsible AI strategies are establishing governance frameworks to ensure human oversight of critical security decisions, even when AI provides recommendations. These frameworks often include regular audits of AI model performance, assessments for bias in threat detection algorithms, and clear escalation protocols when automated systems face ambiguous situations that require human judgment. This holistic approach extends beyond technical controls to encompass organizational culture, emphasizing the documentation of reasoning behind AI-driven security decisions and the need for explainability in their models.
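As a rough illustration of the escalation protocols and decision documentation described above, the following Python sketch routes an AI-generated verdict either to automated containment, to a human analyst, or to a log. Everything in it is hypothetical: the `Verdict` fields, the `triage` function, and the threshold values are assumptions chosen for the example, not part of any framework named by Forvis Mazars.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class Action(Enum):
    AUTO_CONTAIN = "auto_contain"
    ESCALATE_TO_HUMAN = "escalate_to_human"
    LOG_ONLY = "log_only"


@dataclass
class Verdict:
    """Hypothetical output of an AI detection model for one alert."""
    alert_id: str
    threat_score: float            # assumed to be a probability in [0, 1]
    rationale: str                 # model's explanation, kept for audits
    affects_critical_asset: bool = False


@dataclass
class AuditLog:
    """Keeps the reasoning behind every decision so it can be reviewed later."""
    entries: list = field(default_factory=list)

    def record(self, verdict: Verdict, action: Action) -> None:
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "alert_id": verdict.alert_id,
            "threat_score": verdict.threat_score,
            "rationale": verdict.rationale,
            "action": action.value,
        })


def triage(verdict: Verdict, log: AuditLog,
           low: float = 0.20, high: float = 0.95) -> Action:
    """Route a verdict; thresholds are illustrative assumptions."""
    if verdict.threat_score < low:
        # Clearly benign by the model's estimate: keep a record only.
        action = Action.LOG_ONLY
    elif verdict.affects_critical_asset or verdict.threat_score < high:
        # Ambiguous score, or a critical system is involved: a human decides.
        action = Action.ESCALATE_TO_HUMAN
    else:
        # High confidence on a non-critical asset: act automatically.
        action = Action.AUTO_CONTAIN
    log.record(verdict, action)
    return action


if __name__ == "__main__":
    log = AuditLog()
    sample = Verdict("alert-001", 0.62, "unusual login location")
    print(triage(sample, log).value)
```

The design choice worth noting is that automation is the exception rather than the default: any verdict that is ambiguous or touches a critical asset goes to an analyst, and every decision is recorded with the model's stated rationale so that later audits have something to examine.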

However, many organizations face significant integration challenges as they attempt to deploy AI-powered defenses amid aging legacy infrastructure. Numerous critical systems were designed prior to the advent of cloud computing and AI-driven threats, creating vulnerabilities that attackers are eager to exploit. Security teams must protect these outdated systems while simultaneously implementing cutting-edge AI tools that often necessitate modern data architectures and API integrations.

The technical debt accumulated from years of piecemeal security solutions has resulted in complex environments where the introduction of new AI tools can yield unexpected consequences. Organizations report investing substantial resources merely to map their existing security infrastructure before planning for AI integration, often uncovering shadow IT deployments and forgotten systems that present notable vulnerabilities.

While AI offers the potential to augment human capabilities and address the ongoing shortage of cybersecurity professionals, organizations are discovering that effective AI defense requires a new kind of security expert, one who combines traditional security knowledge with an understanding of machine learning and AI vulnerabilities. This hybrid skill set is rare and costly, exacerbating the existing talent shortage. Security operations centers are shifting toward human-AI collaboration models in which analysts focus on strategic threat hunting and incident investigation rather than routine monitoring. This transition requires significant investment in training and in recruiting personnel with interdisciplinary expertise.

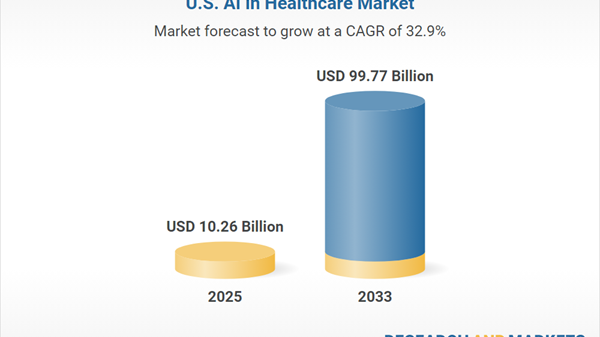

As regulatory frameworks surrounding AI continue to evolve, organizations must navigate a complex landscape of compliance requirements. The emergence of diverse regulations, such as the EU’s AI Act and various state-level initiatives in the United States, creates compliance challenges for organizations operating in multiple jurisdictions. Security teams now need to consider not just the technical effectiveness of AI-powered defenses but also their adherence to evolving legal standards regarding algorithmic transparency and data protection, particularly in sensitive sectors like healthcare and financial services.

Implementing comprehensive AI-powered security measures necessitates substantial capital investment in infrastructure and expertise. Organizations are tasked with weighing these expenditures against the potential costs of cyberattacks, leading to a complicated risk assessment that varies significantly across industries. Mid-sized organizations, in particular, face heightened challenges due to their limited resources compared to larger enterprises.

Despite these complexities, cloud-based AI security services have emerged as a viable option, enabling organizations to access advanced capabilities without massive upfront investments. However, these services come with their own concerns around data sovereignty and vendor lock-in. The rapid fragmentation of the AI security solutions market also complicates vendor selection, turning it into a high-stakes decision.

The ongoing evolution of AI in cybersecurity indicates that the contest between attackers and defenders will not stabilize anytime soon. As defensive AI systems grow more sophisticated, attackers are developing adversarial machine learning techniques aimed at evading them. This cat-and-mouse cycle is accelerating, with the time between the introduction of new defensive strategies and the arrival of offensive countermeasures shrinking significantly.

The organizations that thrive in this environment will be those that embrace continuous learning and adaptation, treating AI security as a long-term program that requires ongoing refinement. The framework of responsible AI defense provides a foundation for this approach, emphasizing the importance of human judgment and ethical considerations as automation handles a growing share of security operations. As 2026 advances, the organizations that effectively balance AI capabilities with human oversight and ethical responsibility will shape the future of enterprise cybersecurity.
