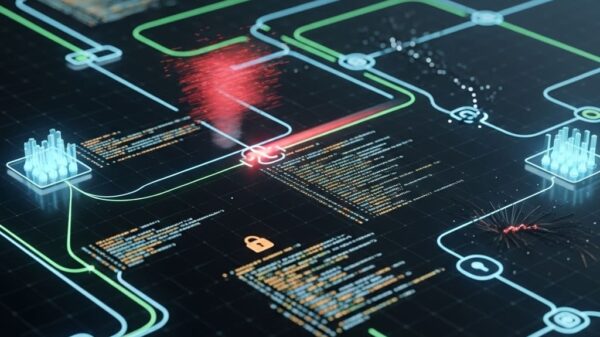

A global logistics company recently suffered a costly setback after deploying a sophisticated reinforcement learning model to optimize shipping routes. Simulations had promised a 12% reduction in fuel costs, but the real-world rollout failed badly and led to substantial financial losses. The root cause was not a flaw in the model itself but a mundane data pipeline error: a critical API supplying port congestion data had a 48-hour latency that the model could not account for. Working from stale data, the system directed container ships into historic storms and congested harbors, costing millions in damages.

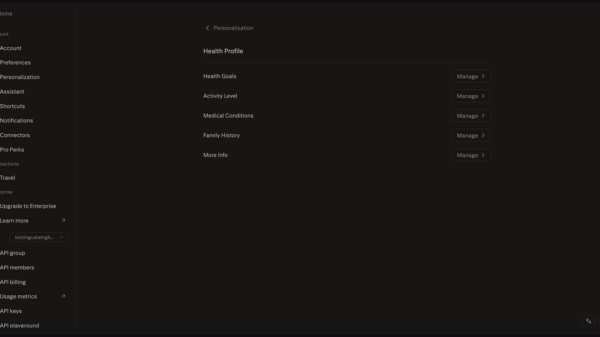

This incident reflects a broader trend observed in 2026: around 70% of delays in artificial intelligence (AI) projects are now attributed to data pipeline and operational integration issues rather than to model performance. According to the Fivetran Report 2025, access to foundation models is widespread, yet the gap between a promising prototype and a reliable production system has never been wider. As a result, organizations aiming to operationalize AI at scale are shifting their focus from better algorithms to a robust Assurance Stack: a layered infrastructure of validation, compliance, and resilience.

For years, AI development has focused predominantly on the models themselves. Yet an AI system is only as reliable as its weakest link, which often lies in the data pipeline: either in the data being ingested or in the actions executed on the basis of AI outputs. Whether the task is validating a voice assistant's natural language processing amid real-world noise or ensuring the accuracy of transactions on a financial risk platform, systemic assurance is critical. The Assurance Stack is emerging as a new source of competitive advantage, separating companies that merely experiment with AI from those that successfully deploy it in operational environments.

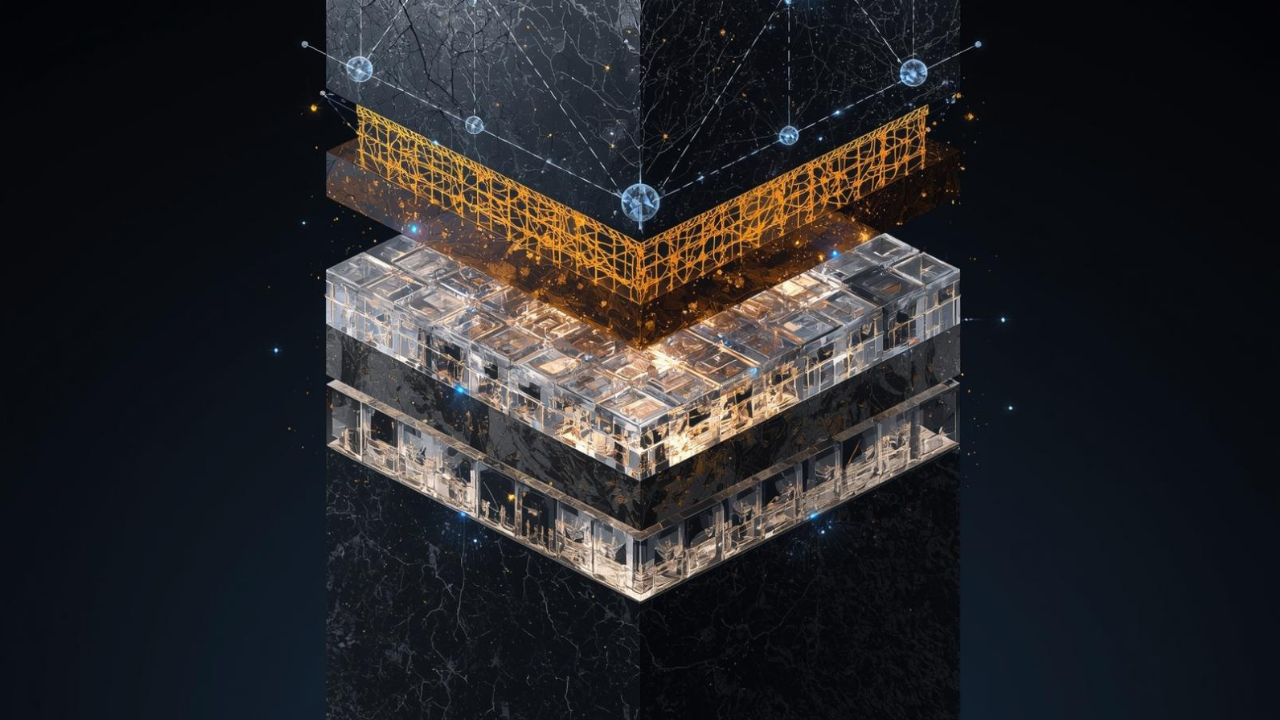

The Layers of Assurance

The first layer, Input Assurance, establishes a non-negotiable foundation before any model parameters are tuned. The industry adage “garbage in, garbage out” has turned into a more dangerous reality: “unverified data in, catastrophic decisions out.” For instance, an AI system that makes clinical recommendations is rendered useless if the patient vitals it receives are outdated or incorrectly formatted. This layer ensures data integrity, lineage, and fitness for purpose at the system level through practices such as robust schema management, real-time validation gates, and comprehensive observability of data flows.
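A real-time validation gate can be as simple as a function that rejects a record before it ever reaches the model. The Python sketch below checks schema, value range, and freshness for a hypothetical port-congestion feed like the one in the opening example; the field names, the 0 to 1 congestion scale, and the six-hour freshness budget are illustrative assumptions, not details of any real system.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Any

# Hypothetical schema for a port-congestion feed: field name -> expected type.
SCHEMA: dict[str, type] = {
    "port_id": str,
    "congestion_index": float,  # assumed scale: 0.0 (clear) to 1.0 (gridlocked)
    "observed_at": datetime,    # must be timezone-aware
}

# Hypothetical freshness budget: anything older is rejected, not used.
MAX_AGE = timedelta(hours=6)


@dataclass
class GateResult:
    accepted: bool
    errors: list[str]


def validate_record(record: dict[str, Any], now: datetime) -> GateResult:
    """Check schema, value range, and freshness before a record reaches the model."""
    errors: list[str] = []

    # 1. Schema: every expected field is present with the expected type.
    for name, expected in SCHEMA.items():
        if name not in record:
            errors.append(f"missing field: {name}")
        elif not isinstance(record[name], expected):
            errors.append(f"bad type for {name}: {type(record[name]).__name__}")

    # 2. Range: only checked once the value has the right type.
    index = record.get("congestion_index")
    if isinstance(index, float) and not 0.0 <= index <= 1.0:
        errors.append(f"congestion_index out of range: {index}")

    # 3. Freshness: a 48-hour-old reading fails here instead of steering ships.
    observed = record.get("observed_at")
    if isinstance(observed, datetime) and now - observed > MAX_AGE:
        errors.append(f"stale record: {now - observed} old")

    return GateResult(accepted=not errors, errors=errors)


now = datetime(2026, 1, 10, 12, 0, tzinfo=timezone.utc)
stale = {"port_id": "PORT-A", "congestion_index": 0.4,
         "observed_at": now - timedelta(hours=48)}
print(validate_record(stale, now))
# GateResult(accepted=False, errors=['stale record: 2 days, 0:00:00 old'])
```

Rejecting stale data outright is a deliberate choice in this sketch; a production gate might instead quarantine the record or fall back to a conservative default, depending on the cost of acting on old information.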

The second layer, Model & Context Assurance, addresses the intersection of AI performance and the complex realities of operational contexts. A model may achieve 99% accuracy on a controlled test set yet fail in operation if it cannot recognize a regional dialect or adapt to new fraud patterns. This layer emphasizes continuous testing against a wide variety of scenarios and building compliance standards directly into the model development lifecycle, so that the AI behaves consistently across platforms and interfaces.
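Continuous scenario testing often starts by breaking a single accuracy number into per-slice scores, because a rare scenario can fail badly while the aggregate still looks excellent. The Python sketch below uses a small synthetic test set (not real data) in which a hypothetical regional-dialect slice makes up 5% of the examples; the slice names and the 0.9 release threshold are assumptions for illustration.

```python
from collections import defaultdict
from typing import Iterable


def accuracy_by_slice(
    examples: Iterable[tuple[str, str, str]],
) -> tuple[float, dict[str, float]]:
    """Return overall accuracy and accuracy per scenario slice.

    Each example is (slice_name, prediction, label).
    """
    correct: defaultdict[str, int] = defaultdict(int)
    total: defaultdict[str, int] = defaultdict(int)
    for slice_name, prediction, label in examples:
        total[slice_name] += 1
        correct[slice_name] += int(prediction == label)
    overall = sum(correct.values()) / sum(total.values())
    per_slice = {name: correct[name] / total[name] for name in total}
    return overall, per_slice


def failing_slices(per_slice: dict[str, float], threshold: float) -> list[str]:
    """List slices whose accuracy falls below the release threshold."""
    return sorted(name for name, acc in per_slice.items() if acc < threshold)


# Synthetic test set: the common case dominates, the regional dialect is rare.
examples = (
    [("standard_dialect", "yes", "yes")] * 95
    + [("regional_dialect", "no", "yes")] * 4
    + [("regional_dialect", "yes", "yes")]
)
overall, per_slice = accuracy_by_slice(examples)
print(overall)                         # 0.96
print(per_slice)                       # {'standard_dialect': 1.0, 'regional_dialect': 0.2}
print(failing_slices(per_slice, 0.9))  # ['regional_dialect']
```

The headline figure of 96% would pass most reviews, while the per-slice view shows the model failing four out of five regional-dialect inputs; gating releases on the worst slice rather than the average is one way to surface that kind of operational failure before deployment.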

The final layer, Output & Action Assurance, is crucial because it governs the transition from AI inference to real-world action. Even if a model correctly flags an insurance claim as anomalous, the benefit is lost if that alert gets buried in a legacy ticketing system or automatically triggers a denial without an adequate explanation. This layer focuses on producing structured outputs that include decision rationales, routing high-stakes recommendations through governed action gateways, and establishing feedback loops that continuously improve the model.
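A governed action gateway can be sketched as a router that sits between the model and any system that acts on its output. In the hypothetical Python example below, every recommendation must carry a rationale, high-stakes actions (here, claim denials) are held for human review, each routing decision is written to an audit log, and reviewer outcomes are kept as feedback for retraining. The class names, the `HIGH_STAKES_ACTIONS` policy, and the claim IDs are illustrative assumptions, not a reference design.

```python
from dataclasses import dataclass, field
from enum import Enum


class Route(Enum):
    AUTO = "auto"                  # executed without a person in the loop
    HUMAN_REVIEW = "human_review"  # held until a reviewer decides


@dataclass
class Decision:
    """Structured model output: the recommendation plus the reasons behind it."""
    claim_id: str
    action: str            # e.g. "approve" or "deny"
    anomaly_score: float   # carried through for the reviewer and the audit trail
    rationale: list[str] = field(default_factory=list)


# Hypothetical policy: actions that must never execute automatically.
HIGH_STAKES_ACTIONS = {"deny"}


@dataclass
class ActionGateway:
    audit_log: list[tuple[str, str, str]] = field(default_factory=list)
    review_queue: list[Decision] = field(default_factory=list)
    feedback: list[tuple[str, str, str]] = field(default_factory=list)

    def submit(self, decision: Decision) -> Route:
        """Route a decision and record why it was routed that way."""
        if not decision.rationale:
            reason = "missing rationale"
        elif decision.action in HIGH_STAKES_ACTIONS:
            reason = "high-stakes action"
        else:
            reason = ""
        route = Route.HUMAN_REVIEW if reason else Route.AUTO
        if route is Route.HUMAN_REVIEW:
            self.review_queue.append(decision)
        self.audit_log.append((decision.claim_id, route.value, reason or "auto"))
        return route

    def record_review(self, claim_id: str, final_action: str) -> None:
        """Close the loop: keep (claim, model action, reviewer action) for retraining."""
        for decision in self.review_queue:
            if decision.claim_id == claim_id:
                self.feedback.append((claim_id, decision.action, final_action))
                self.review_queue.remove(decision)
                return  # stop iterating right after mutating the list
        raise KeyError(claim_id)


gateway = ActionGateway()
print(gateway.submit(Decision("C-1", "approve", 0.12, ["amount within usual range"])))
print(gateway.submit(Decision("C-2", "deny", 0.93, ["provider billed twice"])))
print(gateway.submit(Decision("C-3", "approve", 0.40)))
gateway.record_review("C-2", "approve")
print(gateway.feedback)
# Route.AUTO
# Route.HUMAN_REVIEW
# Route.HUMAN_REVIEW
# [('C-2', 'deny', 'approve')]
```

Enforcing the rationale requirement in the gateway, rather than trusting every upstream model to supply one, means an unexplained recommendation cannot execute automatically in this design, and the reviewer's override of claim C-2 becomes a labeled example for the next training cycle.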

The overarching insight of 2026 is that these three layers of assurance cannot be treated as isolated silos. Teams responsible for model validation must collaborate closely with those managing the data pipelines. Moreover, any change in compliance regulations must cascade uniformly across input schemas, model fairness tests, and audit logs. Building an effective Assurance Stack therefore demands an interdisciplinary approach that blends the rigor of Site Reliability Engineering with the practices of MLOps and Model Governance.

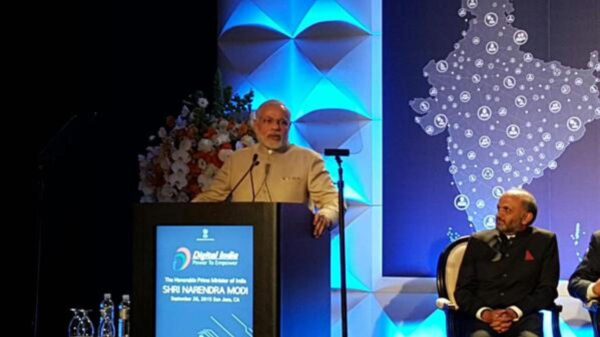

The organizations poised for success are those that recognize that the bottleneck in AI has shifted from a lack of intelligence to a scarcity of trustworthy integration. The future belongs not to those with the most sophisticated models, but to those who cultivate resilient, transparent, and assured pipelines—from raw data to actionable outcomes. This Assurance Stack is quickly becoming indispensable for the era of applied AI.
