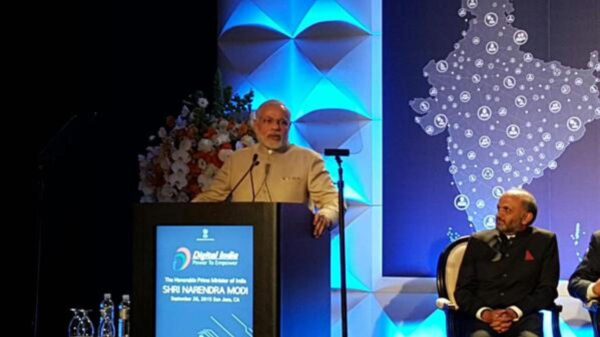

A sanctioned Chinese hacking group known as APT31 used Google's AI chatbot, Gemini, to identify vulnerabilities and plan cyberattacks against U.S. organizations, according to Google. Although there is no evidence that any of these operations succeeded, John Hultquist, chief analyst at the Google Threat Intelligence Group, said such groups are continuing to experiment with AI for semi-autonomous offensive operations. "We anticipate that China-based actors in particular will continue to build agentic approaches for cyber offensive scale," he said.

The activity is detailed in Google's latest AI Threat Tracker report, which was shared in advance with The Register. APT31, which also operates under aliases including Violet Typhoon, Zirconium, and Judgment Panda, has previously exploited vulnerabilities in Microsoft SharePoint. It was hit with U.S. sanctions in March 2024, after seven of its members were criminally charged with hacking high-profile targets.

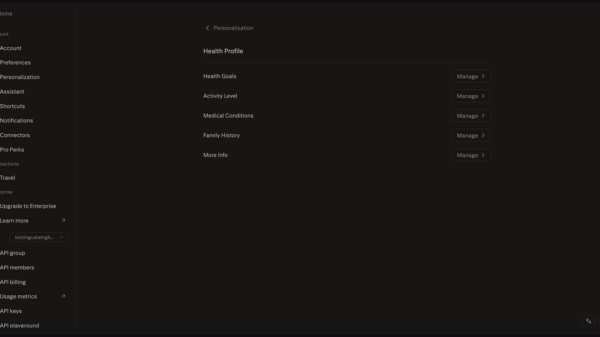

The group's Gemini activity took place late last year. According to the report, APT31 took a structured approach, prompting Gemini to adopt a cybersecurity-expert persona so it could automate vulnerability analysis and generate targeted testing plans. The goal was to make the group's operations more efficient, a shift toward automation that Hultquist described as "the next shoe to drop."

In one notable instance, APT31 used Hexstrike, an open-source tool that relies on the Model Context Protocol (MCP), to evaluate various exploits against U.S. targets. Hexstrike can drive more than 150 security tools for tasks such as network scanning and penetration testing. It was originally built to help ethical hackers find vulnerabilities, but soon after its release in mid-August, criminal actors began repurposing it for malicious activity.

Pairing Hexstrike with Gemini allowed APT31 to automate intelligence gathering and pinpoint technical vulnerabilities and weaknesses in organizational defenses. According to Google's report, this blurs the line between routine security assessments and targeted malicious reconnaissance. In response to the misuse, Google has disabled accounts associated with the campaign.

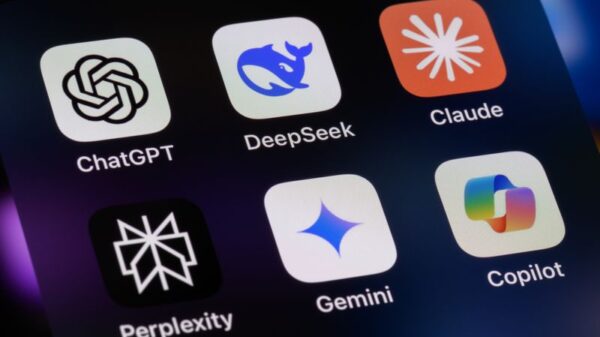

Hultquist outlined two main concerns arising from this trend. First, adversaries that can move through the intrusion cycle with less human intervention can act faster than defenders can respond. He pointed to an earlier report that Chinese cyberspies had used Anthropic's Claude Code AI tool to automate various stages of attacks, succeeding in some cases.

Second, automating vulnerability exploitation lets adversaries attack more efficiently and at greater scale, hitting multiple victims with minimal human oversight. This acceleration raises the stakes for defenders and widens the "patch gap," the time it takes to deploy fixes after a vulnerability is discovered. "In some organizations, it takes weeks to implement defenses," Hultquist said, stressing that security professionals need to rethink their strategies. Using AI to respond to and fix security weaknesses faster than traditional human-driven processes allow, he said, is becoming imperative.

The report also noted a spike in model extraction attempts, known as "distillation attacks," in which attackers query an AI model at scale to learn how it behaves, potentially allowing them to replicate valuable technology. Hultquist said these attempts come from a range of threat actors around the world, highlighting the intellectual property risk that AI models carry. "Your model is really valuable IP, and if you can distill the logic behind it, you can replicate that technology," he said.

As AI technologies evolve, their misuse by state-sponsored actors and cybercriminals presents a persistent challenge. Google's findings indicate that both government-backed groups and private-sector actors are keen to use AI to enhance their operations, which puts pressure on defenders to adopt security measures that can keep pace with rapidly changing threats.
