Researchers at the University of California, Berkeley, have unveiled a new approach to improving the reliability of machine learning models. To address the trustworthiness of learned models, the team proposes a new class of models called **Self-Proving models**. These models prove the correctness of their own outputs to a verification algorithm through a mechanism known as an Interactive Proof, an approach that could change how the correctness of AI systems is verified.

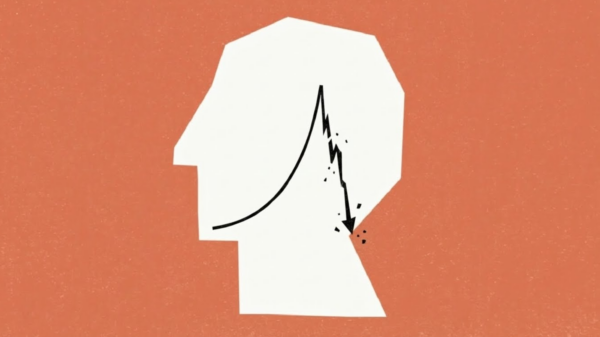

Traditionally, the accuracy of a machine learning model has been measured as an average over a distribution of inputs, which says nothing about whether the output on any particular input is correct. This lack of per-input guarantees raises concerns in high-stakes applications such as healthcare and autonomous vehicles, where a single incorrect output can have severe consequences. The Berkeley team proposes models that not only generate outputs but also prove their correctness to a verification algorithm, denoted **V**.

A Self-Proving model is defined with respect to a verifier V and an input distribution: with high probability over inputs drawn from that distribution, the model both generates a correct output and successfully proves its correctness to V. The guarantee for individual outputs comes from the soundness of V: on any input, V rejects an incorrect output with high probability, regardless of the proof offered for it. As a result, when V accepts, the user can be confident that this particular output is correct, and when the model errs, V flags the error instead of letting it pass unnoticed.
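To make the definition concrete, here is a minimal sketch built on a toy task of our own choosing (it is not described in the article): computing gcd(a, b) for positive integers. The hypothetical verifier `verify` accepts a claimed answer d only if d is a positive common divisor of a and b and the accompanying proof (u, v) satisfies the Bézout identity u·a + v·b = d. This is a single-message proof, the simplest special case of an interactive proof. The hypothetical `honest_prover` plays the role of a perfect Self-Proving model, using the extended Euclidean algorithm to produce both the answer and its proof.

```python
Proof = tuple[int, int]  # hypothetical proof format: Bezout coefficients (u, v)


def verify(a: int, b: int, d: int, proof: Proof) -> bool:
    """Toy verifier V for the claim "d = gcd(a, b)" with a, b positive.

    Accepts iff d is a positive common divisor of a and b and the proof
    satisfies u*a + v*b == d. A positive common divisor that is also an
    integer combination of a and b must equal gcd(a, b), so no proof can
    make V accept a wrong d (perfect soundness for this toy task).
    """
    u, v = proof
    return d > 0 and a % d == 0 and b % d == 0 and u * a + v * b == d


def honest_prover(a: int, b: int) -> tuple[int, Proof]:
    """Extended Euclidean algorithm: returns (gcd, (u, v)) with u*a + v*b == gcd."""
    # Invariants: old_r == old_u*a + old_v*b and r == u*a + v*b.
    old_r, r = a, b
    old_u, u = 1, 0
    old_v, v = 0, 1
    while r != 0:
        q = old_r // r
        old_r, r = r, old_r - q * r
        old_u, u = u, old_u - q * u
        old_v, v = v, old_v - q * v
    return old_r, (old_u, old_v)


if __name__ == "__main__":
    d, proof = honest_prover(240, 46)
    print(d, proof, verify(240, 46, d, proof))  # 2 (-9, 47) True
    # A wrong answer is rejected whatever proof accompanies it.
    print(verify(240, 46, 4, proof))  # False (4 does not divide 46)
```

Soundness is what makes acceptance meaningful in this sketch: because every integer combination of a and b is a multiple of gcd(a, b), no choice of (u, v) can make `verify` accept any answer other than the true gcd.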

The research presents two methods for training Self-Proving models. The first, **Transcript Learning (TL)**, trains the model on transcripts of interactions that the verifier accepted, so the model learns to reproduce successful proofs along with correct outputs. The second, **Reinforcement Learning from Verifier Feedback (RLVF)**, trains the model by having it emulate interactions with the verifier and using the verifier's accept or reject decision as feedback, gradually improving its performance.
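Both training methods can be illustrated on the same toy GCD task. The sketch below is a deliberately simplified stand-in with hypothetical names throughout, not the study's actual training procedure. For TL, the "model" is a lookup table fitted only to transcripts that the verifier accepted, in place of supervised training of a neural network. For RLVF, the "model" is a softmax policy over a small, made-up set of candidate (answer, proof) pairs, updated with a REINFORCE-style policy gradient (our choice of RL algorithm) whose only reward is whether `verify` accepts.

```python
import math
import random

Proof = tuple[int, int]  # hypothetical proof format: Bezout coefficients (u, v)


def verify(a: int, b: int, d: int, proof: Proof) -> bool:
    """Toy verifier: accept iff d > 0 divides a and b and u*a + v*b == d."""
    u, v = proof
    return d > 0 and a % d == 0 and b % d == 0 and u * a + v * b == d


def honest_prover(a: int, b: int) -> tuple[int, Proof]:
    """Extended Euclidean algorithm: returns (gcd, (u, v)) with u*a + v*b == gcd."""
    old_r, r, old_u, u, old_v, v = a, b, 1, 0, 0, 1
    while r != 0:
        q = old_r // r
        old_r, r = r, old_r - q * r
        old_u, u = u, old_u - q * u
        old_v, v = v, old_v - q * v
    return old_r, (old_u, old_v)


def transcript_learning(inputs):
    """Toy TL: fit a lookup-table "model" to transcripts that V accepted."""
    model = {}
    for a, b in inputs:
        d, proof = honest_prover(a, b)  # transcript produced by an honest prover
        if verify(a, b, d, proof):      # keep only accepted transcripts
            model[(a, b)] = (d, proof)  # "training" is memorization in this sketch
    return model


def candidates(a: int, b: int):
    """Hypothetical action space: three corrupted (answer, proof) pairs, then the honest one."""
    d, (u, v) = honest_prover(a, b)
    return [(d + 1, (u, v)), (d, (u + 1, v)), (2 * d, (u, v)), (d, (u, v))]


def rlvf(inputs, steps=2000, lr=0.5, seed=0):
    """Toy RLVF: the verifier's accept/reject bit is the only training signal."""
    rng = random.Random(seed)
    logits = {x: [0.0] * len(candidates(*x)) for x in inputs}
    for _ in range(steps):
        x = rng.choice(inputs)
        acts, z = candidates(*x), logits[x]
        # Softmax policy over the candidates (shifted by the max for stability).
        m = max(z)
        w = [math.exp(t - m) for t in z]
        s = sum(w)
        probs = [t / s for t in w]
        i = rng.choices(range(len(acts)), weights=probs)[0]
        d, proof = acts[i]
        reward = 1.0 if verify(*x, d, proof) else 0.0
        # REINFORCE without a baseline: d log pi(i) / d logit_j = 1[j == i] - probs[j].
        for j in range(len(z)):
            z[j] += lr * reward * ((1.0 if j == i else 0.0) - probs[j])
    # Greedy policy. With untrained (all-zero) logits, ties pick index 0, a corrupted
    # answer, so accepted greedy outputs reflect what the verifier feedback taught.
    return {x: candidates(*x)[max(range(len(z)), key=z.__getitem__)] for x, z in logits.items()}


if __name__ == "__main__":
    data = [(240, 46), (12, 18), (35, 64), (100, 75)]
    for name, model in [("TL", transcript_learning(data)), ("RLVF", rlvf(data))]:
        print(name, all(verify(a, b, *model[(a, b)]) for a, b in data))
```

The contrast the sketch is meant to show: TL needs accepted transcripts up front (here generated by an honest prover), while RLVF in this sketch needs only the ability to run the verifier, since the accept or reject bit is its entire reward.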

This approach targets long-standing problems of accountability and transparency in AI. Because each output comes with a proof of its correctness, Self-Proving models could reduce the risks of deploying AI systems in sensitive domains. In medical diagnostics, for instance, such systems could give practitioners assurance that the specific outputs they rely on have been verified, strengthening trust in AI-assisted technologies.

The potential applications of Self-Proving models extend beyond healthcare. Industries such as finance, where trust in algorithms is paramount, could also benefit significantly. As machine learning spreads into more sectors, mechanisms that guarantee the correctness of individual model outputs become increasingly important.

In summary, the research from UC Berkeley represents a significant step toward trustworthy AI systems. By building on the principles of Interactive Proofs, Self-Proving models could raise the standard of reliability in machine learning and encourage wider acceptance and deployment of AI across industries. As these models mature, they may improve the integrity of AI outputs and support a more ethical and responsible use of artificial intelligence.
