Artificial intelligence is making cyberattacks cheaper and more accessible for malicious actors, according to a recent study by cloud security startup Wiz and AI security lab Irregular. The findings point to a significant shift in the economics of cybercrime: AI-driven attacks can now be executed for as little as $50, compared with the nearly $100,000 typically spent on human-driven efforts to identify security vulnerabilities.

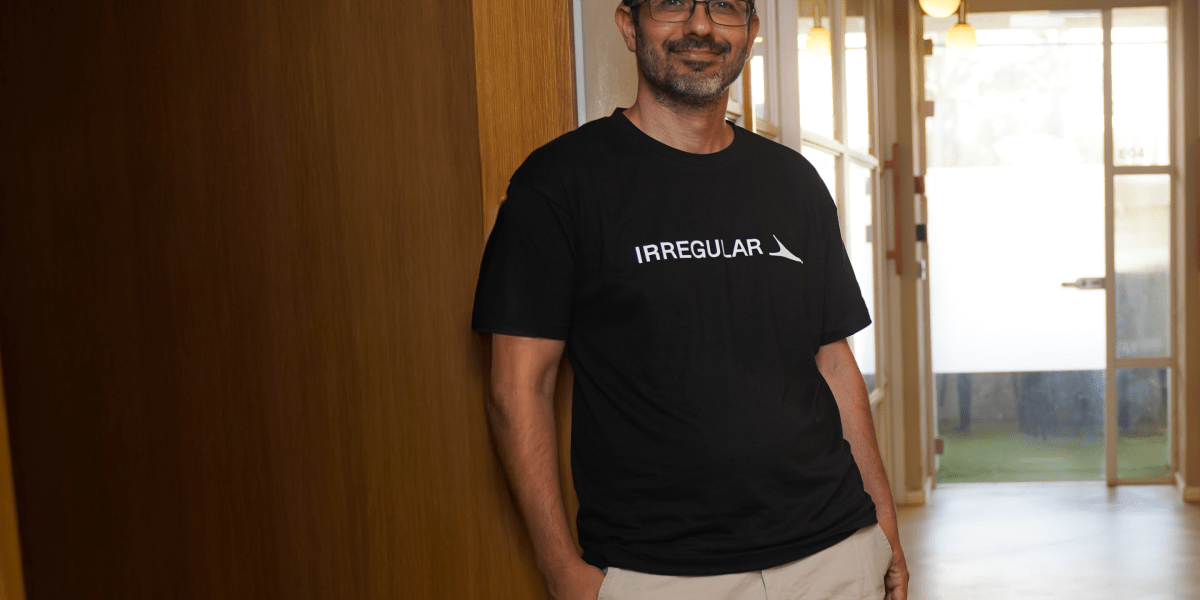

In an interview, Gal Nagli, head of threat exposure at Wiz, and Omer Nevo, cofounder and CTO of Irregular, described the implications of their joint research, which challenges conventional assumptions about what cyberattacks cost. In controlled environments, AI agents completed complex offensive security tasks, solving 90% of the real-world attack scenarios modeled in the tests. Nevo noted that even seasoned cybersecurity professionals were taken aback by how quickly AI models have advanced, particularly in handling multi-step challenges without losing focus. “We’re seeing more and more that models are able to solve challenges that are genuine expert level,” he said.

The rise of affordable cyber threats is compounded by the growing number of non-technical employees who build applications with accessible coding tools such as Anthropic’s Claude Code and OpenAI’s Codex. As Nagli pointed out, these employees often lack basic security knowledge, creating a vast attack surface that cybercriminals are poised to exploit. “They don’t know anything about security,” he explained, “and they use sensitive data exposed to the public Internet, and then they are super easy to exploit.”

The research indicates that cost no longer constrains the traditional cat-and-mouse game of cybersecurity. Attackers no longer need to be selective about their targets; AI-driven tools let them probe numerous systems for vulnerabilities for just a few dollars. Under this new economic reality, every exposed system is a potential target, raising the stakes for organizations of every size.

Although the study found that performance dropped and costs doubled under more realistic conditions, the overall message is clear: cyberattacks are becoming cheaper and faster to deploy. Nevo cautioned that if AI can conduct sophisticated attacks at scale, the potential for harm could grow dramatically. “If we reach the point where AI is able to conduct sophisticated attacks, and it’s able to do that at scale, suddenly a lot more people will be exposed,” he warned, underscoring the need for greater cybersecurity awareness across industries.

Given this evolving threat landscape, integrating AI into defensive strategies is increasingly important. Nevo raised a pointed question: “Are we helping defenders utilize AI fast enough to be able to keep up with what offensive actors are already doing?” Closing that gap is urgent as organizations navigate a new era of technological vulnerability.

In other AI-related news, a U.S. lawmaker has raised concerns about Nvidia’s alleged assistance to the Chinese AI startup DeepSeek, suggesting that the company helped refine AI models later used by the Chinese military. The allegation has sparked discussion of stricter export controls to prevent American technology from being repurposed for military applications.

Separately, Dow Chemical announced plans to cut 4,500 jobs as part of an AI-driven overhaul aimed at improving productivity amid rising costs and declining revenue. The company expects its “Transform to Outperform” initiative to generate significant operating earnings while incurring considerable one-time charges, including severance costs. The move follows a broader wave of corporate layoffs as companies across industries pivot toward AI and automation.

Finally, Anthropic has undertaken a controversial effort called “Project Panama,” which involves destructively scanning millions of books to gather data for training AI models. Internal documents suggest that the company has invested heavily in acquiring physical books, raising ethical questions about copyright and about how far AI companies will go to secure high-quality data.

As AI continues to transform cybersecurity, the balance between offensive and defensive capabilities will shape future strategies and policies. The implications extend well beyond corporate boardrooms, signaling a need for broad awareness and adaptation in a world increasingly exposed to AI-driven threats.
