State-sponsored hackers are increasingly using advanced artificial intelligence tools, including Google’s Gemini, to enhance their cyberattack strategies. A recent report from Google’s Threat Intelligence Group (GTIG) indicates that these actors, primarily from Iran, North Korea, China, and Russia, have integrated AI into multiple stages of the attack lifecycle, including reconnaissance, social engineering, and malware development. The findings come from GTIG’s quarterly report on the threat landscape as of the fourth quarter of 2025.

The report underscores how central large language models have become to these groups. In the researchers’ words, “For government-backed threat actors, large language models have become essential tools for technical research, targeting, and the rapid generation of nuanced phishing lures.”

One group using these tools is the Iranian hacking group APT42, which reportedly used Gemini to support reconnaissance and targeted social engineering. The group created legitimate-looking email addresses and researched its targets thoroughly to build credible pretexts for engaging them. Crafting believable personas and scenarios improved APT42’s chances of success, and the natural language variations in its messages helped it evade traditional phishing detection measures.

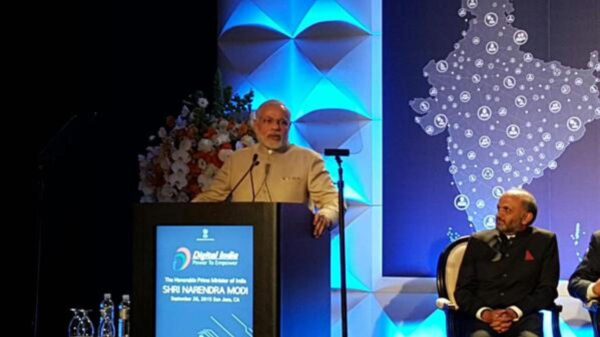

Similarly, the North Korean actor UNC2970, which targets the defense sector and impersonates corporate recruiters, has used Gemini to profile high-value targets. Its reconnaissance included gathering information about major cybersecurity and defense firms, mapping specific technical job roles, and even collecting salary data. GTIG noted that this activity blurs the line between routine professional research and malicious intent, as the group uses it to build tailored, high-fidelity phishing personas.

Beyond operational misuse, the report highlighted a rise in model extraction attempts, or “distillation attacks,” aimed at stealing intellectual property from AI models. One notable campaign targeting Gemini’s reasoning capabilities involved more than 100,000 prompts designed to elicit the model’s reasoning. The scope of these prompts suggests an effort to replicate Gemini’s reasoning in non-English languages, which would broaden the potential impact of such attacks.

GTIG has not observed advanced persistent threat (APT) actors directly attacking frontier models, but it has identified numerous model extraction attempts by private-sector entities around the world, as well as by researchers seeking to clone proprietary logic. Google’s systems detected these attempts in real time, and the company has implemented defenses to protect the models’ internal reasoning.

The report also documented the emergence of AI-integrated malware. One example, HONESTCUE, calls Gemini’s API to generate its functionality. It operates as a downloader and launcher framework: it sends prompts to the API and receives C# source code in response. Its second stage compiles and executes payloads directly in memory, without writing them to disk, and the malware uses a complex obfuscation technique to evade traditional network-based detection.

GTIG also identified COINBAIT, a phishing kit whose development was likely accelerated by AI code-generation tools. Built with the AI-powered platform Lovable AI, the kit masquerades as a major cryptocurrency exchange to harvest credentials.

In a novel social engineering campaign observed in December 2025, threat actors abused the public sharing features of generative AI services, including Gemini and ChatGPT, to host deceptive content. The actors manipulated AI models into producing seemingly benign instructions for common computer tasks with malicious command-line scripts embedded in them, then used the shared pages to distribute ATOMIC, malware that targets macOS systems.

In underground marketplaces, GTIG found persistent demand for AI-enabled tools and services. State-sponsored hackers and cybercriminals alike, however, often struggle to develop their own AI models and instead rely on commercial products accessed through stolen credentials. One kit, dubbed Xanthorox, was marketed as a custom AI for autonomous malware generation but was actually built on several commercial AI products, including Gemini.

In response, Google has disabled accounts and assets associated with the identified malicious activity and has used the resulting intelligence to improve its classifiers and models against future misuse. “We are committed to developing AI boldly and responsibly, which means taking proactive steps to disrupt malicious activities,” the report stated.

The findings are a reminder for enterprise security teams to strengthen defenses against AI-augmented social engineering and reconnaissance operations, particularly in the Asia-Pacific region, where Chinese and North Korean state-sponsored actors remain active. The intersection of AI and cybersecurity will likely remain a focal point as attackers and defenders compete for the upper hand.