NEW YORK – In a brightly lit classroom in suburban Ohio, a seventh-grade teacher uses an AI-powered platform to generate personalized math problems for her students, a tool designed to close learning gaps and free up time for one-on-one instruction. This scenario is becoming increasingly common across the United States as school districts, buoyed by post-pandemic technology budgets and grappling with teacher shortages, race to incorporate artificial intelligence into daily instruction. Tech giants and an emerging wave of EdTech startups are promoting AI as a solution for everything from administrative burdens to tailored learning experiences.

However, a growing coalition of educators, civil rights advocates, and policy experts is issuing a stark warning: the rapid adoption of these powerful but untested technologies may introduce significant risks into the nation’s schools. Recent analyses highlight potential harms—such as pervasive student surveillance, algorithmic bias, and the erosion of critical thinking skills—that could outweigh the promised benefits. The discourse has shifted from fears of cheating to profound questions about the essence of learning in an era dominated by intelligent machines.

At the core of these concerns lies the data that powers AI systems. A comprehensive report from the Center for Democracy & Technology (CDT) argues that the allure of AI in education can be misleading, often obscuring serious risks to students. The report, titled “Hidden Harms: The Misleading Promise of AI in Education,” describes how AI tools can systematically disadvantage students from marginalized backgrounds. For instance, automated essay graders can favor language patterns typical of affluent, native English speakers, while AI-driven proctoring software might misread the physical tics of students with disabilities as signs of cheating, or fail to properly detect the faces of Black and brown students and flag them as suspicious.

This problem of embedded prejudice is not merely hypothetical. Many AI systems are trained on extensive datasets that reflect existing societal biases, and when applied in educational contexts, they can perpetuate and exacerbate inequities. The American Civil Liberties Union has cautioned that, without stringent oversight, AI tools could create discriminatory feedback loops that further penalize already disadvantaged students, as outlined in its report, “How Artificial Intelligence Can Deepen Racial and Economic Inequity.” For school administrators, this creates a significant legal and ethical challenge: ensuring that tools intended to help students do not inadvertently harm some of them.

In addition to bias and privacy issues, some educators are voicing concerns about the long-term pedagogical consequences of relying on machines for cognitive tasks. While AI can help students organize their thoughts or check their grammar, over-reliance on generative AI for writing and problem-solving may undermine the very competencies that education aims to cultivate: critical thinking, intellectual struggle, and creative synthesis. When students can generate a satisfactory five-paragraph essay with a simple prompt, their motivation to learn the underlying processes of research, argumentation, and composition may diminish.

This challenge is further complicated by the “black box” nature of many AI models. Often, neither teachers nor students fully understand how the AI arrived at a particular conclusion, whether a grade on an assignment or a recommended learning path. This opacity runs counter to a basic educational principle: showing one’s work. The U.S. Department of Education, in its report “Artificial Intelligence and the Future of Teaching and Learning,” recognizes the potential advantages of AI but strongly advocates keeping a “human in the loop” for all significant educational decisions, underscoring that technology should complement, not replace, the essential role of educators.

Global Perspectives and Local Implications

The concerns echoing through American classrooms are part of a wider, global dialogue. UNESCO, the United Nations’ educational and cultural agency, has issued its own warnings, urging governments and educational institutions to prioritize safety, inclusion, and equity in their AI strategies. Its “Guidance for generative AI in education and research” promotes a human-centered approach, cautioning against a technologically deterministic perspective that prioritizes efficiency over human development. This guidance highlights the risk of standardizing education and undermining the cultural and linguistic diversity that human educators bring to their classrooms.

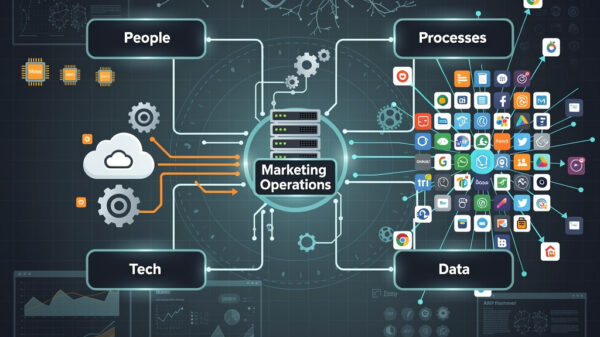

This international viewpoint emphasizes the gravity of the situation. Decisions made today in procurement offices from Los Angeles to Long Island will have lasting repercussions for future generations of students. As districts enter multi-year contracts with EdTech vendors, they are not merely acquiring software; they are endorsing a specific vision for the future of learning. Critics contend that without a more robust framework for evaluating these tools for effectiveness, bias, and safety, schools are subjecting students to a large-scale, high-stakes experiment.

The rise of AI tools also risks creating a new and insidious digital divide. Wealthier districts can afford to invest in quality AI platforms and, crucially, in the professional development required for effective implementation. Underfunded districts, by contrast, may resort to free, ad-supported versions with weaker privacy protections and may lack the resources to train teachers adequately, ultimately widening the achievement gap these technologies are purported to close.

A counter-movement advocating for “digital sanity” is emerging among educators and parent groups. This initiative promotes a more thoughtful and critical approach to technology implementation. As noted by EdSurge, this movement is not anti-technology but pro-pedagogy, insisting that any new tool—AI or otherwise—must demonstrate clear educational value before being introduced to students. Advocates urge district leaders to slow down, pilot programs on a smaller scale, and involve teachers and parents in the decision-making process instead of accepting top-down mandates driven by vendor marketing.

As the tension between the transformative potential of AI and its documented dangers continues to grow, school leaders find themselves in a difficult position. An outright ban on AI seems impractical in a world where the technology is increasingly prevalent. Yet moving forward with unchecked optimism risks compromising their responsibility to protect students. The primary task for districts is to shift from mere adoption to rigorous accountability.

This involves demanding difficult answers from vendors: How was your algorithm trained? What measures have been implemented to mitigate bias? Where is student data stored, who has access to it, and how is it used? Ultimately, the integration of AI in schools must not be merely a technological imperative; it should be an educational commitment, guided by fundamental principles of equity, safety, and the enduring objective of nurturing thoughtful, capable, and creative human beings.