Researchers from Huawei Noah’s Ark Lab and Peking University are examining Diffusion Language Models (DLMs), an approach that moves away from traditional auto-regressive models, which generate text one token at a time, toward bidirectional refinement of a whole sequence. In their latest study, led by Yunhe Wang, Kai Han, and Huiling Zhen, the team identifies ten challenges that currently keep DLMs from reaching their full capabilities, with the aim of developing models that could outperform established systems like GPT-4.

The researchers identify several key obstacles, including architectural constraints and issues with gradient sparsity, which affect the models’ capabilities in complex reasoning. They propose a four-pillar roadmap focusing on foundational infrastructure, algorithmic optimization, cognitive reasoning, and unified intelligence, advocating for a transition to a “diffusion-native” ecosystem. This shift aims to facilitate next-generation language models that can adeptly perform dynamic self-correction and possess a sophisticated understanding of structure.

One of the innovations discussed is multi-scale tokenization, which supports non-sequential generation and flexible text editing. This contrasts sharply with the causal horizon of traditional models, which can only condition on text to the left of the current position. The researchers contend that current DLMs are constrained by frameworks inherited from auto-regressive models, which limits their efficiency and reasoning capabilities. DLMs model the data distribution by iteratively adding noise to the original data and learning to reverse that corruption, and the findings suggest that refining this modeling can improve performance.
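To make the noising step concrete, here is a minimal Python sketch of one common way to corrupt discrete text: replacing tokens with a mask symbol. The article does not say which corruption the study assumes, so the `[MASK]` symbol, the `add_mask_noise` name, and the noise level `t` are illustrative assumptions.

```python
import random

MASK = "[MASK]"  # hypothetical mask symbol, not taken from the study


def add_mask_noise(tokens, t, rng):
    """Replace each token with MASK independently with probability t.

    t = 0.0 leaves the text untouched; t = 1.0 masks every token.
    """
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must be in [0, 1]")
    return [MASK if rng.random() < t else tok for tok in tokens]


tokens = "the cat sat on the mat".split()
rng = random.Random(0)
for t in (0.0, 0.5, 1.0):
    # Higher noise levels hide more of the original sentence.
    print(t, add_mask_noise(tokens, t, rng))
```

A model trained on such pairs learns to recover the original tokens from the corrupted ones, which is the "denoising" direction.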

Despite their theoretical advantages, adapting diffusion techniques to the discrete domain of language presents unique challenges, particularly in defining concepts such as “noise” and “denoising” for structured text. The research emphasizes that establishing a native ecosystem, tailored for iterative non-causal refinement, is essential for unlocking the potential of DLMs. It also points to the need for rethinking foundational infrastructure, advocating for architectures that prioritize inference efficiency beyond traditional techniques, such as key-value caching.
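The following Python sketch illustrates iterative non-causal refinement with a toy example. It is not the study's algorithm: the confidence-ranked unmasking schedule, the `iterative_unmask` function, and the `predict` callback are assumptions chosen for illustration. Because every step re-reads the whole canvas, results cached from earlier steps cannot simply be reused the way a key-value cache is reused in left-to-right decoding.

```python
import math

MASK = "[MASK]"  # hypothetical mask symbol


def iterative_unmask(length, predict, n_steps):
    """Fill a fully masked canvas over n_steps refinement passes.

    predict(canvas, i) must return (token, confidence) for position i.
    Each pass commits the most confident guesses, in any order of position.
    """
    if n_steps < 1:
        raise ValueError("n_steps must be at least 1")
    canvas = [MASK] * length
    for step in range(n_steps):
        masked = [i for i, tok in enumerate(canvas) if tok == MASK]
        if not masked:
            break
        # Spread the remaining masked positions evenly over the remaining passes.
        k = math.ceil(len(masked) / (n_steps - step))
        guesses = {i: predict(canvas, i) for i in masked}
        best = sorted(masked, key=lambda i: guesses[i][1], reverse=True)[:k]
        for i in best:
            canvas[i] = guesses[i][0]
    return canvas


# Toy stand-in for a trained denoiser: it knows the answer and is
# more confident about earlier positions.
target = "diffusion models refine whole sequences".split()


def toy_predict(canvas, i):
    return target[i], 1.0 / (1 + i)


print(iterative_unmask(len(target), toy_predict, n_steps=3))
```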

Moreover, existing tokenization methods, such as Byte Pair Encoding (BPE), have been found lacking in structural hierarchy, which is a crucial aspect of human thought. The study emphasizes the importance of multi-scale tokenization, enabling DLMs to effectively balance computational resources between semantic structuring and lexical refinement. However, experiments have shown that current DLMs face challenges with inference throughput, particularly for tasks requiring multiple revisions of evolving data. Without a diffusion-native inference model, iterative processing may become too resource-intensive.

The research team also addresses the issue of gradient sparsity during long-sequence pre-training, noting that current training methods often compute the loss on only a limited subset of masked tokens. This leads to inefficient gradient feedback and complicates adaptation and alignment on downstream tasks. The researchers propose masking techniques that account for the varying importance of different tokens, improving the model’s ability to perform complex reasoning tasks.
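As a rough illustration of importance-aware masking, the Python sketch below samples mask positions in proportion to per-token weights and reports how many positions would receive a training signal. The weighting scheme, the `sample_mask` function, and the example weights are hypothetical; the article does not describe the study's actual technique.

```python
import random


def sample_mask(weights, mask_ratio, rng):
    """Pick positions to mask, without replacement, proportionally to weights.

    weights should be positive; a larger weight means the token is
    considered more important and is masked (and supervised) more often.
    """
    n = len(weights)
    k = max(1, round(mask_ratio * n))
    positions = list(range(n))
    remaining = list(weights)
    chosen = []
    for _ in range(k):
        pick = rng.choices(range(len(positions)), weights=remaining, k=1)[0]
        chosen.append(positions.pop(pick))
        remaining.pop(pick)
    return sorted(chosen)


tokens = "the proof follows from lemma two".split()
# Hypothetical importance scores: content words weigh more than function words.
weights = [0.2, 3.0, 1.0, 0.2, 3.0, 2.0]
mask = sample_mask(weights, mask_ratio=0.5, rng=random.Random(0))
print("masked positions:", mask)
print("fraction of tokens with a loss term:", len(mask) / len(tokens))
```

Only the masked positions contribute to the loss in this setup, so at a low mask ratio most of a long sequence produces no gradient on a given step.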

A notable aspect of the study is its criticism of fixed output lengths in DLMs. Traditional models terminate naturally by emitting an End-of-Sequence token, whereas DLMs generate into an output whose length is fixed in advance; the authors argue that adaptive termination is critical for computational efficiency. Their proposed methods for determining optimal output lengths aim to prevent problems such as “hallucinatory padding” or information loss, further improving model robustness.
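One simple way to approximate adaptive termination is to generate in fixed-size blocks and stop once an end marker appears. The Python sketch below shows that idea only; it is an assumption for illustration, not the method proposed in the study, and `generate_adaptive`, `make_toy_denoiser`, and the `<eos>` marker are hypothetical names.

```python
EOS = "<eos>"  # hypothetical end-of-sequence marker


def generate_adaptive(denoise_block, block_size, max_blocks):
    """Extend the output block by block and stop at the first EOS.

    denoise_block(prefix, block_size) returns a list of block_size tokens.
    """
    out = []
    for _ in range(max_blocks):
        block = denoise_block(out, block_size)
        if EOS in block:
            # Keep only the content before the end marker; drop the padding.
            out.extend(block[:block.index(EOS)])
            return out
        out.extend(block)
    return out  # length budget exhausted without seeing EOS


def make_toy_denoiser(target):
    """Toy stand-in for a model: copies from target, then pads with EOS."""
    def denoise_block(prefix, block_size):
        chunk = target[len(prefix):len(prefix) + block_size]
        return chunk + [EOS] * (block_size - len(chunk))
    return denoise_block


answer = "yes the train leaves at noon".split()
print(generate_adaptive(make_toy_denoiser(answer), block_size=4, max_blocks=8))
```

A short answer costs two blocks here instead of a full fixed-length output, and no padding tokens reach the result.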

The researchers conclude that existing datasets do not adequately support the learning of global semantic “anchors,” which are vital for developing structural intelligence in DLMs, an advantage already seen in image processing models. Measurements also confirm that although DLMs allow parallel generation in theory, their iterative denoising process can produce higher latency than auto-regressive models. The study finds that increasing batch sizes may erode the speed advantages of diffusion techniques because of the overhead of global attention, underscoring the need for resource-efficient optimization strategies.
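The latency trade-off can be seen with a back-of-the-envelope count. The Python sketch below uses a deliberately crude cost model, which is an assumption and not a measurement from the study: an auto-regressive decoder with a key-value cache processes one new position per sequential pass, while a diffusion decoder reprocesses every position on each denoising step. It ignores the cost of attending over cached context.

```python
def ar_cost(n_tokens):
    """Auto-regressive decoding with a KV cache: one new position per pass."""
    return {"sequential_passes": n_tokens, "positions_processed": n_tokens}


def diffusion_cost(n_tokens, n_steps):
    """Diffusion decoding: every step recomputes all positions."""
    return {
        "sequential_passes": n_steps,
        "positions_processed": n_tokens * n_steps,
    }


n = 256
print("auto-regressive:", ar_cost(n))
for steps in (16, 64, 256):
    print(f"diffusion, {steps} steps:", diffusion_cost(n, steps))
```

Under this model, diffusion needs fewer sequential passes when the step count is below the sequence length, but it always processes more positions in total, which is where larger batches start to hurt.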

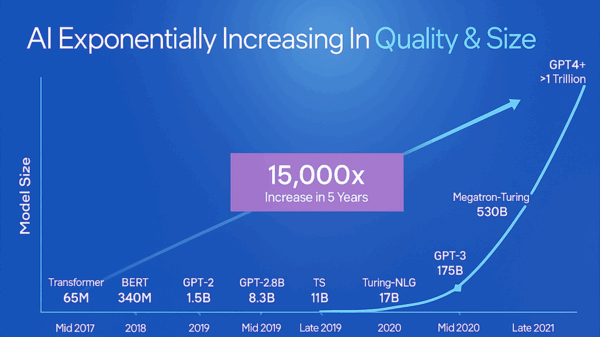

In light of these findings, the researchers call for a departure from traditional prefix-based prompting toward “Diffusion-Native Prompting.” This method allows prompts to be interleaved during generation or to serve as global constraints, and it highlights the need for a standardized framework for using DLMs in complex applications. By addressing these challenges, the researchers aim to unlock a “GPT-4 moment” for diffusion-based models.
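As a rough picture of prompts acting as constraints rather than prefixes, the Python sketch below pins prompt tokens at arbitrary positions of an output and lets a generator fill only the remaining slots. The `build_canvas` and `fill_masks` helpers and the `[MASK]` symbol are hypothetical; the article does not specify an interface for Diffusion-Native Prompting.

```python
MASK = "[MASK]"  # hypothetical mask symbol


def build_canvas(length, constraints):
    """Create a masked canvas with prompt tokens pinned at given positions."""
    canvas = [MASK] * length
    for pos, tok in constraints.items():
        if not 0 <= pos < length:
            raise IndexError(f"constraint position {pos} is outside the canvas")
        canvas[pos] = tok
    return canvas


def fill_masks(canvas, propose):
    """Fill only the masked slots; pinned prompt tokens are never overwritten."""
    return [propose(i) if tok == MASK else tok for i, tok in enumerate(canvas)]


# The prompt fixes the opening and the closing word; the model fills the middle.
canvas = build_canvas(5, {0: "Dear", 4: "Regards"})
print(canvas)
print(fill_masks(canvas, lambda i: f"w{i}"))  # toy generator
```

A prefix-only prompt is the special case where all pinned positions sit at the start of the canvas.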
