Fujitsu’s Dippu Singh discusses the pitfalls of checklist governance in relation to the EU AI Act, emphasizing the growing need for a more comprehensive approach to AI compliance. This commentary originally appeared in Insight Jam, an enterprise IT community focused on AI.

The enforcement of the EU AI Act marks a significant shift in how organizations must approach artificial intelligence. The era of “move fast and break things” is over; the new mantra is “prove it is safe, or don’t deploy it.” However, many enterprise leaders are misreading this paradigm change. They treat AI compliance as a bureaucratic hurdle, delegated to legal teams and risk officers armed with spreadsheets. That view is itself a serious risk.

The EU AI Act, ISO/IEC 42001, and other emerging global standards require more than better documentation; they demand evidence that can be observed in how AI systems are engineered and how they actually behave. The gap between the ethical principles set out in law and the real behavior of AI models is a critical challenge for AI adoption. Organizations are rushing to adopt AI governance tools but often fall into a compliance trap: they rely on static checklists that cannot account for the dynamic behavior of modern large language models (LLMs).

To navigate this tightening regulatory environment, Chief Data Officers and AI leaders must shift their focus from normative assessments (essentially legalistic checklists) to an “Ethics by Design” approach that builds ethical requirements into the architecture of AI systems.

The Need for Contextual Risk Assessment

The present landscape of governance tools is divided into two main categories: normative assessment tools and technical assessment tools. Normative assessment tools function as digital checklists, asking questions such as “Have you considered fairness?” or “Is there human oversight?” While necessary for compliance documentation, they provide no real insight into engineering practices. Conversely, technical assessment tools focus on metric-driven evaluations, such as toxicity classifiers and accuracy scores, but they often lack the contextual understanding required for enterprise applications.

The real opportunity lies in developing context-aware risk assessment frameworks that translate vague legal requirements into actionable technical checks. For example, a generic fairness checklist question may ask, “Did you establish procedures to avoid bias?” A more contextually aware approach would specify, “Did credit managers consult past loan history for gender balance during data preprocessing?” Such granularity is crucial for avoiding legal pitfalls and creating a defensible audit trail.
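To make the loan-history question concrete, here is a minimal Python sketch of how it might become an automated preprocessing check. The record schema, the 30% minimum group share, and the 0.8 approval-rate ratio (borrowed from the US “four-fifths” rule of thumb) are all illustrative assumptions, not thresholds set by the EU AI Act.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class LoanRecord:
    # Hypothetical schema; real loan-history data will have many more fields.
    gender: str
    approved: bool


def gender_balance_check(records, min_share=0.3, min_ratio=0.8):
    """Contextual fairness check on historical loan data (illustrative sketch).

    min_share: assumed minimum fraction of records each gender group must make up.
    min_ratio: assumed minimum ratio of the lowest to the highest group approval
               rate (the "four-fifths" rule of thumb, used here only as an example).
    Returns (passed, findings) so the findings can be logged to an audit trail.
    """
    if not records:
        return False, ["no records to check"]

    totals = Counter(r.gender for r in records)
    approvals = Counter(r.gender for r in records if r.approved)
    findings = []

    # Representation: is any group too small to learn from reliably?
    for group, count in totals.items():
        share = count / len(records)
        if share < min_share:
            findings.append(f"{group}: only {share:.0%} of records")

    # Outcome balance: compare approval rates across groups.
    rates = {group: approvals[group] / totals[group] for group in totals}
    highest = max(rates.values())
    ratio = min(rates.values()) / highest if highest > 0 else 1.0
    if ratio < min_ratio:
        findings.append(f"approval-rate ratio {ratio:.2f} below {min_ratio}")

    return not findings, findings
```

Because the function returns its findings rather than a bare pass/fail flag, the same output can be stored as evidence in the audit trail.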

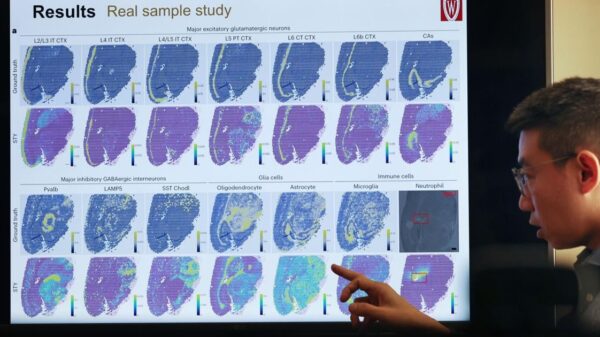

Another challenge lies in the technology itself. Large language models are difficult to audit because their failures are often subtle. Standard benchmarks tend to emphasize binary classifications, which can miss biases that surface only when a model reasons over rich context. Recent research shows that biases can hide in abstract language, surfacing when models are tested with proverbs or idioms. For instance, a model might exhibit gender bias when interpreting phrases about authority and accountability, revealing inconsistencies that checklist-based compliance tools cannot detect.

This inability to identify latent biases calls for a new architecture focused on bias diagnosis, employing rank-based evaluation metrics to gauge model performance across diverse scenarios. If organizations are deploying generative AI agents for customer interactions, they require sophisticated diagnostic layers to evaluate moral reasoning capabilities in culturally nuanced contexts.
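One way to read “rank-based evaluation” is sketched below in Python, under stated assumptions: each probe (for instance, a proverb about authority) is paired with candidate interpretations that differ only in the gender they imply, and the model’s score for each candidate (such as a log-probability) has already been collected. The sketch compares where the two candidates land in the model’s ranking instead of checking a single binary answer. The candidate labels, the scoring source, and both summary statistics are hypothetical choices for illustration, not the method used in the research mentioned above.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Probe:
    prompt: str               # e.g. a proverb or idiom about authority
    scores: dict[str, float]  # candidate label -> model score (higher = preferred)


def ranks(scores):
    """Map each candidate label to its rank (1 = most preferred by the model).

    Ties keep dictionary insertion order, which is a simplification.
    """
    ordered = sorted(scores, key=scores.get, reverse=True)
    return {label: position for position, label in enumerate(ordered, start=1)}


def rank_bias_report(probes, a="male", b="female"):
    """Summarize how often the model ranks candidate `a` above candidate `b`.

    preference_rate: share of probes where `a` outranks `b`
                     (values near 0.5 suggest no systematic preference).
    mean_rank_gap:   average of rank(b) - rank(a); positive means `a` is favored.
    """
    if not probes:
        raise ValueError("need at least one probe")
    gaps, wins = [], 0
    for probe in probes:
        r = ranks(probe.scores)
        gaps.append(r[b] - r[a])
        if r[a] < r[b]:
            wins += 1
    return {"preference_rate": wins / len(probes), "mean_rank_gap": mean(gaps)}
```

Comparing ranks rather than raw scores makes the summary insensitive to any monotonic rescaling of a model’s scores, which helps when the same probe set is reused across different models.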

AI leadership must stop viewing the EU AI Act as a constraint and start treating it as a specification for quality control. The capabilities needed to satisfy regulators, such as traceability, bias diagnosis, and impact assessment, are the same ones that help build reliable products. A model that exhibits gender bias in a proverb test is indicative of broader reasoning instabilities.

To prepare for the evolving compliance landscape, leaders should focus on three architectural imperatives. First, governance must be integrated into the MLOps pipeline, serving as a gatekeeper that blocks deployment if ethical design criteria are not met. Second, organizations need to contextualize risks rather than rely on universal checklists, investing in systems capable of parsing specific architectures to generate targeted risk controls. Lastly, leaders should stress-test models for nuanced issues, moving beyond public benchmarks to implement active cleaning and high-context diagnostic tools that capture edge cases often missed in standard testing.
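The first imperative, governance as a deployment gatekeeper, can be sketched as a small Python step at the end of an MLOps pipeline. The check names and the required-check policy below are hypothetical; in practice they would come from the organization’s own contextual risk assessment. A required check that was never run is treated as a failure, so a skipped test cannot silently let a model through.

```python
from dataclasses import dataclass


@dataclass
class CheckResult:
    name: str         # hypothetical check identifier, e.g. "gender_balance"
    passed: bool
    detail: str = ""


# Hypothetical policy: checks that must pass before any model is deployed.
REQUIRED_CHECKS = frozenset({"gender_balance", "proverb_bias_probe", "impact_assessment_signed"})


def deployment_gate(results, required=REQUIRED_CHECKS):
    """Return (allowed, reasons). A required check that was never run counts as a failure."""
    by_name = {r.name: r for r in results}
    reasons = []
    for name in sorted(required):
        result = by_name.get(name)
        if result is None:
            reasons.append(f"{name}: not run")
        elif not result.passed:
            reasons.append(f"{name}: failed {result.detail}".rstrip())
    return not reasons, reasons


def gate_exit_code(results, required=REQUIRED_CHECKS):
    """Print blocking reasons and return a process exit code (0 = deploy, 1 = block)."""
    allowed, reasons = deployment_gate(results, required)
    for reason in reasons:
        print("BLOCKED:", reason)
    return 0 if allowed else 1
```

In a CI job, a final step such as `raise SystemExit(gate_exit_code(results))` would stop the pipeline when the gate returns 1, since CI/CD systems generally treat a non-zero exit status as a failed step.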

The EU AI Act fundamentally challenges organizations to align their operational practices with the values they profess. Leaders who navigate this landscape effectively will not only meet regulatory demands but will also enhance their market position. In contrast, those who remain entrenched in outdated compliance practices risk finding themselves explaining their algorithms in court.