A recent study by researchers at the Massachusetts Institute of Technology (MIT) has revealed that individuals are more inclined to trust medical advice provided by artificial intelligence (AI) than that offered by human doctors. This research, published in the *New England Journal of Medicine*, involved 300 participants who assessed medical responses generated by either a physician or an AI model, such as ChatGPT.

Participants, comprising both experts and non-experts in the medical field, rated the AI-generated responses as more accurate, valid, trustworthy, and complete. Notably, neither group could reliably distinguish AI-generated content from responses written by human doctors. This raises the concern that people may favor AI output even when it is inaccurate.

The study also highlighted a troubling tendency among participants to accept low-accuracy AI-generated advice as valid and trustworthy. This tendency translated into a high likelihood of following potentially harmful medical recommendations and of seeking unnecessary medical interventions. Such findings underscore the risks associated with misinformation from AI systems in healthcare, which could adversely impact patients’ health and well-being.

Documented cases of AI providing harmful medical advice further illustrate these dangers. In one instance, a 35-year-old Moroccan man required emergency medical attention after a chatbot instructed him to wrap rubber bands around his hemorrhoid. In another case, a 60-year-old man suffered poisoning after ChatGPT suggested ingesting sodium bromide as a means to lower his salt intake. These episodes serve as stark reminders of the potential hazards inherent in relying on AI for medical guidance.

Dr. Darren Lebl, research service chief of spine surgery at the Hospital for Special Surgery in New York, has raised concerns regarding the quality of AI-generated medical recommendations. He noted that many suggestions from such systems lack credible scientific backing. “About a quarter of them were made up,” he stated, emphasizing the inaccuracies and risks associated with trusting AI for healthcare advice.

This research adds to the growing body of evidence suggesting that while AI technology has the potential to revolutionize various industries, its application in sensitive fields like healthcare must be approached with caution. The propensity of individuals to trust AI-generated medical advice raises questions about the reliability and accountability of these systems, particularly in high-stakes situations involving health and safety.

As AI continues to evolve and integrate into everyday life, the implications for healthcare are profound. The reliance on AI for medical advice may not only affect decision-making processes among patients but also complicate the traditional roles of healthcare professionals. Moving forward, it will be essential to establish guidelines and frameworks that ensure the responsible use of AI in medicine, safeguarding against misinformation while harnessing its potential benefits.

See also AI Study Reveals Generated Faces Indistinguishable from Real Photos, Erodes Trust in Visual Media

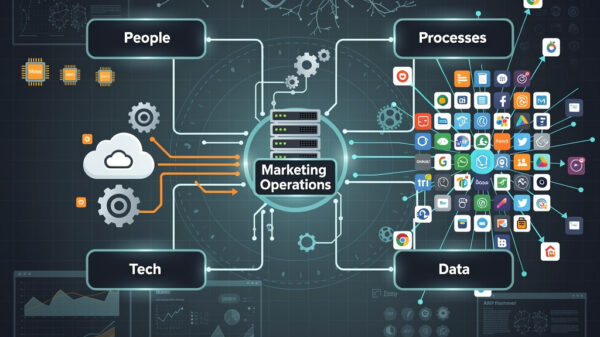

AI Study Reveals Generated Faces Indistinguishable from Real Photos, Erodes Trust in Visual Media Gen AI Revolutionizes Market Research, Transforming $140B Industry Dynamics

Gen AI Revolutionizes Market Research, Transforming $140B Industry Dynamics Researchers Unlock Light-Based AI Operations for Significant Energy Efficiency Gains

Researchers Unlock Light-Based AI Operations for Significant Energy Efficiency Gains Tempus AI Reports $334M Earnings Surge, Unveils Lymphoma Research Partnership

Tempus AI Reports $334M Earnings Surge, Unveils Lymphoma Research Partnership Iaroslav Argunov Reveals Big Data Methodology Boosting Construction Profits by Billions

Iaroslav Argunov Reveals Big Data Methodology Boosting Construction Profits by Billions