California legislators have taken a significant step toward establishing safety standards for artificial intelligence: on January 28, 2026, the California Senate passed the Voluntary AI Standards Act. The legislation, SB 813, aims to ensure the responsible development of AI technologies by creating independent panels of AI experts, academics, and government officials. These panels will be tasked with developing comprehensive safety standards that keep pace with the evolving field of artificial intelligence.

The proposed California Artificial Intelligence Standards and Safety Commission will be integral to this initiative, as it is set to certify and monitor AI developers and vendors who choose to adhere to the newly established standards. This voluntary approach signifies a proactive effort to foster accountability within the AI sector, which has faced increasing scrutiny over ethical implications and safety concerns.

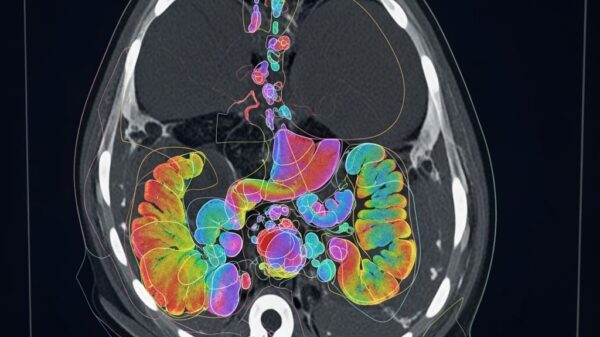

As AI spreads into more areas of society, from healthcare to finance, the need for rules that prioritize safety and ethical use becomes more pressing. Supporters see such standards as a crucial way to prevent misuse of AI technologies. They argue that without a framework for accountability, the risks associated with AI, ranging from algorithmic bias to privacy violations, could escalate.

The creation of this commission aligns with broader trends observed globally, where governments and organizations are grappling with the implications of rapid advancements in AI. Countries across Europe and Asia have begun implementing similar regulatory measures aimed at ensuring the responsible development and deployment of AI systems. In this context, California’s initiative may serve as a model for other regions looking to enact effective legislation in the face of technological innovation.

Because AI systems keep growing in complexity and capability, the independent panels outlined in SB 813 will need to develop safety standards that are both robust and adaptable to future advancements. This adaptability is essential: AI innovation often outpaces regulatory frameworks, leaving gaps in oversight.

The voluntary nature of the standards proposed by the California Senate allows for flexibility, enabling developers to engage with the regulatory process without facing immediate penalties. This approach may encourage more companies to participate in the certification process, fostering a culture of compliance and responsibility within the industry. However, some critics express concern that voluntary standards may not be sufficient to address the serious risks posed by unregulated AI applications.

As this legislation moves forward, it could reshape how AI is developed and deployed. Companies that adopt these standards early may gain a market advantage, as consumers and stakeholders increasingly prioritize ethical practices and safety in technology. Moreover, a recognized set of standards could strengthen public trust in AI technologies, the lack of which has been a critical hurdle to widespread acceptance.

Looking ahead, the outcomes of this initiative will likely influence not only the regulatory landscape in California but also the conversation around AI governance on a national level. As other states observe California’s approach, a ripple effect could emerge, prompting similar legislative efforts elsewhere in the United States.

The passage of the Voluntary AI Standards Act underscores the urgent need for responsible AI development in an era where technology is rapidly evolving. By laying the groundwork for safety standards, California is positioning itself as a leader in the ongoing dialogue about the ethical implications of artificial intelligence.

For further information, visit the official sites of the California Government and the California Legislative Information.
