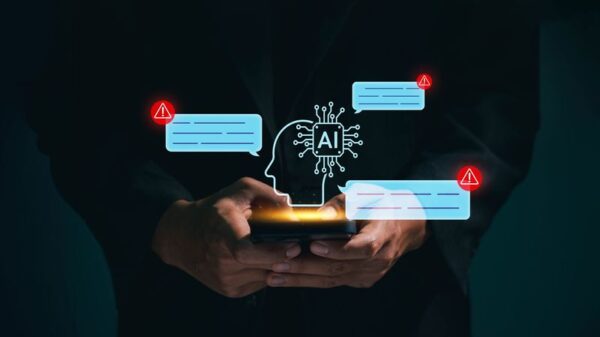

India has strengthened its AI regulation through amendments to the IT (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021, effective February 20, 2026. The revised rules mandate prominent labeling of AI-generated content and introduce expedited takedown timelines as short as two to three hours.

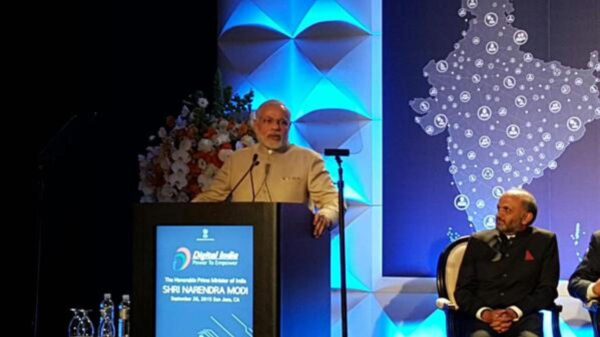

The Ministry of Electronics and Information Technology (MeitY) has formally notified changes to the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021, expanding compliance obligations for social media intermediaries, particularly regarding AI-generated content. While the central government has relaxed certain earlier proposals concerning AI labeling requirements, it has simultaneously imposed significantly stricter timelines for the removal of unlawful content. The 2026 amendments, issued under gazette notification number G.S.R. 120(E), come into force on February 20, 2026.

India does not regulate AI as a standalone technology but focuses on the outputs of AI systems hosted, transmitted, or enabled by digital intermediaries that violate Indian law. The new compliance obligations include addressing synthetic or AI-generated content, deepfakes, non-consensual sensitive imagery, misleading and harmful content, and expedited removal timelines.

The revised rules impose explicit labeling obligations. Online platforms must clearly and prominently label AI-generated or synthetically generated content, ensuring that these labels cannot be removed, altered, or suppressed. They must also obtain user declarations when content has been created or materially altered using AI systems and implement technical measures to verify and track the origin of AI content. Although an earlier proposal requiring AI labels to occupy 10 percent of screen space has been withdrawn, the requirement of “prominence” remains enforceable, compelling platforms to ensure that disclosures are conspicuous and accessible.
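As a rough illustration of how the declaration and labeling duties described above might combine in a platform's upload path, the Python sketch below treats content as requiring a label when either the uploader declares AI involvement or the platform's own checks flag it. The `Upload` type, its field names, the label text, and the rule that either signal is sufficient are all assumptions made for illustration; the rules as described here do not prescribe a data model or specific wording.

```python
from dataclasses import dataclass

# Hypothetical label text; the rules require prominence, not specific wording.
AI_LABEL = "AI-generated"


@dataclass(frozen=True)
class Upload:
    """Illustrative upload record; all field names are assumptions."""
    content_id: str
    user_declared_ai: bool      # uploader's declaration at submission time
    platform_flagged_ai: bool   # result of the platform's own technical checks


def requires_ai_label(upload: Upload) -> bool:
    # Assumed policy: either signal is enough, so a missing declaration
    # does not clear content that the platform's own checks have flagged.
    return upload.user_declared_ai or upload.platform_flagged_ai


def display_caption(upload: Upload, caption: str) -> str:
    # The label is derived from the stored flags at render time, so editing
    # the caption text alone cannot strip it.
    if requires_ai_label(upload):
        return f"[{AI_LABEL}] {caption}"
    return caption
```

Deriving the label from stored flags rather than storing it inside user-editable text is one possible way to approach the requirement that labels not be removed, altered, or suppressed. Whether it satisfies the "prominence" standard depends on how the label is actually displayed.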

India has introduced aggressive takedown timelines for content. Non-consensual intimate imagery, including AI-generated deepfake imagery, must be removed within two hours, while other unlawful content, including AI-generated misinformation, has a three-hour limit. Privacy or impersonation complaints must be addressed within 24 hours, and grievance resolutions within 72 hours. These timelines apply irrespective of whether the content is AI-generated or manually created, creating significant operational risks for platforms if they fail to act promptly.
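The timelines above can be expressed as a simple deadline lookup. The following Python sketch is illustrative only: the category names, the function names, and the assumption that the clock starts when the complaint or order is received are not taken from the notified text and should be checked against it.

```python
from datetime import datetime, timedelta, timezone
from enum import Enum


class Category(Enum):
    """Hypothetical complaint categories mirroring the timelines in the article."""
    NON_CONSENSUAL_INTIMATE_IMAGERY = "ncii"
    OTHER_UNLAWFUL = "other_unlawful"
    PRIVACY_OR_IMPERSONATION = "privacy_or_impersonation"
    GRIEVANCE = "grievance"


# Action windows as stated in the article.
ACTION_WINDOWS = {
    Category.NON_CONSENSUAL_INTIMATE_IMAGERY: timedelta(hours=2),
    Category.OTHER_UNLAWFUL: timedelta(hours=3),
    Category.PRIVACY_OR_IMPERSONATION: timedelta(hours=24),
    Category.GRIEVANCE: timedelta(hours=72),
}


def action_deadline(category: Category, received_at: datetime) -> datetime:
    # Assumption: the window runs from the moment of receipt.
    if received_at.tzinfo is None:
        raise ValueError("received_at must be timezone-aware")
    return received_at + ACTION_WINDOWS[category]


def is_overdue(category: Category, received_at: datetime, now: datetime) -> bool:
    # True once the current time has passed the computed deadline.
    return now > action_deadline(category, received_at)


if __name__ == "__main__":
    received = datetime(2026, 2, 20, 10, 0, tzinfo=timezone.utc)
    for category in Category:
        print(category.name, action_deadline(category, received).isoformat())
```

Requiring timezone-aware timestamps avoids ambiguity when moderation teams and servers sit in different regions, which matters when the shortest window is two hours.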

Failure to act within the prescribed timelines may result in the loss of safe harbor protection, criminal liability exposure, blocking orders under Section 69A, and regulatory enforcement actions. For AI platforms, detection latency represents a critical legal risk factor. In India, AI-generated content is governed by two primary legal instruments: the Information Technology (IT) Act, 2000, and the IT (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021. Section 79 of the IT Act provides legal protection to intermediaries against liability for third-party content, provided they comply with due diligence requirements.

Safe harbor protection under Section 79 is contingent upon intermediaries taking appropriate actions to prevent unlawful content and removing it promptly upon gaining knowledge. The regulatory framework targets unlawful AI-generated outputs rather than banning AI systems outright. This scrutiny intensifies when AI outputs impersonate individuals, fabricate real-world events, disseminate misinformation, or produce non-consensual intimate imagery.

Significant Social Media Intermediaries (SSMIs) must comply with enhanced requirements, including appointing a Chief Compliance Officer and a Nodal Contact Person for law enforcement coordination, publishing monthly compliance reports, and enabling traceability of message originators when legally mandated. These requirements introduce potential personal liability for local compliance officers.

Recent enforcement trends indicate a shift towards stringent actions against platforms facilitating unlawful content, including directives to disable services for non-consensual imagery and blocking of websites hosting child sexual abuse material. The regulatory approach is primarily executive-driven and enforcement-oriented, highlighting the need for platforms to adopt robust compliance frameworks that are not merely reactive.

As India’s regulatory environment for AI evolves, multinational technology companies must reassess their compliance strategies. The new rules demand that AI misuse detection systems operate continuously and be integrated into product design, ensuring mechanisms for real-time moderation and rapid response to unlawful content. For foreign companies, developing India-dedicated moderation pipelines and local compliance infrastructure is essential to navigate the increasing scrutiny and regulatory risks associated with AI content.

In conclusion, India’s regulatory model does not ban AI-generated content but subjects it to rigorous accountability standards. The mandatory labeling, compressed removal timelines, proactive safeguard requirements, and conditional safe harbor create a high-compliance environment. Companies operating in India must be prepared to meet these legal demands to ensure that their platforms remain compliant and mitigate risks associated with AI-generated content.
