OpenAI is set to introduce multiple updates to its coding model Codex in the coming weeks, accompanied by a caution regarding the model’s capabilities. CEO Sam Altman announced via X that these new features will be released next week. This will mark the first time the model reaches the “High” cybersecurity risk level in OpenAI’s risk framework, with only the “Critical” level positioned above it.

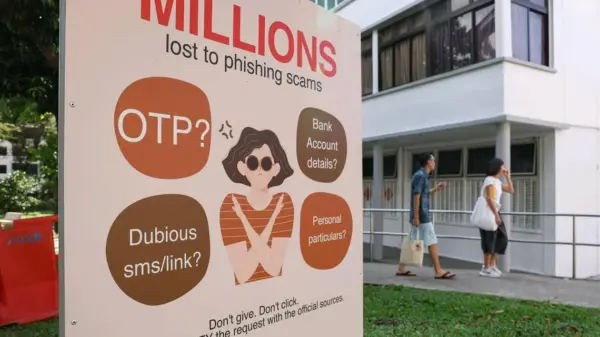

The “High” risk designation indicates that an AI model can facilitate cyberattacks by automating operations against well-defended targets or identifying security vulnerabilities. Such advancements could disrupt the delicate equilibrium between cyberattacks and defenses, leading to a significant uptick in cybercriminal activities.

Under OpenAI’s guidelines, the “High” level denotes that the model can aid in developing tools and executing operations for both cyber defense and offense. The model’s ability to eliminate existing barriers to scaled cyber operations, including automating the entire process of cyberattacks or detecting and exploiting vulnerabilities, poses serious implications for cybersecurity. OpenAI warns that this could upset the current balance, automating and increasing the frequency of attacks.

Altman emphasized that OpenAI will begin with limitations to prevent misuse of its coding models for criminal activities. Over time, the company intends to focus on strengthening defenses and assisting users in addressing security flaws. He argued that the rapid deployment of current AI models is critical for enhancing software security, especially as more powerful models are anticipated in the near future. This sentiment resonates with OpenAI’s ongoing commitment to AI safety, as Altman noted that “not publishing is not a solution either.”

Understanding the “Critical” Level

At the apex of OpenAI’s framework lies the “Critical” level, where an AI model could autonomously discover previously unknown security vulnerabilities and create functional zero-day exploits for them across numerous critical systems without human oversight. A model at this level could also devise and execute innovative cyberattack strategies against secure targets with minimal guidance.

The ramifications of a “Critical” level model are profound. Such a tool could autonomously identify and exploit vulnerabilities across various software platforms, potentially leading to catastrophic consequences if utilized by malicious actors. The risk is exacerbated by the unpredictable nature of novel cyber operations, which could involve new types of zero-day exploits or unconventional command-and-control methods.

According to OpenAI’s framework, the exploitation of vulnerabilities across all software could result in severe incidents, including attacks on military or industrial systems, as well as OpenAI’s infrastructure. The threat of unilateral actors finding and executing end-to-end exploits underscores the urgency of establishing robust safeguards and security controls before development advances further.

As OpenAI prepares for these updates, it highlights the dual nature of its technology, which can both enhance cybersecurity measures and pose significant risks. The company’s approach reflects a broader industry challenge of balancing innovation with safety. As AI technology evolves, the debate surrounding its ethical use and the importance of regulatory frameworks continues to gain prominence, shaping the future landscape of cybersecurity and AI development.
