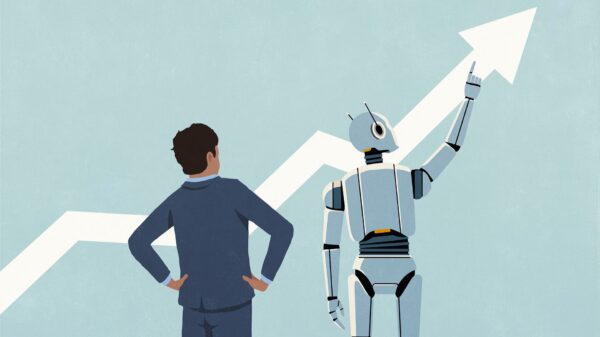

Artificial intelligence (AI) is rapidly becoming the cornerstone of industrial efficiency, a transformation that has evolved significantly since its initial emergence as a digital curiosity. Various sectors across the global economy are experiencing drastic changes as high-speed processing becomes essential. For instance, search engines now leverage AI to summarize complex research papers in an instant, healthcare teams analyze extensive datasets through these systems, and energy companies simulate climate and geological processes to forecast future trends.

Despite significant advancements, AI users often face slow response cycles, waiting as answers appear haltingly on screen rather than flowing naturally. The year 2025 marked pivotal milestones for AI, paving the way for a transformative revolution anticipated in 2026. To address this latency challenge, Silicon Valley-based Cerebras is engineering industry-leading inference speeds measured in tokens per second. The speed at which a system can generate tokens (the small units of text that make up every AI response) has become a critical performance metric.

Cerebras’ approach diverges from conventional manufacturing by using the entire silicon wafer as a single, unified computing device. Its latest architecture, the WSE-3, integrates four trillion transistors and 900,000 AI-optimized compute cores onto a single substrate. The company reports that this platform has reached 2,100 output tokens per second on a 70-billion-parameter model, a figure recorded by Artificial Analysis. Whether these numbers reshape market dynamics will depend on how they hold up against energy-efficiency requirements and the demands of real-world workloads.

The feel of interacting with advanced AI systems is increasingly defined by token generation speed, a metric that once seemed merely a laboratory curiosity. When a model generates only 20 or 30 tokens per second, responses unfold gradually, whereas outputs measured in the hundreds or thousands of tokens per second approach immediacy. Cerebras has framed its hardware development around this threshold. After initially achieving around 450 tokens per second, the company later reported a leap to 2,100 tokens per second under specified benchmarking conditions.
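To make these rates concrete, the short sketch below converts tokens per second into the time a user would wait for a complete answer. The 500-token response length is an assumption chosen for illustration, and 25 tokens per second stands in for the "20 or 30" range; the 450 and 2,100 figures are the reported rates quoted above.

```python
def seconds_to_generate(num_tokens: int, tokens_per_second: float) -> float:
    """Time to emit num_tokens at a steady output rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


def latency_table(num_tokens: int, rates: list[float]) -> dict[float, float]:
    """Map each output rate (tokens/s) to the time needed for num_tokens."""
    return {rate: seconds_to_generate(num_tokens, rate) for rate in rates}


# Assumed response length for illustration only; not a figure from the article.
RESPONSE_TOKENS = 500

# 25 tok/s approximates the slow case; 450 and 2,100 are the reported rates.
for rate, secs in latency_table(RESPONSE_TOKENS, [25, 450, 2100]).items():
    print(f"{rate:>5} tok/s -> {secs:6.2f} s")
```

Under these assumptions the same answer takes 20 seconds at the slow rate, a little over one second at 450 tokens per second, and roughly a quarter of a second at 2,100. The calculation ignores time to first token and network delay, so it describes only the streaming portion of a response.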

The implications of such advancements can be seen across various applications, affecting voice assistants, real-time translation services, and AI-powered research tools. A notable reduction in delay between question and answer enhances usability significantly, enabling the integration of AI systems into workflows that require near-instant feedback. While measuring tokens per second provides insight into output rate, it does not inherently reflect the intelligence or accuracy of the models being employed. Faster responses enhance usability but do not automatically indicate greater cognitive capabilities.

As the industry undergoes these transformations, understanding the context behind performance measurements becomes crucial. Cerebras emphasizes the importance of aligning testing conditions for valid benchmarking across different hardware providers. Variations in performance are often dictated by factors like decoding methods, concurrency levels, and workload configurations. The company’s internal improvements have positioned it among the fastest publicly reported systems for large model inference, marking a significant milestone in AI hardware development.

Technical Details

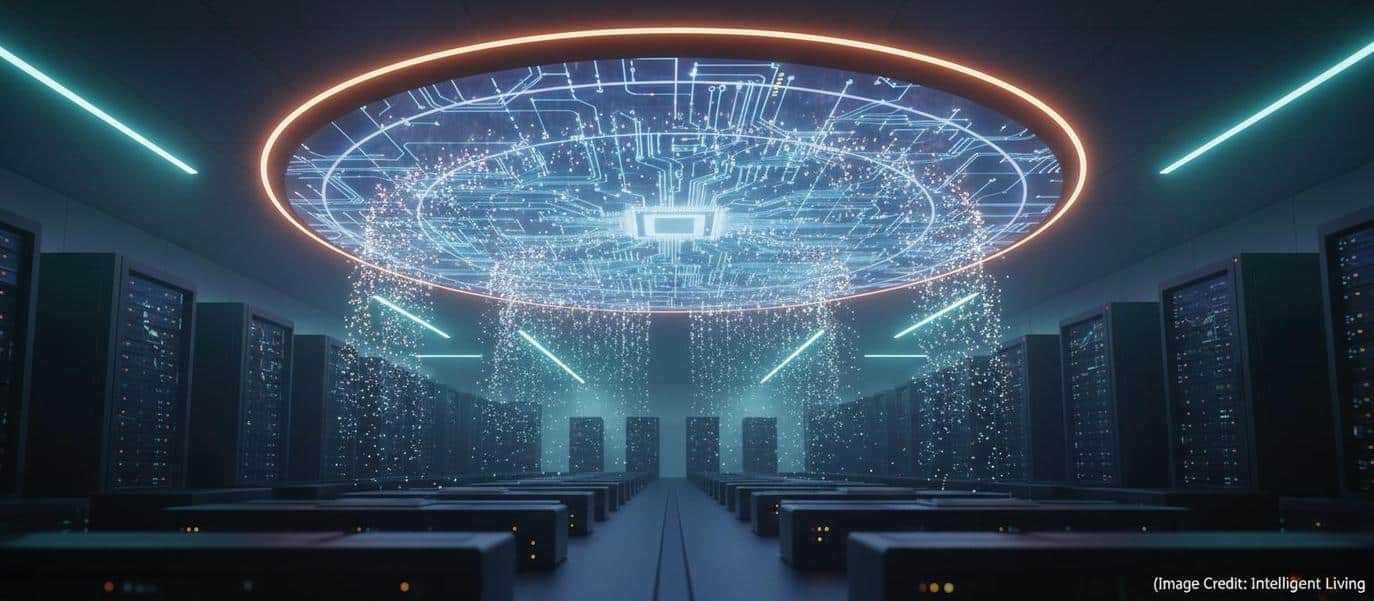

Traditional semiconductor manufacturing typically involves slicing silicon wafers into smaller chips, each functioning as a separate processor with distinct boundaries. This fragmentation creates a problem when a large AI model must be distributed across multiple chips, because high-speed interconnects are then needed to bridge the gaps between them. Cerebras addresses this issue by treating the entire wafer as a single chip, which reduces the communication bottlenecks that often slow distributed AI models. The WSE-3 architecture exemplifies this approach by integrating a large pool of on-chip memory: it offers 44GB of SRAM and a peak performance of 125 petaflops.

While transistor count often dominates discussions about AI hardware, the real speed of these systems is ultimately governed by their memory architecture. The WSE-3’s design allows model parameters to be accessed locally, which largely avoids the latency incurred when data must travel between processors and external memory. This on-chip SRAM bandwidth is crucial for sustaining high token output rates under appropriate conditions, especially in large language models, where memory movement can become the limiting factor.
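A common back-of-the-envelope way to see why memory movement can cap token rates is to assume that generating one token for a single request requires reading every model weight once. The sketch below applies that simplification; it is not Cerebras' own performance model, and the two bandwidth values and the 2-byte weight size are hypothetical placeholders rather than published specifications.

```python
def max_decode_tokens_per_second(
    num_params: float,
    bytes_per_param: float,
    bandwidth_bytes_per_s: float,
) -> float:
    """Upper bound on single-stream decode rate when memory-bound.

    Simplifying assumption: each generated token reads all weights once,
    so the rate cannot exceed bandwidth divided by model size in bytes.
    """
    model_bytes = num_params * bytes_per_param
    if model_bytes <= 0 or bandwidth_bytes_per_s <= 0:
        raise ValueError("all inputs must be positive")
    return bandwidth_bytes_per_s / model_bytes


PARAMS = 70e9          # the 70-billion-parameter model size cited in the article
BYTES_PER_PARAM = 2    # assumption: 16-bit weights

# Hypothetical bandwidths, chosen only to show how the bound scales.
for label, bandwidth in [("1 TB/s", 1e12), ("1 PB/s", 1e15)]:
    bound = max_decode_tokens_per_second(PARAMS, BYTES_PER_PARAM, bandwidth)
    print(f"{label}: at most {bound:,.0f} tokens/s per stream")
```

Because the bound scales linearly with bandwidth, moving weights closer to the compute cores raises the ceiling directly. Real systems depart from this estimate through batching, caching, and other optimizations, so it is best read as an order-of-magnitude guide.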

Moreover, Cerebras has developed a defect-tolerant architecture to address the yield challenges inherent in large-scale semiconductor manufacturing. By distributing smaller AI cores across the wafer, the system can bypass nonfunctional cores, allowing approximately 900,000 out of roughly 970,000 cores to remain operational. This engineering approach has enabled Cerebras to transition from prototype to product, showcasing the viability of wafer-scale computing.
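The core counts above imply a built-in margin of spare cores. The sketch below works through that arithmetic and adds a toy remapping function that skips defective cores. The remapping is a deliberately simplified illustration with hypothetical names; it does not describe Cerebras' actual routing or redundancy scheme.

```python
def usable_fraction(active_cores: int, physical_cores: int) -> float:
    """Share of fabricated cores that are exposed as working cores."""
    return active_cores / physical_cores


def map_logical_to_physical(physical_count: int, defective: set[int]) -> list[int]:
    """Toy remap: logical core i is the i-th non-defective physical core."""
    return [core for core in range(physical_count) if core not in defective]


# Figures from the article: roughly 970,000 physical cores, 900,000 active.
ACTIVE, PHYSICAL = 900_000, 970_000
spare = PHYSICAL - ACTIVE
print(f"usable: {usable_fraction(ACTIVE, PHYSICAL):.1%}, spare cores: {spare:,}")

# Tiny example: 6 physical cores, with cores 2 and 4 marked defective.
print(map_logical_to_physical(6, {2, 4}))
```

With the article's figures, about 93 percent of the fabricated cores are active, leaving roughly 70,000 that can absorb manufacturing defects. In the toy example, four logical cores map onto physical cores 0, 1, 3, and 5.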

As the demand for AI continues to soar, the hardware’s credibility must be validated through broad industrial adoption. Cerebras has established partnerships with national laboratories and energy firms, underlining the technology’s real-world applications in drug discovery, climate modeling, and AI-driven consumer platforms. These deployments illustrate that wafer-scale systems are far from experimental; they are actively enhancing productivity across multiple sectors.

Looking ahead, the strategic importance of robust AI infrastructure is becoming increasingly evident. As nations and corporations seek technological sovereignty, collaborations between hardware firms like Cerebras and organizations such as G42 emphasize the necessity for expansive AI supercomputing capabilities. The ongoing exploration of diverse hardware architectures may lead to more resilient and adaptable computing solutions, especially as the global economy leans more heavily on AI-driven technologies.

Ultimately, the rapid advancements in AI hardware, particularly in throughput and efficiency, will likely reshape how industries approach their technological frameworks. As businesses and consumers experience the benefits of faster, more responsive AI tools, the quest for immediacy in artificial intelligence will continue to drive innovation in the field.
