Anthropic has launched Claude Code Security, a tool designed to catch security vulnerabilities that conventional scanners miss. The announcement triggered a sharp sell-off in cybersecurity stocks.

Claude Code Security is built into the web version of Claude Code. It scans codebases for vulnerabilities and suggests targeted patches, but Anthropic stresses that human oversight remains essential: every proposed fix must be reviewed. The tool is currently available as a limited research preview for Enterprise and Team customers, and maintainers of open-source projects can apply for free, expedited access.

Traditional analysis tools rely mainly on predefined rules, identifying vulnerabilities by matching code against known patterns. These tools are effective at detecting common issues such as exposed passwords and outdated encryption, but they often overlook subtler flaws such as business logic errors or broken access controls. Anthropic says Claude Code Security takes a different approach: like a human security researcher, it reasons about how components interact and how data flows through an application, which lets it identify complex vulnerabilities that rule-based tools tend to miss.
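To make the contrast concrete, here is a minimal, hypothetical sketch of a rule-based check in Python. The rule names, patterns, and the `scan` function are illustrative assumptions, not taken from any real scanner or from Anthropic. Pattern rules flag a hard-coded password and an outdated hash function, but a missing authorization check has no fixed textual signature, so the same scanner reports nothing for it.

```python
import re
from dataclasses import dataclass


@dataclass
class Finding:
    rule: str      # name of the rule that matched
    line_no: int   # 1-based line number in the scanned source
    text: str      # the offending line, stripped


# Hypothetical pattern rules in the spirit of rule-based scanners.
RULES = {
    # A string literal assigned to anything ending in "password".
    "hardcoded-password": re.compile(r"""password\s*=\s*["'][^"']+["']""", re.IGNORECASE),
    # Use of MD5, an outdated cryptographic hash.
    "weak-hash-md5": re.compile(r"\bhashlib\.md5\("),
}


def scan(source: str) -> list[Finding]:
    """Match every line against every rule; no understanding of semantics."""
    findings = []
    for line_no, line in enumerate(source.splitlines(), start=1):
        for rule, pattern in RULES.items():
            if pattern.search(line):
                findings.append(Finding(rule, line_no, line.strip()))
    return findings


# Flagged: each problem has a recognizable textual pattern.
INSECURE_CONFIG = '''
db_password = "hunter2"
digest = hashlib.md5(data).hexdigest()
'''

# Not flagged: the flaw is a missing ownership check (broken access control).
# Spotting it requires reasoning about who is allowed to call this function.
LOGIC_FLAW = '''
def delete_invoice(current_user, invoice_id):
    invoice = db.get_invoice(invoice_id)
    db.delete(invoice)
'''

if __name__ == "__main__":
    for f in scan(INSECURE_CONFIG):
        print(f.rule, f.line_no)
    print(len(scan(LOGIC_FLAW)))
```

Running the module prints `hardcoded-password 2` and `weak-hash-md5 3`, then `0` for the access-control flaw. A production scanner would use far more rules and richer parsing, but the sketch shows the kind of gap the article describes: matching text is not the same as understanding what the code allows.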

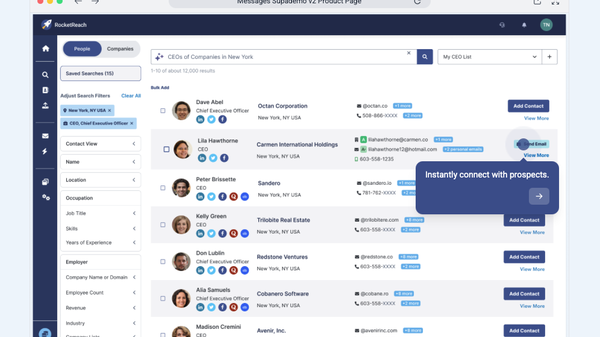

According to Anthropic, each vulnerability Claude identifies goes through a multi-stage verification process before it is shown to analysts. The tool revisits its own findings and attempts to confirm or refute them, which helps filter out false positives. The results, each assigned a severity and a confidence rating, appear on a dashboard where teams can review findings, inspect proposed patches, and approve fixes. Developers keep final authority over any changes, so human judgment remains central to the process.

Anthropic says the feature builds on more than a year of research into Claude's cybersecurity capabilities, which its Frontier Red Team tested through initiatives including capture-the-flag competitions and partnerships aimed at defending critical infrastructure. In the course of this work, the team found more than 500 vulnerabilities in production open-source codebases, some of which had gone undetected for decades. The company says it is now triaging these findings and disclosing them responsibly to the relevant maintainers.

Looking ahead, Anthropic expects AI to play an increasingly important role in scanning the world's code as models get better at detecting long-hidden bugs and security issues. However, the company also warns that attackers are likely to use AI to find exploitable vulnerabilities faster.

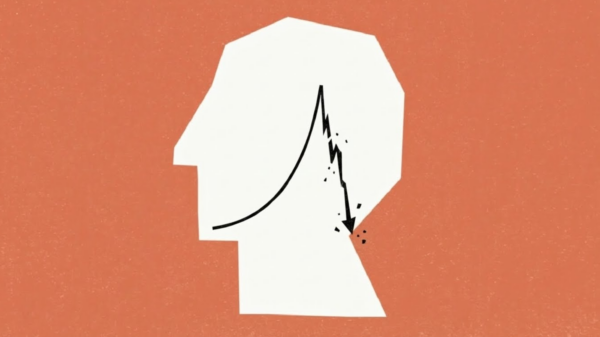

Following the announcement of Claude Code Security, Wall Street reacted negatively, with cybersecurity stocks experiencing notable declines. Bloomberg reported that shares of CrowdStrike fell 8 percent, Cloudflare dropped 8.1 percent, Okta saw a 9.2 percent decrease, and SailPoint declined by 9.4 percent. The Global X Cybersecurity ETF also fell by 4.9 percent, reaching its lowest point since November 2023.

The sell-off fits a broader trend: an earlier Anthropic announcement of specialized plugins for its Cowork platform had already weighed on software stocks. Investors increasingly worry that new AI tools will let users build their own applications, reducing demand for established software products and putting pressure on growth, margins, and pricing power across the sector.

Despite these worries, it seems unlikely that all companies will suddenly pivot to creating their own security software or other complex applications. The division of labor plays a crucial role in economic efficiency, and without it, the industry could face extreme fragmentation, resulting in countless in-house tools that require extensive maintenance, security updates, and oversight, effectively negating the economies of scale offered by established providers.

A more plausible scenario is that AI tools lower software production costs enough to make niche applications viable that previously were not worth building. Companies may solve specific problems faster with custom tools while continuing to rely on proven products for broader needs, and those products are themselves adding AI features. However, the notion that cheaper development means lower operating costs is misleading: maintenance, updates, compliance, support, and integration with existing systems typically account for the majority of IT spending. Even an application built with AI in a matter of hours still has to be operated and maintained.
