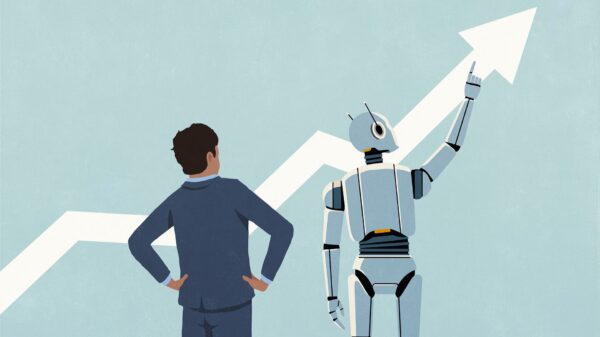

Ethereum co-founder Vitalik Buterin has proposed a radical overhaul of decentralized autonomous organizations (DAOs) in which personal artificial intelligence agents cast votes on behalf of users. The plan, shared on the social media platform X, comes a month after Buterin raised concerns about low participation and the centralization of power within DAOs, and it marks a shift away from the current trend of delegating votes to large token holders.

Buterin’s proposal emphasizes the need for individuals to employ AI models that are tailored to their past communications and personal values, enabling them to engage actively in the myriad decisions that DAOs encounter. He noted, “There are many thousands of decisions to make, involving many domains of expertise, and most people don’t have the time or skill to be experts in even one, let alone all of them.” In his view, deploying personal large language models (LLMs) could effectively address the challenges associated with governance participation.

Central to Buterin’s vision is the assurance of privacy for users. AI agents would function within secure frameworks such as multi-party computation (MPC) and trusted execution environments (TEEs), allowing these agents to process sensitive information without disclosing it on public blockchains. This framework is intended to protect users’ confidential data while still enabling their participation in decision-making processes.

Another critical aspect of the proposal is participant anonymity. Buterin has called for the use of zero-knowledge proofs (ZKPs), a cryptographic mechanism that lets users prove they are eligible to vote without exposing their wallet addresses or the details of their votes. This could help mitigate the risks of coercion and bribery, as well as the tendency of smaller voters to mimic the decisions of large token holders, often referred to as “whale watching.”

The envisioned AI stewards would not only automate routine governance tasks but also flag critical issues that require human oversight. This would streamline decision-making within DAOs and let users focus on matters of greater significance. To tackle the rising volume of low-quality or irrelevant proposals, a growing concern as generative AI permeates open forums, Buterin suggests establishing prediction markets. In these markets, AI agents would assess the likelihood of proposals being accepted, with accurate predictions rewarded and less valuable contributions penalized.
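One way such a market could reward accuracy is with a quadratic (Brier-style) scoring rule. The sketch below is illustrative only: the proposal as reported does not specify a scoring rule, and the names `Forecast`, `brier_payout`, and `weight` are hypothetical, chosen for this example.

```python
from dataclasses import dataclass


@dataclass
class Forecast:
    agent: str       # hypothetical identifier for the AI agent
    p_accept: float  # predicted probability that the proposal is accepted
    weight: float    # scale factor applied to the agent's score


def brier_payout(forecast: Forecast, accepted: bool) -> float:
    """Score a forecast with a rescaled quadratic (Brier) rule.

    Assumption: this rule is a stand-in, not part of the reported proposal.
    An uninformative forecast of 0.5 scores 0, a perfect forecast scores
    +weight, and a fully confident wrong forecast scores -3 * weight.
    """
    if not 0.0 <= forecast.p_accept <= 1.0:
        raise ValueError("p_accept must be between 0 and 1")
    outcome = 1.0 if accepted else 0.0
    squared_error = (forecast.p_accept - outcome) ** 2
    return forecast.weight * (1.0 - 4.0 * squared_error)


if __name__ == "__main__":
    forecasts = [
        Forecast("agent-a", 0.9, 10.0),
        Forecast("agent-b", 0.5, 10.0),
        Forecast("agent-c", 0.1, 10.0),
    ]
    # Suppose the proposal was accepted; confident correct agents gain most.
    for f in forecasts:
        print(f.agent, round(brier_payout(f, accepted=True), 2))
```

Because the rule is quadratic, an agent maximizes its expected score by reporting its honest probability estimate, which is the property a market of this kind would need in order to filter proposals reliably.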

Buterin’s call for privacy-preserving tools extends beyond voting. He argues that MPC and TEEs could also let AI agents evaluate sensitive material, such as job applications or legal disputes, without exposing it on a public blockchain. DAOs could then handle confidential matters while keeping the benefits of decentralized governance.

If adopted, Buterin’s proposal could reshape decentralized governance, shifting influence from large token holders to individual users equipped with AI agents. Users who rely on personal agents may become more engaged in DAOs, fostering a more democratic approach to governance. The idea is a potential answer to the problems of low participation and centralization that threaten the foundational ethos of DAOs.

As the conversation around the future of digital governance evolves, Buterin’s insights provide a framework that not only addresses current shortcomings but also anticipates the complexities of a rapidly changing technological landscape. With the integration of AI into governance, the future of DAOs may offer a more equitable and participatory model, reflecting a broader shift towards decentralized decision-making.
