Ahead of an artificial intelligence conference last April, peer reviewers evaluated submissions from an entity known as “Carl.” Unbeknownst to them, Carl was not a human researcher but an AI system developed by the Autoscience Institute, a company that says its model can accelerate the pace of artificial intelligence research. In the double-blind peer review process, three of Carl’s four papers were accepted, a sign of AI’s growing role in scientific inquiry.

Carl is part of an emerging cohort of so-called “AI scientists,” which includes systems like Robin and Kosmos, developed by the nonprofit FutureHouse, and The AI Scientist from Sakana AI. These AI scientists are constructed from multiple large language models and are designed not only to generate but also to test ideas and produce findings. Eliot Cowan, co-founder of Autoscience Institute, emphasized that these AI-driven systems can autonomously conduct literature reviews, devise hypotheses, carry out experiments, analyze data, and yield novel scientific outcomes.

The overarching aim, according to Cowan, is to make scientific research more efficient and to significantly increase its output. Other companies, such as Sakana AI, share this view and maintain that AI scientists are unlikely to fully replace human researchers. Even so, the rise of automated systems in science has generated a mix of enthusiasm and apprehension in the AI and scientific communities.

“You start feeling a little bit uneasy, because, hey, this is what I do,” remarked Julian Togelius, a professor of computer science at New York University who focuses on artificial intelligence. This sentiment is echoed by critics who worry that AI systems might supplant future researchers, inundate the scientific discourse with subpar or unreliable data, and threaten the credibility of scientific findings. Moreover, David Leslie, director of ethics and responsible innovation research at the Alan Turing Institute, raised concerns about the implications of integrating AI into a field that is inherently social and human in nature.

Automated technologies have already contributed to notable scientific achievements over the past five years. For example, AlphaFold, an AI system created by Google DeepMind, predicted the three-dimensional structures of proteins with remarkable precision and far faster than traditional laboratory methods. AlphaFold’s creators, Demis Hassabis and John Jumper, shared the 2024 Nobel Prize in Chemistry for this work.

As companies expand their use of AI in various facets of scientific discovery, Leslie warns against what he terms “computational Frankensteins”—a convergence of different AI infrastructures and algorithms aimed at simulating complex scientific practices. In 2025 alone, at least three organizations—Sakana AI, Autoscience Institute, and FutureHouse—have heralded their first “AI-generated” scientific results. Additionally, researchers at three federal laboratories in the U.S.—Argonne National Laboratory, Oak Ridge National Laboratory, and Lawrence Berkeley National Laboratory—have developed fully automated materials laboratories driven by AI.

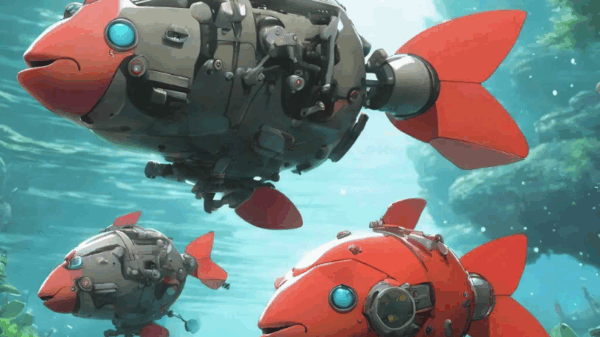

AI systems can identify patterns within vast datasets, potentially transforming fields such as materials science and subatomic particle physics. “They can basically make connections between millions, billions, trillions of variables,” Leslie noted, pointing to opportunities in domains where human cognition falls short. For instance, FutureHouse’s Robin mined existing literature to propose a therapeutic candidate for vision loss and devised experiments to test its efficacy.

Nevertheless, researchers are wary of the potential pitfalls. Nihar Shah, a computer scientist at Carnegie Mellon University, sees promise in AI facilitating new discoveries, but he also warns of “AI slop”: a flood of low-quality, AI-generated studies entering the scientific literature. Shah’s recent study evaluated two AI systems designed to carry out scientific tasks: Sakana’s AI Scientist-v2 and Agent Laboratory, created by AMD in partnership with Johns Hopkins University. It found that one of the systems reported accuracy as high as 100 percent on tasks even though the researchers had deliberately injected noise into the dataset, an implausible result.

Critics argue that generative AI has yet to demonstrate the ability to produce truly innovative ideas. A study found that the AI chatbot ChatGPT-4 generated only incremental discoveries, while another published in *Science Immunology* indicated that AI chatbots struggled to formulate insightful hypotheses in vaccinology. Despite these challenges, Shah maintains that human involvement in research will persist, stating, “Even if AI scientists become super-duper duper capable, still there’ll be a role for people.”

As scientific research evolves, experts stress the importance of scrutinizing the outputs of AI systems. Leslie calls for careful reflection on how AI affects scientific rigor and suggests that journals and conferences audit AI-generated research by tracing the methods and code behind it. Cowan says his team is developing systems that meet ethical standards comparable to those of traditional human-led experiments.

While the integration of AI systems raises pressing questions about the future of research, Togelius believes it is vital to focus on enhancing scientific capabilities rather than automating human roles out of existence. As the dialogue around AI’s impact on science continues, the challenge will be to harness its potential without compromising the human essence that has historically defined scientific inquiry.
