In a shift that may reshape digital health information, Google’s new AI Overviews are increasingly citing YouTube videos ahead of established medical authorities like the Mayo Clinic and WebMD in health-related searches. A recent study from the SEO software firm Authoritas indicates that YouTube videos are cited in 16.5% of AI-generated health overviews. The finding raises concerns about the credibility of medical advice provided by artificial intelligence and about the strategic implications for Google as a primary information gatekeeper.

The analysis examined 1,000 health-related keywords and found that the National Institutes of Health (NIH) was referenced in 12.1% of cases, while well-known consumer health sites like WebMD and Healthline appeared 10.9% and 9.6% of the time, respectively. This algorithmic tilt toward YouTube could fundamentally alter how millions of users access critical health information, potentially prioritizing less reliable sources over expert-reviewed content.

This reliance on YouTube represents a stark departure from Google’s historical approach to health and financial information, where the company emphasized expertise, authoritativeness, and trustworthiness (E-A-T). Traditionally, Google’s guidelines have favored peer-reviewed studies and content overseen by qualified professionals. Elevating YouTube, a platform characterized by a vast range of content quality—from credible medical experts to wellness influencers promoting unverified remedies—challenges this long-standing standard.

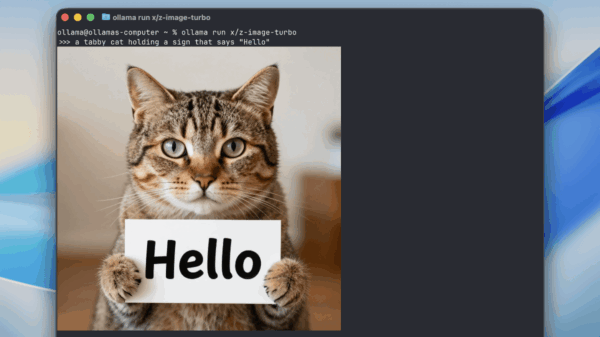

This preference appears to stem from several factors. Google’s ownership of YouTube allows for seamless integration within its data ecosystem, and the extensive library of transcribed video content provides an easily digestible text source for Large Language Models (LLMs) like those driving AI Overviews. The result is a feedback loop in which the AI is trained on content from Google’s own platform and then promotes it, benefiting Google’s financial interests but potentially compromising the accuracy of the health information users receive.

The variability of YouTube content heightens concerns about the trust users place in Google for sensitive health inquiries. While channels from reputable institutions like the Cleveland Clinic offer quality information, they coexist with misleading, anecdotal, or commercially driven content. Consequently, AI Overviews may inadvertently blend advice from a registered dietitian with that of a vlogger endorsing a non-scientific fad diet and present the result as a single, authoritative answer.

This issue compounds a problem health professionals have long warned about: the dangers of misinformation in medical contexts. The AI Overview feature appears to introduce a new vector for amplifying such misinformation. That prospect has alarmed many in the SEO community, who have spent years optimizing content to align with Google’s rigorous E-A-T standards, only to see a video platform gain precedence.

This trend comes against the backdrop of earlier missteps by AI Overviews, including instances where the system offered dangerously erroneous suggestions, such as recommending that users add non-toxic glue to pizza sauce. Such blunders, which went viral on social media, prompted Google to acknowledge the system’s limitations and to temporarily disable AI summaries for numerous queries.

The incidents highlight the AI’s struggle with nuance and context, a weakness that becomes far more serious when the system is applied to health-related information. As noted by The Verge, these errors reveal the model’s tendency toward “hallucinations,” a critical flaw in a system issuing health advice. With the stakes considerably higher in medical domains, the risk of misleading information becomes a pressing issue.

In response to the backlash, Google has adopted a defensive yet conciliatory stance. In a May 2024 blog post, Liz Reid, Head of Google Search, addressed the problematic answers and admitted that some were related to “real” queries. She announced that the company is implementing updates, including improved mechanisms for detecting nonsensical inquiries and reinforcing safeguards against user-generated forum content in health responses.

Despite these assurances, the systemic preference for YouTube in health searches raises profound questions about Google’s dual role as a commercial entity and a public information utility. Digital health publishers like Healthline, WebMD, and Verywell Health have invested extensively in developing authoritative content, and the rise of AI Overviews that favor videos threatens to undermine their business models while decreasing the quality of health information available online.

For medical practitioners, the implications are equally concerning. Many doctors already see patients who arrive with misinformation sourced from social media. An AI tool that treats unvetted video content as equivalent to authoritative advice could further complicate the delivery of evidence-based care and erode patients’ medical literacy.

As Google advances its AI capabilities, the company faces critical challenges in balancing business interests with public accountability. The path ahead will test Google’s commitment to ensuring that its AI tools prioritize credible expertise over platform promotion. The implications of this development extend beyond corporate strategy; they could fundamentally redefine how health information is accessed and trusted in an increasingly digital world.
